Published online Jan 19, 2020. doi: 10.5498/wjp.v10.i1.1

Peer-review started: June 23, 2019

First decision: August 20, 2019

Revised: November 14, 2019

Accepted: November 26, 2019

Article in press: November 26, 2019

Published online: January 19, 2020

Processing time: 182 Days and 11.8 Hours

Cognitive issues such as Alzheimer’s disease and other dementias confer a substantial negative impact. Problems relating to sensitivity, subjectivity, and inherent bias can limit the usefulness of many traditional methods of assessing cognitive impairment.

To determine cut-off scores for classification of cognitive impairment, and assess Cognivue® safety and efficacy in a large validation study.

Adults (age 55-95 years) at risk for age-related cognitive decline or dementia were invited via posters and email to participate in two cohort studies conducted at various outpatient clinics and assisted- and independent-living facilities. In the cut-off score determination study (n = 92), optimization analyses by positive percent agreement (PPA) and negative percent agreement (NPA), and by accuracy and error bias were conducted. In the clinical validation study (n = 401), regression, rank linear regression, and factor analyses were conducted. Participants in the clinical validation study also completed other neuropsychological tests.

For the cut-off score determination study, 92 participants completed St. Louis University Mental Status (SLUMS, reference standard) and Cognivue® tests. Analyses showed that SLUMS cut-off scores of < 21 (impairment) and > 26 (no impairment) corresponded to Cognivue® scores of 54.5 (NPA = 0.92; PPA = 0.64) and 78.5 (NPA = 0.5; PPA = 0.79), respectively. Therefore, conservatively, Cognivue® scores of 55-64 corresponded to impairment, and 74-79 to no impairment. For the clinical validation study, 401 participants completed ≥ 1 testing session, and 358 completed 2 sessions 1-2 wk apart. Cognivue® classification scores were validated, demonstrating good agreement with SLUMS scores (weighted κ = 0.57; 95%CI: 0.50-0.63). Reliability analyses showed similar scores across repeated testing for Cognivue® (R² = 0.81; r = 0.90) and SLUMS (R² = 0.67; r = 0.82). The psychometric validity of Cognivue® was demonstrated against traditional neuropsychological tests. Cognivue® scores were most closely correlated with measures of verbal processing, manual dexterity/speed, visual contrast sensitivity, visuospatial/executive function, and speed/sequencing.

Cognivue® scores ≤ 50 avoid misclassification of impaired individuals, and scores ≥ 75 avoid misclassification of unimpaired individuals. The validation study demonstrates good agreement between Cognivue® and SLUMS, superior test-retest reliability of Cognivue® compared with SLUMS, and good psychometric validity.

Core tip: This study was designed to address how to identify early cognitive impairment and how to monitor it over time with high reliability, which is critical in patients at risk for age-related cognitive decline. The study results demonstrated that Cognivue, through its unique adaptive psychophysics technology, had good validity and psychometric properties, as well as superior test-retest reliability compared with the St. Louis University Mental Status examination. Therefore, as a quantitative, computerized assessment tool, Cognivue represents a safe and effective method for clinicians to identify and track cognitive impairment more conveniently and effectively.

- Citation: Cahn-Hidalgo D, Estes PW, Benabou R. Validity, reliability, and psychometric properties of a computerized, cognitive assessment test (Cognivue®). World J Psychiatr 2020; 10(1): 1-11

- URL: https://www.wjgnet.com/2220-3206/full/v10/i1/1.htm

- DOI: https://dx.doi.org/10.5498/wjp.v10.i1.1

Tools for assessing cognitive function decline are often limited by issues of measurement efficacy[1-5], testing bias[5,6], inconsistent retest reliability[7], or cost[8]. In addition, some tests cannot be administered by non-clinicians, and excessive test length can make some tools impractical for routine use in clinical practice[6,9,10]. Cognivue® is a physiological and psychophysical computerized tool for the automated assessment of cognitive functioning that is not dependent on traditional question-and-answer testing. It was developed based on extensive basic research into the neural mechanisms of functional impairment in memory, aging, and dementia. This work was complemented by clinical research demonstrating deficits in letter, word, and motion perception in patients with Alzheimer’s disease[11-14].

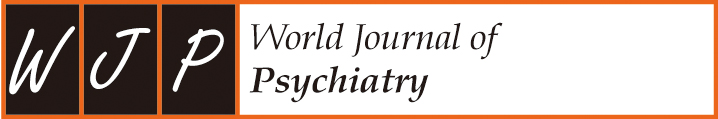

Cognivue® consists of 3 sub-batteries of 10 separately scored sub-tests presented in a 10 min automated sequence. The first sub-battery (visuomotor and visual salience) measures adaptive motor control and dynamic visual contrast sensitivity. These results do not count towards the final score. They are used only to adapt the remaining sub-tests to the response characteristics of the individual subject. This ensures that only cognition is evaluated, and the subject is not at a disadvantage because of visual or motor deficits. The subsequent 2 sub-batteries include perceptual processing (letter, word, shape, and motion discrimination), and memory processing (letter, word, shape, and motion memory). Cognivue® is Food and Drug Administration (FDA)-cleared for use as an adjunctive tool to aid in assessing cognitive impairment in subjects aged 55-95 years and is not intended to be used alone for diagnostic purposes.

This manuscript presents the full results of 2 clinical studies (previously presented at the 2019 annual meeting of the American Association of Geriatric Psychiatry) conducted to assess the efficacy and safety of Cognivue®. The purpose of the first study was to establish Cognivue® cut-off values (i.e., impaired, intermediate, and unimpaired cognitive function) compared with a reference standard, the St. Louis University Mental Status (SLUMS) Examination. The objective of the subsequent FDA pivotal trial was to clinically validate the agreement between the Cognivue® classifications and the SLUMS classifications, examine test-retest reliability, and determine the psychometric properties of the tests.

The study to determine Cognivue® cut-off scores for the classification of cognitive impairment enrolled 92 adults 55 to 95 years of age from assisted and independent-living communities who were at risk for age-related cognitive decline or dementia. Subjects were invited via posters and email to complete both the SLUMS (reference standard) and Cognivue® tests. Exclusion criteria included the presence of motor or visual disabilities and the inability to provide informed consent.

The SLUMS is an 11-item questionnaire with scores ranging from 0 to 30, designed to measure orientation, memory, attention, and executive functions. As previously described, the Cognivue® quantitative assessment tool includes 3 sequentially automated sub-batteries (visuomotor ability, perceptual processing, and memory processing) over a brief 10 min session (Table 1 and Figure 1). The first sub-battery is used to calibrate the subsequent tests based on the individual’s visual and motor abilities. Participants in this study were stratified according to SLUMS score (> 26 = unimpaired, 21-26 = mildly impaired, and < 21 = impaired)[15].

| Sub-battery and sub-test | Description |
|---|---|
| Basic motor and visual ability | |
| Adaptive motor control test | Assesses visuomotor responsiveness using speed and accuracy measures. Measures the subject’s ability to control the rotatory movement of the CogniWheel™ in response to rotational visual stimuli |
| Visual salience test | Assesses basic visual processing functions. Measures the subject’s ability to identify a wedge filled by a random pattern of black and white dots shown on a neutral (gray) background |
| Perceptual processing | |
| Letter discrimination | Measures the subject’s perceptual processing of different forms, despite the addition of increasing amounts of clutter. Discriminate real English letters from a variety of non-letter, letter-like shapes |
| Word discrimination | As above. Discriminate real 3-letter words from 3-letter non-words |
| Shape discrimination | As above. Discriminate a circle filled with a common shape from the rest of the display filled with other common shapes |
| Motion discrimination | As above. Discriminate a circle filled with one direction of dot motion from the rest of the display filled with another direction of dot motion |
| Memory processing | |
| Letter memory | Assesses memory using specialized sets of visual stimuli. Measures the subject’s ability to recall which letter was presented as a pre-cue, and then select that letter from a display of alternative items, despite the addition of increasing amounts of clutter. Select the correct letter of the English alphabet |
| Word memory | As above. Select the correct 3-letter word |
| Shape memory | As above. Select the correct shape |
| Motion memory | As above. Select the correct direction of motion |

Two different optimization methods were used to determine the cut-off values for Cognivue® scores that corresponded to the SLUMS classifications unimpaired, intermediate (mildly impaired), and impaired. The first method used a minimization algorithm to optimize the negative percent agreement (NPA) and positive percent agreement (PPA) between Cognivue® and SLUMS scores in the objective function, where TN, FP, TP, and FN denote true negatives, false positives, true positives, and false negatives:

$$\text{(1)}\quad \text{NPA} = \frac{\text{TN}}{\text{FP} + \text{TN}} \times 100\%$$

$$\text{(2)}\quad \text{PPA} = \frac{\text{TP}}{\text{FN} + \text{TP}} \times 100\%$$
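The cut-off search behind the first method can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the study's implementation: it assumes that a Cognivue® score below a candidate cut-off counts as test-positive (impaired), that candidates are midpoints between adjacent observed scores (in line with half-point cut-offs such as 63.5 in the results), and that the objective is the Euclidean distance of (NPA, PPA) from perfect agreement (1, 1). The study does not state its exact objective function; the function names and the toy data are hypothetical.

```python
import math


def percent_agreement(test_pos, ref_pos):
    """Return (NPA, PPA) as proportions for paired boolean classifications."""
    tp = sum(1 for t, r in zip(test_pos, ref_pos) if t and r)
    fp = sum(1 for t, r in zip(test_pos, ref_pos) if t and not r)
    tn = sum(1 for t, r in zip(test_pos, ref_pos) if not t and not r)
    fn = sum(1 for t, r in zip(test_pos, ref_pos) if not t and r)
    return tn / (tn + fp), tp / (tp + fn)


def best_cutoff_by_agreement(scores, ref_pos):
    """Sweep midpoint cut-offs; return (loss, cut-off, NPA, PPA) with the smallest loss.

    Assumed (hypothetical) objective: distance of (NPA, PPA) from the ideal point (1, 1).
    """
    if all(ref_pos) or not any(ref_pos):
        raise ValueError("reference labels must contain both positives and negatives")
    values = sorted(set(scores))
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for cut in candidates:
        test_pos = [s < cut for s in scores]  # lower score => flagged as impaired
        npa, ppa = percent_agreement(test_pos, ref_pos)
        loss = math.hypot(1 - npa, 1 - ppa)
        if best is None or loss < best[0]:
            best = (loss, cut, npa, ppa)
    return best


# Invented toy data: Cognivue-like scores and SLUMS-impaired flags (not study data).
scores = [40, 48, 52, 60, 66, 70, 77, 81, 85, 90]
slums_impaired = [True, True, True, False, True, False, False, False, False, False]
loss, cut, npa, ppa = best_cutoff_by_agreement(scores, slums_impaired)
print(f"cut-off {cut}: NPA {npa:.2f}, PPA {ppa:.2f}")  # cut-off 68.0: NPA 0.83, PPA 1.00
```

A different distance (for example, the sum of the two disagreements) could select a different cut-off, so the objective should be treated as a tunable assumption.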

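The second method used a minimization algorithm with two measures in the objective function, inaccuracy and error bias, where error bias is the contrast ratio (difference/sum) of FPs and FNs:

$$\text{(1)}\quad \text{Inaccuracy} = 1 - \frac{\text{TP} + \text{TN}}{\text{Total}}$$

$$\text{(2)}\quad \text{Error bias} = \frac{\text{FP} - \text{FN}}{\text{FP} + \text{FN}}$$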
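A sketch of the second method in plain Python, assuming (as a hypothetical choice, since the study does not say how the two measures were combined) that the objective is inaccuracy plus the absolute error bias with equal weights. Scores below the cut-off are again treated as impaired, candidate cut-offs are midpoints between adjacent observed scores, and the function names and toy data are invented for illustration.

```python
def inaccuracy_and_bias(scores, ref_pos, cut):
    """Return (inaccuracy, error bias) when scores below `cut` are flagged as impaired."""
    tp = fp = tn = fn = 0
    for score, ref in zip(scores, ref_pos):
        flagged = score < cut
        if flagged and ref:
            tp += 1
        elif flagged:
            fp += 1
        elif ref:
            fn += 1
        else:
            tn += 1
    inaccuracy = 1 - (tp + tn) / (tp + fp + tn + fn)
    errors = fp + fn
    # Contrast ratio of the two error types; defined as 0 when there are no errors.
    bias = (fp - fn) / errors if errors else 0.0
    return inaccuracy, bias


def best_cutoff_by_accuracy(scores, ref_pos, bias_weight=1.0):
    """Midpoint cut-off minimizing inaccuracy + bias_weight * |error bias| (assumed objective)."""
    values = sorted(set(scores))
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def objective(cut):
        inaccuracy, bias = inaccuracy_and_bias(scores, ref_pos, cut)
        return inaccuracy + bias_weight * abs(bias)

    return min(candidates, key=objective)


# Invented toy data (not study data).
scores = [40, 48, 52, 60, 66, 70, 77, 81, 85, 90]
slums_impaired = [True, True, True, False, True, False, False, False, False, False]
print(best_cutoff_by_accuracy(scores, slums_impaired))  # 63.0
```

Penalizing |error bias| favors cut-offs where false positives and false negatives balance, which is one plausible reason why two objectives applied to the same data can select different cut-offs.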

The validation study enrolled 401 adults 55 to 95 years of age at risk for age-related cognitive decline or dementia residing in independent-living communities who were invited via posters and email. Exclusion criteria included the presence of motor or visual disabilities and the inability to provide informed consent. Participants completed Cognivue®, SLUMS, and an array of traditional neuropsychological tests (Table 2). Results of the cut-off determination study were used to group the participants, with subjects in the intermediate categories (low to moderate impairment) being combined with the unimpaired category for each testing modality.

| Analysis | Purpose and methods |
|---|---|
| Validation of classification scores | Purpose: Assess the validity of the previously defined Cognivue® cut-off scores in a larger sample of subjects. Methods: Scores on Cognivue® and SLUMS were compared using regression and classification analyses; PPA and NPA were calculated |
| Assessment of retest reliability | Purpose: Compare scores from repeated administration of Cognivue® to assess retest reliability, and compare findings with parallel results from SLUMS. Methods: Repeated Cognivue® and SLUMS testing was conducted in 2 sessions 1-2 wk apart, with regression and rank linear regression analyses performed |
| Assessment of score psychometrics vs other neuropsychological tests | Purpose: Compare scores on Cognivue® and other neuropsychological tests to describe their relationship, and compare them with SLUMS. Methods: 401 participants completed 10 different tests [SLUMS, SLUMS-clock drawing¹, SLUMS-animal naming¹, RAVLT, TMT-A, TMT-B, Benton JOLO, figural memory, PPB, HVCS, GDS (15-item)]; rank linear regression analysis and factor analysis were performed |

Agreement analysis of the impairment classifications defined in the cut-off determination study was performed, along with an assessment of the retest reliability of Cognivue® and a comparison of its psychometric properties with those of other neuropsychological tests. Among other analyses, regression analyses were used for agreement and retest reliability, and rank linear regression and factor analysis for the psychometric comparisons (Table 2).

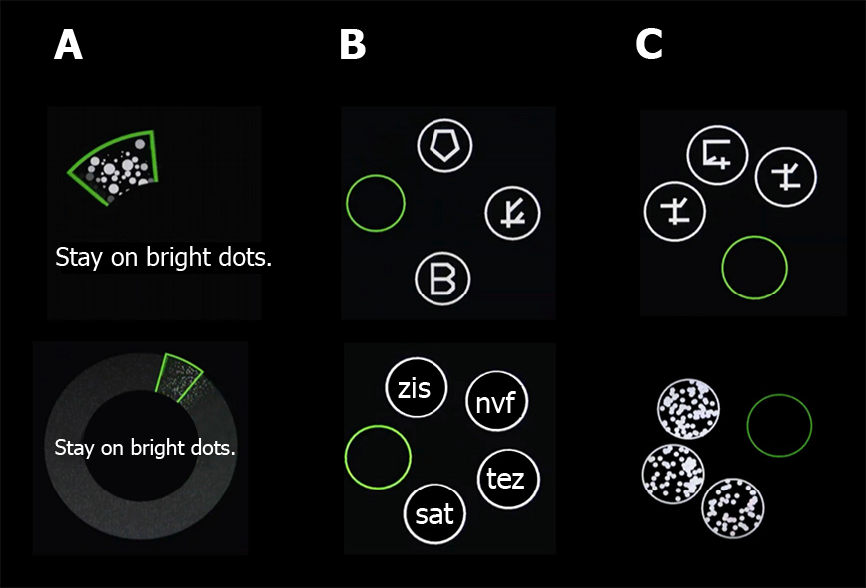

A total of 92 participants completed both the SLUMS and the Cognivue® tests at one of five sites. Individual scores for study participants are shown in Figure 2. Based on SLUMS score, 50% were unimpaired (> 26), 38% were mildly impaired (21-26), and 12% were impaired (< 21) (Figure 2).

In the analysis optimizing NPA and PPA, the objective function for the SLUMS impaired cut-off (< 21) reached its minimum of 0.297 at a Cognivue® cut-off score of 63.5 (NPA = 0.80; PPA = 0.79), and the objective function for the SLUMS unimpaired cut-off (> 26) reached its minimum of 0.324 at a Cognivue® cut-off score of 73.5 (NPA = 0.68; PPA = 0.67). In the analysis optimizing accuracy and error bias, the SLUMS cut-off score of < 21 (impaired) corresponded to a Cognivue® cut-off score of 54.5 (NPA = 0.92; PPA = 0.64), and the SLUMS cut-off score of > 26 (unimpaired) corresponded to a Cognivue® cut-off score of 78.5 (NPA = 0.5; PPA = 0.79).

Based on the 2 separate analysis techniques, it was determined that Cognivue® scores between 55 and 64 corresponded to SLUMS scores indicating impairment (0 to 20), and Cognivue® scores between 74 and 79 corresponded to SLUMS scores indicating no impairment (27 to 30). Cognivue® scores between these two ranges (64 to 74) corresponded to SLUMS scores of 21 to 26. Therefore, Cognivue® scores ≤ 50 provide a conservative standard consistent with cognitive impairment that will avoid misclassification of an individual who is impaired, and scores ≥ 75 provide a conservative cut-off consistent with no impairment that will avoid misclassification of an individual who is unimpaired (Table 3).

| Classification | SLUMS cut-off scores | Cognivue® cut-off scores |
|---|---|---|
| Impaired | < 21 | ≤ 50 |
| Mildly impaired (intermediate) | 21-26 | 51-74 |
| Unimpaired | > 26 | ≥ 75 |
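The Table 3 cut-offs translate directly into threshold rules. The sketch below uses hypothetical function names. It assumes that fractional Cognivue® scores falling between the reported integer bands (for example, 50.5) belong to the intermediate band, which the study does not specify. It also includes the binary grouping used later for PPA/NPA, in which intermediate is merged with unimpaired.

```python
def slums_category(score):
    """Map a SLUMS score (0-30) to a category using the Table 3 cut-offs."""
    if not 0 <= score <= 30:
        raise ValueError("SLUMS scores range from 0 to 30")
    if score < 21:
        return "impaired"
    if score > 26:
        return "unimpaired"
    return "intermediate"


def cognivue_category(score):
    """Map a Cognivue score to a category using the conservative Table 3 cut-offs."""
    if score <= 50:
        return "impaired"
    if score >= 75:
        return "unimpaired"
    return "intermediate"  # assumption: anything strictly between 50 and 75


def collapse(category):
    """Binary grouping for PPA/NPA: intermediate is merged with unimpaired."""
    return category == "impaired"


for s in (42, 63, 80):
    print(s, cognivue_category(s), collapse(cognivue_category(s)))
```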

Part 1: Validation analyses: A total of 401 participants completed at least 1 testing session; based on SLUMS score, 30% were unimpaired (> 26), 43% were mildly impaired (21-26), and 27% were impaired (< 21). Validation analysis of the previously defined Cognivue® classification scores yielded a PPA of 56% [95% Wilson interval (WI), 0.47-0.65] and an NPA of 95% (95%WI, 0.91-0.97), with a weighted κ of 0.57 [95% confidence interval (CI): 0.50-0.63]. This suggests a significant categorical relationship between Cognivue® and SLUMS scores. An analysis that excluded the intermediate groups as indeterminate showed a stronger relationship between Cognivue® and SLUMS categories for impaired or unimpaired, with a PPA of 82% (95%WI, 0.72-0.89) and an NPA of 98% (95%WI, 0.93-0.99).
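Both statistics reported above can be computed with the Python standard library. The sketch below is illustrative rather than the study's code. The Wilson score interval is the standard formula for a binomial proportion such as a PPA or NPA. For the weighted κ, the study does not state its weighting scheme, so linear disagreement weights are an assumption here (quadratic weights are offered as an option). Function names are hypothetical, and the counts in the usage lines are invented.

```python
import math
from statistics import NormalDist


def wilson_interval(successes, n, confidence=0.95):
    """Wilson score interval for a binomial proportion such as PPA or NPA."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z / denom * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return centre - half, centre + half


def weighted_kappa(table, weights="linear"):
    """Cohen's weighted kappa for a k x k table of counts with ordered categories.

    Assumption: linear disagreement weights by default; pass "quadratic" otherwise.
    """
    k = len(table)
    n = sum(map(sum, table))
    rows = [sum(r) for r in table]
    cols = [sum(table[i][j] for i in range(k)) for j in range(k)]
    power = 1 if weights == "linear" else 2
    observed = expected = 0.0
    for i in range(k):
        for j in range(k):
            w = (abs(i - j) / (k - 1)) ** power  # 0 on the diagonal, 1 at the extremes
            observed += w * table[i][j]
            expected += w * rows[i] * cols[j] / n  # chance-expected count
    return 1 - observed / expected


print(wilson_interval(45, 50))  # invented counts
print(weighted_kappa([[30, 8, 1], [6, 40, 9], [0, 7, 50]]))  # invented 3 x 3 table
```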

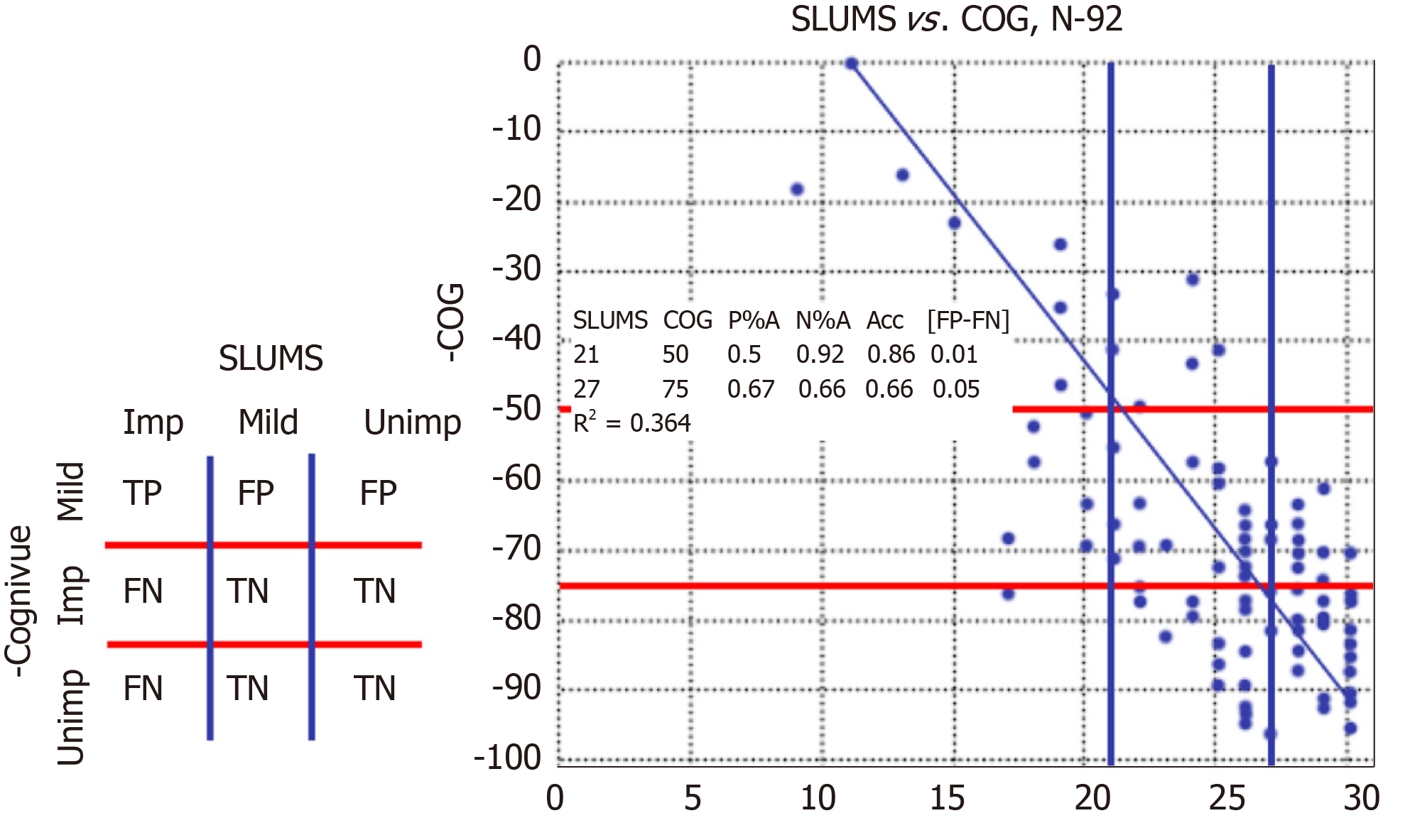

Part 2: Retest reliability analyses: Data were available for 358 participants who completed 2 Cognivue® testing sessions, 1-2 wk apart. Regression analyses of test-retest reliability produced similar scores across repeat testing for Cognivue® (regression fit: R² = 0.81; r = 0.90) (Figure 3A) and SLUMS (regression fit: R² = 0.67; r = 0.82) (Figure 3B).
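Assuming each regression fit is an ordinary least-squares line with an intercept, the two reported statistics are linked: for a simple linear regression, the coefficient of determination equals the square of the Pearson correlation. The reported values satisfy this to rounding:

$$R^2 = r^2: \qquad 0.90^2 = 0.81 \ (\text{Cognivue}), \qquad 0.82^2 = 0.6724 \approx 0.67 \ (\text{SLUMS})$$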

Agreement analysis for Cognivue® test-retest reliability showed strong agreement between participant classifications on the first and second Cognivue® tests (PPA = 89%; NPA = 93%), with an intraclass correlation coefficient (ICC) of 0.99 between tests 1 and 2 (P < 0.001). The agreement analysis for SLUMS also showed strong agreement between participant classifications on the first and second SLUMS tests (PPA = 87%; NPA = 87%; ICC, 0.87; P < 0.001).
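The text does not state which ICC form was used. As one hedged illustration, the Shrout and Fleiss ICC(2,1) (two-way random effects, absolute agreement, single measurement) can be computed from two-way ANOVA mean squares, with the two test sessions playing the role of raters. Because this form measures absolute agreement, a systematic practice effect between sessions lowers it even when participants keep their rank order. The function name and the score rows in the example are hypothetical.

```python
def icc_2_1(data):
    """ICC(2,1) for n subjects x k sessions, given as a list of equal-length rows."""
    n, k = len(data), len(data[0])
    grand = sum(map(sum, data)) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(row[j] for row in data) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between sessions
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


same = [[60, 60], [70, 70], [80, 80]]
shifted = [[60, 65], [70, 75], [80, 85]]  # everyone scores 5 points higher at retest
print(icc_2_1(same), icc_2_1(shifted))
```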

The Cognivue® classifications of impaired, intermediate, and unimpaired did not differ significantly across repeat testing. Analyzing the 3 classifications separately yielded PPAs of 89% for impaired, 57% for intermediate, and 87% for unimpaired (Table 4). For SLUMS, by contrast, the relationships between scores and classifications across repeated testing were less robust than those for Cognivue®: analyzing the 3 SLUMS classifications separately yielded PPAs of 87% for impaired, 55% for mildly impaired, and 51% for unimpaired.

| 2nd test (rows) / 1st test (columns) | Impaired | Intermediate | Unimpaired | Total |
|---|---|---|---|---|
| Impaired | 42 (89%) | 21 | 0 | 63 |
| Intermediate | 5 | 41 (57%) | 32 | 78 |
| Unimpaired | 0 | 10 | 207 (87%) | 217 |
| Total | 47 | 72 | 239 | 358 |
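The percentages in Table 4 and the overall Cognivue® retest PPA/NPA can be recomputed from the cell counts. This sketch assumes that the first test serves as the reference (the columns of Table 4). For the binary figures, it also assumes that intermediate is merged with unimpaired, as described in the Methods. Under those assumptions it prints 89%, 57%, and 87% per category, and PPA 89% with NPA 93% overall, matching the reported values. The function names are hypothetical.

```python
LEVELS = ("impaired", "intermediate", "unimpaired")

# Cell counts from Table 4: rows = 2nd test, columns = 1st test, in LEVELS order.
TABLE4 = [
    [42, 21, 0],
    [5, 41, 32],
    [0, 10, 207],
]


def column_agreement(table):
    """For each 1st-test category, the share classified the same way at the 2nd test."""
    k = len(table)
    col_totals = [sum(table[r][c] for r in range(k)) for c in range(k)]
    return [table[c][c] / col_totals[c] for c in range(k)]


def collapsed_ppa_npa(table, positive=0):
    """Binary PPA/NPA: category `positive` vs. all other categories merged.

    The 1st test is treated as the reference (an assumption for this sketch).
    """
    k = len(table)
    others = [c for c in range(k) if c != positive]
    ref_pos = sum(table[r][positive] for r in range(k))
    ref_neg = sum(table[r][c] for r in range(k) for c in others)
    tp = table[positive][positive]
    fp = sum(table[positive][c] for c in others)  # negative at test 1, positive at test 2
    tn = ref_neg - fp
    return tp / ref_pos, tn / ref_neg


for name, share in zip(LEVELS, column_agreement(TABLE4)):
    print(f"{name}: {share:.0%}")
ppa, npa = collapsed_ppa_npa(TABLE4)
print(f"PPA {ppa:.0%}, NPA {npa:.0%}")
```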

Psychometric analysis: This analysis was based on data from the 401 participants who completed at least one testing session, which included Cognivue®, SLUMS, and a battery of other traditional neuropsychological tests. For each psychometric test, participants’ score ranks were plotted against their SLUMS score ranks and against their Cognivue® score ranks, together with the fitted linear regression lines, their parameters, and their 95% confidence intervals.
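Rank linear regression can be sketched in plain Python. First, both score lists are converted to ranks, with tied values sharing their average rank. Then an ordinary least-squares line is fitted to the ranks. The Pearson correlation of the ranks is Spearman's ρ. This is an illustrative sketch with hypothetical function names and invented example scores. It does not compute the 95% confidence intervals for the line parameters that the study reports.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for m in range(i, j + 1):
            ranks[order[m]] = mean_rank
        i = j + 1
    return ranks


def rank_regression(x, y):
    """OLS line of rank(y) on rank(x); returns (slope, intercept, r = Spearman's rho)."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r


# Invented scores: e.g., a neuropsychological test vs. Cognivue-like scores.
print(rank_regression([12, 15, 15, 20, 28], [55, 61, 70, 68, 83]))
```

Working on ranks makes the fit insensitive to the very different scales and skewed distributions of the individual tests, which is a common reason to prefer it over regression on raw scores.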

The data were then condensed using a factor analysis of the neuropsychological test scores, in which tests were grouped according to the relationships between their scores across participants. The factor analysis converged in six iterations to a five-factor solution, with Cognivue® scores most closely correlated with the following types of measures: verbal processing (animal naming and Rey Auditory Verbal Learning Test), manual dexterity and speed (Peg Board), visual contrast sensitivity, visuospatial and executive function (Trail Making Test-B and judgment of line orientation), and speed and sequencing (Trail Making Test-A) (Table 5).

| Test | Component 1 | Component 2 | Component 3 | Component 4 | Component 5 |
|---|---|---|---|---|---|
| SLUMS-clock drawing | 0.420 | 0.338 | 0.038 | 0.367 | -0.049 |
| SLUMS-animal naming | 0.529¹ | 0.346 | 0.146 | 0.365 | -0.125 |
| RAVLT-A-1 | 0.718¹ | 0.209 | 0.034 | 0.128 | -0.040 |
| RAVLT-A-2 | 0.820¹ | 0.204 | 0.080 | 0.157 | -0.138 |
| RAVLT-A-3 | 0.832¹ | 0.193 | 0.120 | 0.190 | -0.057 |
| RAVLT-A-4 | 0.847¹ | 0.200 | 0.143 | 0.184 | -0.040 |
| RAVLT-A-5 | 0.863¹ | 0.210 | 0.080 | 0.182 | -0.013 |
| RAVLT-B-1 | 0.579¹ | 0.213 | 0.104 | 0.178 | -0.060 |
| RAVLT-A-6 | 0.852¹ | 0.134 | 0.093 | 0.170 | -0.051 |
| RAVLT-A-7 | 0.860¹ | 0.159 | 0.117 | 0.164 | -0.040 |
| RAVLT-hits | 0.670¹ | 0.052 | 0.128 | 0.252 | -0.003 |
| RAVLT-fps | -0.408 | -0.017 | -0.111 | -0.041 | 0.125 |
| Peg Board-Left | 0.247 | 0.796² | 0.297 | 0.120 | -0.090 |
| Peg Board-Right | 0.297 | 0.752² | 0.206 | 0.160 | -0.186 |
| Peg Board-Bimanual | 0.293 | 0.822² | 0.230 | 0.134 | -0.137 |
| Contrast-Left | 0.146 | 0.133 | 0.801³ | 0.110 | -0.068 |
| Contrast-Right | 0.160 | 0.156 | 0.802³ | 0.094 | -0.106 |
| Contrast-Binocular | 0.189 | 0.183 | 0.833³ | 0.132 | -0.153 |
| TMT-B-Time | -0.312 | -0.088 | -0.213 | -0.788⁴ | 0.116 |
| TMT-B-Errors | -0.266 | -0.072 | -0.197 | -0.815⁴ | 0.085 |
| Benton JOLO | 0.185 | 0.196 | -0.024 | 0.499⁴ | -0.344 |
| TMT-A-Time | -0.115 | -0.185 | -0.158 | -0.150 | 0.862⁵ |
| TMT-A-Errors | -0.081 | -0.058 | -0.134 | -0.068 | 0.902⁵ |
| Figural memory | 0.272 | 0.243 | 0.202 | 0.376 | 0.047 |
| GDS | -0.119 | -0.341 | 0.207 | -0.329 | -0.008 |
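Table 5 is organized by component, and the text reports convergence in six iterations. This pattern suggests a principal component extraction followed by an orthogonal rotation such as varimax. The study does not name its software or rotation method, so the NumPy sketch below is offered only as an illustration under that assumption. It computes principal-component loadings from the correlation matrix of a participants × tests score array and applies a plain varimax rotation without Kaiser normalization. The function names and the synthetic data are hypothetical.

```python
import numpy as np


def pca_loadings(data, n_components):
    """Unrotated loadings from the correlation matrix of a (participants x tests) array."""
    corr = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)          # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:n_components]   # keep the largest ones
    return eigvecs[:, top] * np.sqrt(eigvals[top])


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation; returns (rotated loadings, iterations used)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for iteration in range(1, max_iter + 1):
        rotated = loadings @ rotation
        target = rotated ** 3 - rotated @ np.diag((rotated ** 2).sum(axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        new_criterion = s.sum()
        if criterion and new_criterion < criterion * (1 + tol):
            break  # criterion stopped improving
        criterion = new_criterion
    return loadings @ rotation, iteration


rng = np.random.default_rng(0)
scores = rng.normal(size=(200, 8))  # synthetic stand-in for test scores
scores[:, 1] += scores[:, 0]        # induce two correlated clusters of tests
scores[:, 5] += scores[:, 4]
unrotated = pca_loadings(scores, n_components=2)
rotated, iterations = varimax(unrotated)
print(np.round(rotated, 3), iterations)
```

Because the rotation is orthogonal, each test's communality (row sum of squared loadings) is unchanged; only the distribution of loadings across components shifts toward a simpler structure.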

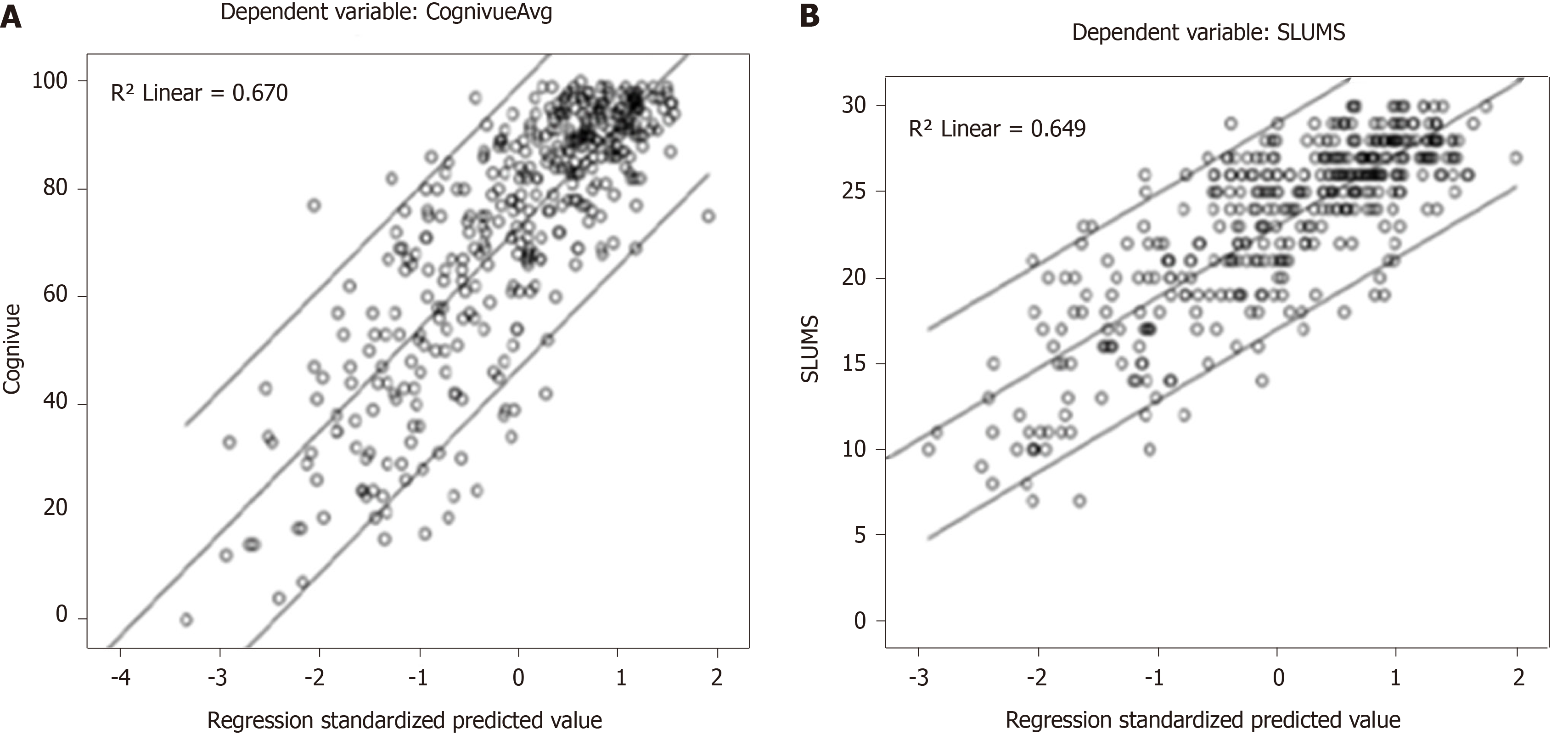

The five factor scores for each participant were entered into a multiple linear regression analysis predicting Cognivue® scores, which yielded an adjusted R² of 0.67 (Figure 4A). A similar analysis for SLUMS scores yielded an adjusted R² of 0.65 (Figure 4B).
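A minimal NumPy sketch of this step: regress a score on the five factor scores plus an intercept using ordinary least squares. Then apply the usual adjustment, adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1), where n is the number of participants and p = 5 predictors. The function name and the synthetic data are hypothetical. The study's exact model specification beyond "multiple linear regression" is not stated.

```python
import numpy as np


def adjusted_r2(factor_scores, target):
    """OLS of target on factor scores (plus intercept); returns adjusted R^2."""
    n, p = factor_scores.shape
    design = np.column_stack([np.ones(n), factor_scores])
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    residuals = target - design @ coef
    r2 = 1 - residuals @ residuals / ((target - target.mean()) ** 2).sum()
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


rng = np.random.default_rng(1)
factors = rng.normal(size=(120, 5))  # synthetic five-factor scores
score = factors @ np.array([3.0, 2.0, 1.0, 0.5, 0.0]) + rng.normal(scale=2.0, size=120)
print(round(adjusted_r2(factors, score), 3))
```

The adjustment penalizes each added predictor, so comparing adjusted rather than raw R² between the Cognivue® and SLUMS models keeps the comparison fair when both use the same five factors.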

In the US, estimates of the prevalence of mild cognitive impairment range from 15% to 20% among those ≥ 65 years of age[16], and from 9.9% to 35.2% among those ≥ 70 years of age[17-19], representing a substantial potential impact on both direct and indirect costs. Studies show that cognitive issues such as Alzheimer’s disease and other dementias confer substantial negative impacts, including increased hospitalization, assisted living stays, home health care visits, and risk of in-hospital mortality, as well as increased costs compared with those without such impairments[16].

Cognivue® was designed to overcome some of the limitations inherent in traditional paper-and-pencil tests for assessing cognitive impairment, such as limited sensitivity[1,2], subjectivity[3], inconsistent test-retest reliability[7], and educational, language, gender, and cultural biases[5,6].

Cognivue® eliminates these biases through its unique adaptive psychophysics technology, which focuses on whether the subject can detect and identify stimuli, differentiate between stimuli, and describe the magnitude or nature of the difference. These abilities are unrelated to the subject’s level of education or socioeconomic status. Furthermore, the first sub-battery of tests assesses the individual’s visual and motor abilities. These results are used only to adapt the subsequent tests, thereby eliminating bias related to visual and motor deficits so that only the individual’s cognitive function is assessed.

The dynamic, adaptive technology avoids the memorization issues associated with current paper-and-pencil tests. The test-retest results of this trial suggest that Cognivue® may help eliminate or reduce the effect of memorization and human error/variability on retest reliability[7], whereas repeat testing with SLUMS showed lower retest reliability. Because the test is adaptive, Cognivue® subjects are unable to memorize answers.

Other barriers to cognitive testing in clinical practice include the added costs and reduced scheduling flexibility that result when a test must be administered by a provider[6,9,20]. Cognivue® is self-administered by the patient and can be initiated by non-clinician support staff. Workload is also reduced because Cognivue® can track and monitor outcomes across repeat tests over time.

In a survey of primary care providers, the diagnosis and treatment of mild cognitive impairment were affected by a negative attitude toward the importance of early diagnosis[21]. Recent recommendations recognized that cognitive impairment occurs on a continuum, progressing from age-related decline to mild cognitive impairment and then to dementia[22]. Early identification of cognitive impairment can lead to earlier management, which can improve prognosis, decrease morbidity, and allow early discussion about decision-making[23,24]. In fact, patients often identify assistance in planning for future treatment as a factor influencing their willingness to be screened[22]. In clinical practice, physician clinical judgment is less effective than structured tools for recognizing mild cognitive impairment[25]. The 2017 Geriatric Summit on Assessing Cognitive Disorders Among the Aging Population, sponsored by the National Academy of Neuropsychology, emphasized the importance of ongoing screening and the need for automated tools for assessing and recording patients’ results over time[26]. Cognivue® provides an easy-to-use, reliable, computerized testing tool that can help clinicians meet these needs.

A limitation of the present studies validating Cognivue® is the use of a single reference standard, SLUMS. Although Cognivue® has not yet been compared with the Mini-Mental State Examination (MMSE) or the Montreal Cognitive Assessment (MoCA), two of the more widely used paper-and-pencil cognitive assessments, SLUMS has been[15,27-29], and these studies demonstrated either the equivalence or the superiority of SLUMS. It is therefore reasonable to infer that Cognivue® is likely at least equivalent to these commonly used tools in terms of sensitivity, specificity, and psychometric validity. Cognivue® may also be less likely to underdiagnose (as reported with the MMSE)[28] or overdiagnose mild cases of impairment (10% with the MMSE, 25% with the MoCA)[22].

Longitudinal follow-up studies are underway to assess the ability of Cognivue® to monitor cognitive deterioration over time. Cognivue® is also being studied in other patient populations and specific disease states such as multiple sclerosis[30]. Additionally, a highly portable model of Cognivue® with a Health Insurance Portability and Accountability Act-compliant data repository is scheduled to be launched in mid-2019.

In conclusion, Cognivue® scores ≤ 50 and ≥ 75 were consistent with conservative standards for impaired and unimpaired, respectively, in the cut-off study. When these cut-offs were applied in the clinical trial also reported here, Cognivue® showed good validity and psychometric properties, and superior test-retest reliability compared with SLUMS. As a result, Cognivue® received FDA de novo 510(k) clearance as an adjunctive tool for evaluating cognition through perceptual and memory function in individuals 55-95 years of age[31]. It is not intended to be used as a diagnostic tool.

These studies demonstrated the safety and efficacy of Cognivue®, which is the first FDA-cleared test for the automated computerized assessment of cognitive functioning. This represents an improved approach to help identify cognitive impairment early in patients at risk for cognitive decline.

The assessment of declining cognitive function due to age or dementia is often impeded by multiple factors such as testing bias, cost, poor measurement efficacy, test inconsistency, and test length. Some tools also specifically require administration by a clinician, adding further constraint to routine testing in clinical practice. Prior research on the neural mechanisms of functional impairment in memory, aging, and dementia has led to the development of a novel computerized testing method.

There is a need for a reliable, easy-to-use, novel method for the early identification, testing, and monitoring of cognitive impairment in at-risk patient populations. Cognivue® is a safe and effective automated method for identifying and tracking cognitive impairment in a more convenient and efficient manner than traditional question-and-answer, paper-and-pencil testing.

The main objective of the first clinical study was to establish cut-off values for Cognivue® (i.e., impaired, intermediate, and unimpaired cognitive function) relative to the St. Louis University Mental Status (SLUMS) examination. The main objectives of the second study were to clinically validate the agreement between Cognivue® and SLUMS classifications, assess the retest reliability of Cognivue®, and assess the psychometric properties of Cognivue®.

Participants in the first study completed both the SLUMS and Cognivue® tests. Optimization methods used to determine cut-off values included a minimization algorithm to optimize the negative percent agreement and positive percent agreement between test scores in the objective function and a minimization algorithm with two measures in the objective function (inaccuracy, error bias). Participants in the second study also completed both the SLUMS and Cognivue® tests as well as other traditional neuropsychological tests. Regression analyses of agreement and retest reliability, as well as rank linear regression and factor analysis for psychometric comparisons were conducted, and agreement analysis of the impairment classifications derived from the first study was also performed.

It was found that Cognivue® scores ≤ 50 would avoid misclassification of an impaired person while providing a conservative standard consistent with cognitive impairment, and that scores ≥ 75 would avoid misclassification of an unimpaired person while providing a conservative cut-off consistent with no impairment. In the second study, Cognivue® demonstrated good validity and psychometric properties, as well as superior test-retest reliability compared to the SLUMS.

With its unique adaptive psychophysics technology, Cognivue® provides a computerized tool for the automated assessment of cognitive functioning that is free from many of the limitations of traditional paper-and-pencil methods, such as limited sensitivity, subjectivity, and inconsistent test-retest reliability, as well as educational, language, gender, and cultural biases. Earlier implementation of management strategies in patients whose cognitive impairment is identified early in its course may improve prognosis and decrease morbidity. Because Cognivue® is self-administered, it provides clinicians with a safe, effective, and time-saving method to assist in the assessment and monitoring of cognitive impairment in their patients.

Trials assessing the utility of Cognivue® in other, specific patient populations are currently in progress. Additionally, the ability of Cognivue® to monitor the deterioration of cognitive function over time is currently being assessed in longitudinal studies.

Manuscript source: Unsolicited manuscript

Specialty type: Psychiatry

Country of origin: United States

Peer-review report classification

Grade A (Excellent): 0

Grade B (Very good): B, B

Grade C (Good): 0

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Chakrabarti S, Yakoot M S-Editor: Yan JP L-Editor: A E-Editor: Li X

| 1. | Zadikoff C, Fox SH, Tang-Wai DF, Thomsen T, de Bie RM, Wadia P, Miyasaki J, Duff-Canning S, Lang AE, Marras C. A comparison of the mini mental state exam to the Montreal cognitive assessment in identifying cognitive deficits in Parkinson's disease. Mov Disord. 2008;23:297-299. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 207] [Cited by in RCA: 225] [Article Influence: 13.2] [Reference Citation Analysis (0)] |

| 2. | Athilingam P, Visovsky C, Elliott AF, Rogal PJ. Cognitive screening in persons with chronic diseases in primary care: challenges and recommendations for practice. Am J Alzheimers Dis Other Demen. 2015;30:547-558. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 15] [Cited by in RCA: 17] [Article Influence: 1.7] [Reference Citation Analysis (0)] |

| 3. | Connor DJ, Jenkins CW, Carpenter D, Crean R, Perera P. Detection of Rater Errors on Cognitive Instruments in a Clinical Trial Setting. J Prev Alzheimers Dis. 2018;5:188-196. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 1] [Cited by in RCA: 1] [Article Influence: 0.1] [Reference Citation Analysis (0)] |

| 5. | Ranson JM, Kuźma E, Hamilton W, Muniz-Terrera G, Langa KM, Llewellyn DJ. Predictors of dementia misclassification when using brief cognitive assessments. Neurol Clin Pract. 2019;9:109-117. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 34] [Cited by in RCA: 43] [Article Influence: 7.2] [Reference Citation Analysis (0)] |

| 6. | Cordell CB, Borson S, Boustani M, Chodosh J, Reuben D, Verghese J, Thies W, Fried LB; Medicare Detection of Cognitive Impairment Workgroup. Alzheimer's Association recommendations for operationalizing the detection of cognitive impairment during the Medicare Annual Wellness Visit in a primary care setting. Alzheimers Dement. 2013;9:141-150. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 300] [Cited by in RCA: 354] [Article Influence: 27.2] [Reference Citation Analysis (0)] |

| 7. | Collie A, Darby D, Maruff P. Computerised cognitive assessment of athletes with sports related head injury. Br J Sports Med. 2001;35:297-302. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 145] [Cited by in RCA: 141] [Article Influence: 5.9] [Reference Citation Analysis (0)] |

| 8. | Nieuwenhuis-Mark RE. The death knoll for the MMSE: has it outlived its purpose? J Geriatr Psychiatry Neurol. 2010;23:151-157. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 95] [Cited by in RCA: 115] [Article Influence: 7.7] [Reference Citation Analysis (0)] |

| 9. | Sheehan B. Assessment scales in dementia. Ther Adv Neurol Disord. 2012;5:349-358. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 179] [Cited by in RCA: 200] [Article Influence: 16.7] [Reference Citation Analysis (0)] |

| 10. | Bradford A, Kunik ME, Schulz P, Williams SP, Singh H. Missed and delayed diagnosis of dementia in primary care: prevalence and contributing factors. Alzheimer Dis Assoc Disord. 2009;23:306-314. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 725] [Cited by in RCA: 691] [Article Influence: 43.2] [Reference Citation Analysis (0)] |

| 11. | Fernandez R, Duffy CJ. Early Alzheimer's disease blocks responses to accelerating self-movement. Neurobiol Aging. 2012;33:2551-2560. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 11] [Cited by in RCA: 11] [Article Influence: 0.8] [Reference Citation Analysis (0)] |

| 12. | Velarde C, Perelstein E, Ressmann W, Duffy CJ. Independent deficits of visual word and motion processing in aging and early Alzheimer's disease. J Alzheimers Dis. 2012;31:613-621. |

| 13. | Kavcic V, Vaughn W, Duffy CJ. Distinct visual motion processing impairments in aging and Alzheimer's disease. Vision Res. 2011;51:386-395. |

| 14. | Mapstone M, Dickerson K, Duffy CJ. Distinct mechanisms of impairment in cognitive ageing and Alzheimer's disease. Brain. 2008;131:1618-1629. |

| 15. | Tariq SH, Tumosa N, Chibnall JT, Perry MH, Morley JE. Comparison of the Saint Louis University mental status examination and the mini-mental state examination for detecting dementia and mild neurocognitive disorder--a pilot study. Am J Geriatr Psychiatry. 2006;14:900-910. |

| 16. | Alzheimer’s Association. 2018 Alzheimer’s disease facts and figures. Alzheimers Dement. 2018;14:367-429. |

| 17. | Katz MJ, Lipton RB, Hall CB, Zimmerman ME, Sanders AE, Verghese J, Dickson DW, Derby CA. Age-specific and sex-specific prevalence and incidence of mild cognitive impairment, dementia, and Alzheimer dementia in blacks and whites: a report from the Einstein Aging Study. Alzheimer Dis Assoc Disord. 2012;26:335-343. |

| 18. | Petersen RC, Roberts RO, Knopman DS, Geda YE, Cha RH, Pankratz VS, Boeve BF, Tangalos EG, Ivnik RJ, Rocca WA. Prevalence of mild cognitive impairment is higher in men. The Mayo Clinic Study of Aging. Neurology. 2010;75:889-897. |

| 19. | Ganguli M, Chang CC, Snitz BE, Saxton JA, Vanderbilt J, Lee CW. Prevalence of mild cognitive impairment by multiple classifications: The Monongahela-Youghiogheny Healthy Aging Team (MYHAT) project. Am J Geriatr Psychiatry. 2010;18:674-683. |

| 20. | Wild K, Howieson D, Webbe F, Seelye A, Kaye J. Status of computerized cognitive testing in aging: a systematic review. Alzheimers Dement. 2008;4:428-437. |

| 21. | Boise L, Camicioli R, Morgan DL, Rose JH, Congleton L. Diagnosing dementia: perspectives of primary care physicians. Gerontologist. 1999;39:457-464. |

| 22. | Canadian Task Force on Preventive Health Care. Pottie K, Rahal R, Jaramillo A, Birtwhistle R, Thombs BD, Singh H, Gorber SC, Dunfield L, Shane A, Bacchus M, Bell N, Tonelli M. Recommendations on screening for cognitive impairment in older adults. CMAJ. 2016;188:37-46. |

| 23. | Lin JS, O'Connor E, Rossom RC, Perdue LA, Burda BU, Thompson M, Eckstrom E. Screening for cognitive impairment in older adults: An evidence update for the U.S. Preventive Services Task Force. Rockville (MD): Agency for Healthcare Research and Quality; 2013. |

| 24. | World Health Organization. Risk reduction of cognitive decline and dementia: WHO guidelines. Geneva: World Health Organization; 2019. Available from: https://apps.who.int/iris/bitstream/handle/10665/312180/9789241550543-eng.pdf. |

| 25. | Borson S, Scanlan JM, Watanabe J, Tu SP, Lessig M. Improving identification of cognitive impairment in primary care. Int J Geriatr Psychiatry. 2006;21:349-355. |

| 26. | Perry W, Lacritz L, Roebuck-Spencer T, Silver C, Denney RL, Meyers J, McConnel CE, Pliskin N, Adler D, Alban C, Bondi M, Braun M, Cagigas X, Daven M, Drozdick L, Foster NL, Hwang U, Ivey L, Iverson G, Kramer J, Lantz M, Latts L, Ling SM, Maria Lopez A, Malone M, Martin-Plank L, Maslow K, Melady D, Messer M, Most R, Norris MP, Shafer D, Silverberg N, Thomas CM, Thornhill L, Tsai J, Vakharia N, Waters M, Golden T. Population Health Solutions for Assessing Cognitive Impairment in Geriatric Patients. Innov Aging. 2018;2:igy025. |

| 27. | Cao L, Hai S, Lin X, Shu D, Wang S, Yue J, Liu G, Dong B. Comparison of the Saint Louis University Mental Status Examination, the Mini-Mental State Examination, and the Montreal Cognitive Assessment in detection of cognitive impairment in Chinese elderly from the geriatric department. J Am Med Dir Assoc. 2012;13:626-629. |

| 28. | Buckingham D, Mackor K, Miller R, Pullam N, Molloy K. Comparing the cognitive screening tools: MMSE and SLUMS. PURE Insights. 2013;2:3. |

| 29. | Feliciano L, Horning SM, Klebe KJ, Anderson SL, Cornwell RE, Davis HP. Utility of the SLUMS as a cognitive screening tool among a nonveteran sample of older adults. Am J Geriatr Psychiatry. 2013;21:623-630. |

| 30. | Smith AD, Duffy C, Goodman AD. Novel computer-based testing shows multi-domain cognitive dysfunction in patients with multiple sclerosis. Mult Scler J Exp Transl Clin. 2018;4:2055217318767458. |

| 31. | US Food and Drug Administration. De Novo Classification Request For Cognivue. De Novo Summary (DEN130033). [accessed December 22, 2018]. Available from: www.accessdata.fda.gov/cdrh_docs/reviews/DEN130033.pdf. |