Published online Feb 18, 2022. doi: 10.5312/wjo.v13.i2.201

Peer-review started: May 17, 2021

First decision: October 16, 2021

Revised: November 26, 2021

Accepted: January 11, 2022

Article in press: January 11, 2022

Published online: February 18, 2022

Processing time: 276 Days and 11.4 Hours

Assessing academic productivity allows academic departments to identify the strengths of their scholarly contribution and provides an opportunity to evaluate areas for improvement.

To provide objective benchmarks for departments seeking to enhance academic productivity and to identify departments that have improved substantially in the recent past.

Our study retrospectively analyzed a cohort of orthopaedic faculty at United States-based academic orthopaedic programs. A total of 5502 full-time orthopaedic faculty members representing 178 programs were included in the analysis. The variables analyzed were National Institutes of Health funding (2014-2018), leadership positions in orthopaedic societies (2018), editorial board positions at top orthopaedic journals (2018), total number of publications and Hirsch index (h-index). A weighted algorithm was used to calculate a cumulative score for each academic program. This study was performed at a large United States medical school.

All 178 programs included in analysis were evaluated using the comprehensive weighted algorithm. The five institutions with the highest cumulative score, in decreasing order, were: Washington University in St. Louis, the Hospital for Special Surgery, Sidney Kimmel Medical College (SKMC) at Thomas Jefferson University, the University of California, San Francisco (UCSF) and Massachusetts General Hospital (MGH)/Brigham and Women’s/Harvard. The five institutions with the highest score per capita, in decreasing order, were: Mayo Clinic (Rochester), Washington University in St. Louis, Rush University, Virginia Commonwealth University (VCU) and MGH/Brigham and Women’s/Harvard. The five academic programs that had the largest improvement in cumulative score from 2013 to 2018, in decreasing order, were: VCU, SKMC at Thomas Jefferson University, UCSF, MGH/Brigham and Women’s/Harvard, and Brown University.

This algorithm can provide orthopaedic departments a means to assess academic productivity, monitor progress, and identify areas for improvement as they seek to expand their academic contributions to the orthopaedic community.

Core Tip: Assessing academic productivity allows academic departments to identify the strengths of their scholarly contribution and provides an opportunity to evaluate areas for improvement. By identifying measures of academic productivity for full-time faculty at academic orthopaedic programs in the United States, we established a comprehensive weighted algorithm to evaluate the scholarly achievement of each program. Furthermore, by documenting the methodology and findings of this algorithm, we give programs the opportunity to assess and monitor their productivity and to identify areas of growth as they seek to expand their academic contributions to the orthopaedic community.

- Citation: Trikha R, Olson TE, Chaudry A, Ishmael CR, Villalpando C, Chen CJ, Hori KR, Bernthal NM. Assessing the academic achievement of United States orthopaedic departments. World J Orthop 2022; 13(2): 201-211

- URL: https://www.wjgnet.com/2218-5836/full/v13/i2/201.htm

- DOI: https://dx.doi.org/10.5312/wjo.v13.i2.201

Evaluating academic orthopaedic surgery programs on the basis of their scholarly contribution is difficult. Faculty are often measured by bibliometric variables that represent their academic productivity, such as citation indices, number of publications and amount of research funding[1-3]. Our study aimed to measure the current scholarly productivity of orthopaedic departments in the United States. The result is an assessment of orthopaedic programs based on the academic contributions of their faculty. In addition to recognizing highly academic departments, our study aims to provide orthopaedic departments with a tool that can be used continually to monitor academic productivity and, thus, to identify areas for improvement.

Evaluating the academic productivity of orthopaedic surgery programs presents many difficulties. Rankings such as Doximity or U.S. News & World Report[4-7] rely partly on subjective metrics, such as national reputation and faculty satisfaction, and this often makes it difficult to measure academic achievement in a standardized way. Furthermore, these rankings do not always reflect current productivity accurately, because they include the productivity of alumni over the preceding 15 years[4]. Another difficulty is the lack of consensus on how much weight different objective bibliometric measures should carry when determining overall academic contribution.

Efforts to quantify academic achievement have gained popularity across various specialties over the past decade. Publicly available metrics such as National Institutes of Health (NIH) funding, faculty Hirsch indices (h-index) and number of publications have been used in plastic surgery, ophthalmology, dermatology, urology and a variety of other medical specialties to measure an institution’s academic prowess[8-15]. The h-index is a well-validated tool for measuring academic output and has been lauded in the orthopaedic community[16-18]. An individual has an h-index of h if h of their publications have each received at least h citations and each of their remaining publications has received no more than h citations[19]. The h-index therefore can never exceed the number of publications a faculty member has, and it reflects both the quality and the quantity of that faculty member’s publications. NIH funding has also been validated as an accurate measure of scholarly impact across different specialties[9,20].
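
A minimal Python sketch of Hirsch's definition follows, to make the computation concrete. The function name `h_index` and the citation counts are hypothetical. The study itself took h-index values from Scopus and did not compute them.

```python
from typing import Iterable


def h_index(citations: Iterable[int]) -> int:
    """Return the largest h such that h publications have at least h citations each.

    `citations` holds one citation count per publication (hypothetical input;
    the study read h-index values directly from Scopus).
    """
    ranked = sorted(citations, reverse=True)  # most-cited publication first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break  # counts only decrease from here, so h cannot grow
    return h


# Hypothetical faculty member with five publications.
print(h_index([10, 8, 5, 4, 3]))  # 4
# Two very highly cited papers lift h no higher than 2.
print(h_index([250, 120, 2, 1]))  # 2
```

The second call shows why the h-index under-represents authors who have a small number of highly cited studies. The loop also stops at the length of the list, so h can never exceed the number of publications.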

Previous studies in other specialties have used algorithms that weight metrics of academic achievement to rank programs by academic productivity[12,21,22]. Our current study uses data from 2014-2018 to provide a five-year update and enhancement of our previous analysis[23], and to continue assessing orthopaedic programs based on the academic output of their faculty. Both cumulative and per capita statistics were used. Together they highlight programs with a large volume of academic output as well as smaller programs with a high academic yield. Furthermore, to acknowledge programs that have improved their scholarly productivity, we quantified the change in cumulative score between our previous paper, which used data from 2013, and the current study, which uses data from 2014-2018. Scholarly productivity is often linked with academic promotion, the ability to attract talented faculty and other important factors[3]. Standardized methods are therefore necessary to assess the academic achievement of orthopaedic surgery programs accurately. The authors believe that a consistent and representative algorithm of program achievement can serve as a tool to monitor progress over time. Importantly, it can also guide individual programs toward target areas for enhancing overall scholarly productivity.

Programs included for analysis were identified through a search of the Accreditation Council for Graduate Medical Education website[24]. Faculty included for analysis were identified using faculty lists on individual departmental websites. An email was subsequently sent to program directors and coordinators at each institution to verify that the faculty list on the department website was accurate and up to date. To standardize faculty lists, only faculty with a full-time appointment in the respective department of orthopaedic surgery were included. This included research faculty. It excluded surgeons from other specialties, house staff, co-appointed faculty, part-time faculty and emeritus faculty, as listed on individual departmental websites. All entered data and calculations were reviewed independently by multiple authors to ensure accuracy.

Our current study uses the same bibliometrics as our prior study[23] to quantify the academic productivity of each faculty member. Some of these metrics, including total number of publications and h-index, are cumulative over a faculty member’s career. Others, such as NIH funding from 2014-2018, society leadership positions in 2018 and journal editorial board membership in 2018, provide a more current evaluation of academic productivity. Because NIH funding can fluctuate dramatically from year to year, five years of NIH funding were analyzed. The bibliometrics of all faculty members within an orthopaedic surgery department were summed. A weighted algorithm was then used to compute a score for each institution to assess its scholarly contribution. Finally, the change in cumulative score for each program from 2013 to 2018 was calculated.
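
The aggregation step described above can be sketched in Python, assuming faculty-level records are available for each department. The `FacultyRecord` type, its field names, the `department_totals` function and the sample programs are all hypothetical. Department totals here are plain sums over full-time faculty, so a department's cumulative h-index is the sum of its faculty members' individual h-indices. That is how we read the department h-index totals reported in the Results. The faculty count is also kept, because the per capita analysis needs it.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FacultyRecord:
    # Hypothetical faculty-level record; field names are illustrative only.
    institution: str
    nih_funding: float          # NIH funding, 2014-2018 (USD)
    publications: int           # career total (Scopus)
    h_index: int                # individual h-index (Scopus)
    leadership_positions: int   # society leadership positions in 2018
    editorial_positions: int    # editorial board positions in 2018


METRICS = ("nih_funding", "publications", "h_index",
           "leadership_positions", "editorial_positions")


def department_totals(records):
    """Sum each metric over a department's full-time faculty and count the faculty."""
    totals = defaultdict(lambda: dict.fromkeys(METRICS, 0))  # fresh dict per institution
    faculty_counts = defaultdict(int)
    for r in records:
        for metric in METRICS:
            totals[r.institution][metric] += getattr(r, metric)
        faculty_counts[r.institution] += 1
    return dict(totals), dict(faculty_counts)


records = [
    FacultyRecord("Program A", 1_200_000, 150, 30, 1, 0),
    FacultyRecord("Program A", 0, 40, 12, 0, 1),
    FacultyRecord("Program B", 500_000, 90, 20, 0, 2),
]
totals, counts = department_totals(records)
print(totals["Program A"]["h_index"], counts["Program A"])  # 42 2
```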

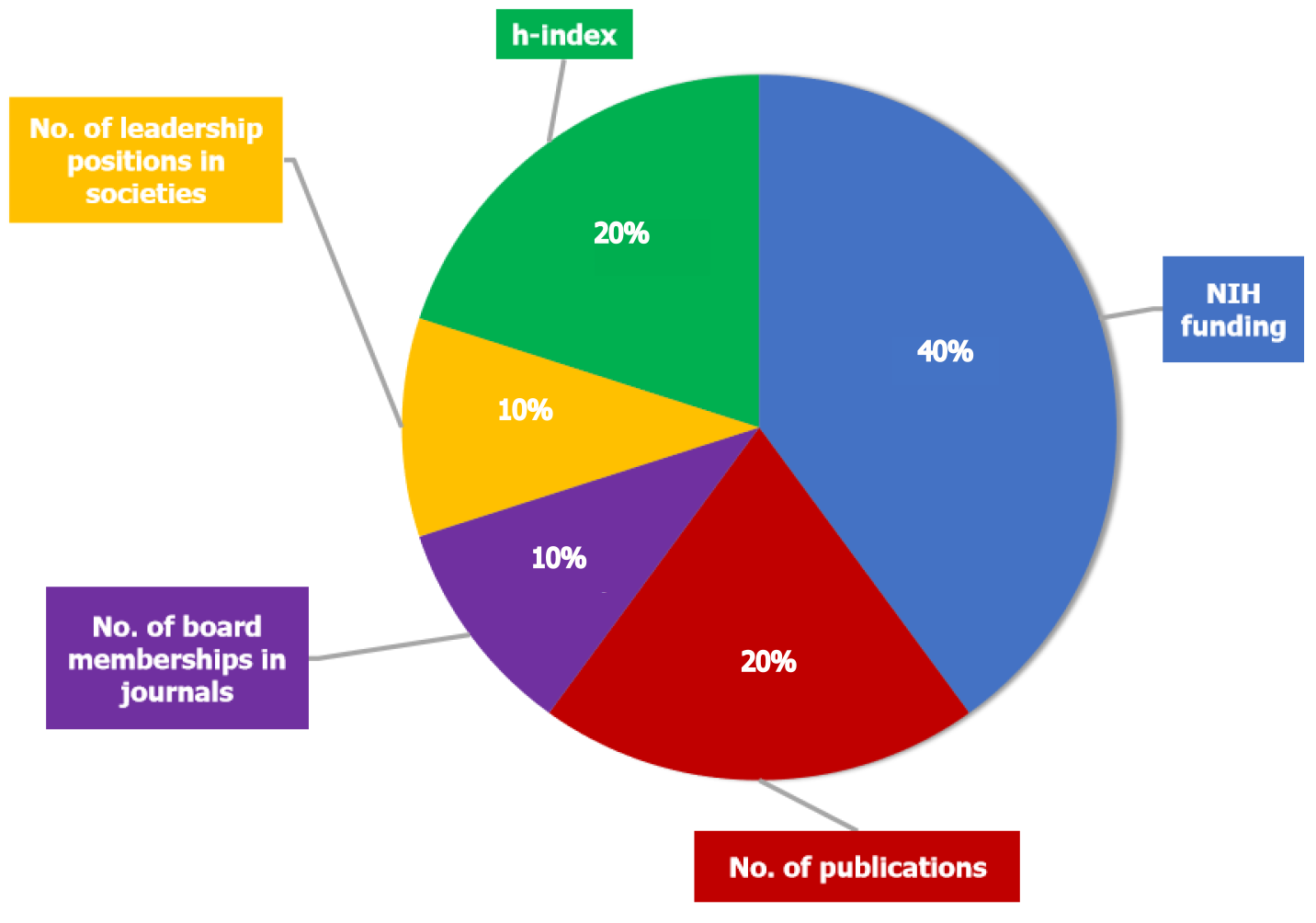

The weighted score was calculated as follows. For each of the five categories, each academic program was first assigned a score from zero to one. This score was the value of the outcome measure attained by the institution divided by the value attained by the highest-achieving institution, so the highest-achieving program in each category received a one. The category-specific score was then multiplied by a weighting factor: 2.0 for NIH funding, 1.0 for number of publications and h-index, and 0.5 for society leadership and journal editorial board membership. Because the weighting factors sum to 5.0, NIH funding accounted for 40% of the maximum total score. Number of publications and h-index each accounted for 20%, and society leadership and journal editorial board membership each accounted for 10% (Figure 1). For example, faculty at the University of Iowa accounted for 2789 total publications. This number was divided by the highest number of publications achieved by any institution (13494, at the Hospital for Special Surgery, Cornell) and multiplied by 1.0, the weighting factor for number of publications. The resulting score for the University of Iowa in the “number of publications” category was 0.207. For the per capita measurement, the same computation was repeated after dividing each institution’s bibliometric totals by its number of full-time faculty.
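
The scoring rule above can be written as a short Python sketch. It assumes that department totals have already been tallied. The function names `category_points` and `weighted_scores`, the dictionary layout and the two hypothetical programs in the demo are ours. The weighting factors and the University of Iowa arithmetic come from the text. This illustrates the described calculation and is not the authors' code.

```python
# Weighting factors from the text. They sum to 5.0, so NIH funding carries 40%
# of the maximum score, publications and h-index 20% each, and society
# leadership and editorial board positions 10% each.
WEIGHTS = {
    "nih_funding": 2.0,
    "publications": 1.0,
    "h_index": 1.0,
    "leadership_positions": 0.5,
    "editorial_positions": 0.5,
}


def category_points(value, best, weight):
    """Scale one category to [0, 1] against the top institution, then weight it."""
    return weight * value / best if best > 0 else 0.0


def weighted_scores(totals, faculty_counts=None):
    """Return {institution: {metric: points, ..., "total": sum of points}}.

    `totals` maps institution -> {metric: department total}. If `faculty_counts`
    (institution -> number of full-time faculty) is given, every total is first
    divided by the faculty count, giving the per capita variant.
    """
    values = {}
    for inst, metrics in totals.items():
        divisor = faculty_counts[inst] if faculty_counts else 1
        values[inst] = {m: metrics[m] / divisor for m in WEIGHTS}

    scores = {inst: {} for inst in values}
    for metric, weight in WEIGHTS.items():
        # The highest-achieving institution in this category scores 1 before weighting.
        best = max(v[metric] for v in values.values())
        for inst, v in values.items():
            scores[inst][metric] = category_points(v[metric], best, weight)
    for s in scores.values():
        s["total"] = sum(s[m] for m in WEIGHTS)
    return scores


# Worked example from the text: University of Iowa, number of publications.
print(round(category_points(2789, 13494, WEIGHTS["publications"]), 3))  # 0.207

# Two hypothetical programs, to show the cumulative and per capita variants.
totals = {
    "Program A": {"nih_funding": 4_000_000, "publications": 600, "h_index": 120,
                  "leadership_positions": 2, "editorial_positions": 1},
    "Program B": {"nih_funding": 1_000_000, "publications": 900, "h_index": 150,
                  "leadership_positions": 0, "editorial_positions": 3},
}
faculty_counts = {"Program A": 20, "Program B": 45}

cumulative = weighted_scores(totals)
per_capita = weighted_scores(totals, faculty_counts)
print(round(cumulative["Program A"]["total"], 3))  # 4.133
print(round(per_capita["Program A"]["total"], 3))  # 4.875
```

Normalizing against the top program makes each category unit-free before weighting, so dollar amounts and publication counts can be combined. The trade-off is that every score is relative to the current leader in that category.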

The validated Scopus database was used to determine the total number of publications and h-index of each faculty member[25,26]. Scopus was chosen because it includes only peer-reviewed literature and has the broadest coverage of any database[25]. Our count of total publications therefore includes all types of publications from an individual’s career. The NIH Research Portfolio Online Reporting Tool was used to obtain NIH funding for 2014-2018[27].

To remain consistent with our prior study, our analysis included the two largest general orthopaedic societies in the United States, the Orthopaedic Research Society (ORS) and the American Academy of Orthopaedic Surgeons (AAOS). It also included the preeminent society of each orthopaedic subspecialty. These societies were chosen to give equal representation to all orthopaedic subspecialties. To decrease inherent bias, no nomination-dependent societies were included. Faculty on the editorial boards of American orthopaedic journals with an impact factor over 2.5 were included in the analysis. These journals comprised all journals from our previous study as well as the Orthopaedic Journal of Sports Medicine and Clinical Research on Foot and Ankle.

A total of 181 academic orthopaedic programs with an accredited residency program were identified. Three institutions were excluded because their departmental websites did not list faculty members and limited contacts were available to obtain this information. This left 178 programs for analysis. Of these, 36 programs responded to the authors’ email, for a response rate of 20.2%.

Cumulative NIH grant funding from 2014 to 2018 ranged from no NIH funding to $31.9 million [University of California, San Francisco (UCSF)]. Washington University in St. Louis ($29.3 million), Virginia Commonwealth University (VCU) ($28.6 million), University of Rochester ($23.0 million), Brown University ($22.1 million) and Sidney Kimmel Medical College (SKMC) at Thomas Jefferson University ($18.2 million) had the next five highest levels of NIH funding during this period (Table 1).

| Institution | NIH funding, 2014-2018 | Points (weighted) |
| --- | ---: | ---: |
| University of California, San Francisco | $31,928,483 | 2 |
| Washington University in St. Louis | $29,320,191 | 1.836616603 |
| Virginia Commonwealth University | $28,619,478 | 1.792723945 |
| University of Rochester | $23,035,238 | 1.442927182 |
| Brown University | $22,064,165 | 1.382099175 |
| SKMC at Thomas Jefferson University | $18,237,937 | 1.142424274 |
| University of Pennsylvania | $17,252,775 | 1.080713731 |
| Mayo Clinic (Rochester) | $16,801,697 | 1.052458208 |
| University of Utah | $16,762,167 | 1.049982049 |
| Yale University | $16,184,261 | 1.01378202 |
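
The weighted points in Table 1 can be reproduced from the funding column alone, which is a useful check on the formula described in the Methods. Each value is 2.0 times the program's funding divided by the highest funding, which belongs to UCSF. The short Python sketch below does this. The variable names are ours, and the numbers are copied from Table 1.

```python
# NIH funding, 2014-2018 (USD), and weighted points as published in Table 1.
TABLE_1 = [
    ("University of California, San Francisco", 31_928_483, 2.0),
    ("Washington University in St. Louis", 29_320_191, 1.836616603),
    ("Virginia Commonwealth University", 28_619_478, 1.792723945),
    ("University of Rochester", 23_035_238, 1.442927182),
    ("Brown University", 22_064_165, 1.382099175),
    ("SKMC at Thomas Jefferson University", 18_237_937, 1.142424274),
    ("University of Pennsylvania", 17_252_775, 1.080713731),
    ("Mayo Clinic (Rochester)", 16_801_697, 1.052458208),
    ("University of Utah", 16_762_167, 1.049982049),
    ("Yale University", 16_184_261, 1.01378202),
]

NIH_WEIGHT = 2.0  # weighting factor for the NIH funding category

# UCSF had the highest NIH funding of all programs, so the maximum over these
# rows equals the denominator of the full 178-program calculation.
best = max(funding for _, funding, _ in TABLE_1)

recomputed = {name: NIH_WEIGHT * funding / best for name, funding, _ in TABLE_1}
max_abs_diff = max(abs(recomputed[name] - published) for name, _, published in TABLE_1)

for name, _, _ in TABLE_1:
    print(f"{name}: {recomputed[name]:.3f}")
print(f"largest difference from Table 1: {max_abs_diff:.1e}")
```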

The total number of publications by full-time faculty of an orthopaedic department ranged from 12 to 13494, with the highest count at the Hospital for Special Surgery (Cornell). After the Hospital for Special Surgery, the five institutions with the most publications were SKMC at Thomas Jefferson University (9259), Mayo Clinic (Rochester) (8735), Washington University in St. Louis (6616), Massachusetts General Hospital (MGH)/Brigham and Women's/Harvard (6421) and Rush University (5661) (Table 2).

| Institution | Publications | Points (weighted) |
| --- | ---: | ---: |
| Hospital for Special Surgery (Cornell) | 13494 | 1 |
| SKMC at Thomas Jefferson University | 9259 | 0.68615681 |
| Mayo Clinic (Rochester) | 8735 | 0.64732474 |
| Washington University in St. Louis | 6616 | 0.49029198 |
| MGH/Brigham and Women's/Harvard | 6421 | 0.47584111 |
| Rush University | 5661 | 0.41951979 |
| New York University | 4882 | 0.36179043 |
| University of Pennsylvania | 4603 | 0.34111457 |
| University of Pittsburgh | 4407 | 0.3265896 |
| Stanford University | 3903 | 0.28923966 |

The Hospital for Special Surgery had the highest cumulative h-index (the sum of its faculty members’ h-indices), at 3318. It was followed by SKMC at Thomas Jefferson University, with a cumulative h-index of 1988 (Table 3).

| Institution | Cumulative h-index | Points (weighted) |
| --- | ---: | ---: |
| Hospital for Special Surgery (Cornell) | 3318 | 1 |
| SKMC at Thomas Jefferson University | 1988 | 0.59915612 |
| Washington University in St. Louis | 1680 | 0.50632911 |
| Mayo Clinic (Rochester) | 1627 | 0.49035564 |
| MGH/Brigham and Women's/Harvard | 1454 | 0.43821579 |
| University of California, San Francisco | 1178 | 0.35503315 |
| University of Pittsburgh | 1126 | 0.33936106 |
| New York University | 1109 | 0.33423749 |
| University of California, Los Angeles | 1101 | 0.3318264 |
| Rush University | 1078 | 0.32489451 |

Fifty-two programs, representing 29.2% of the 178 programs evaluated, had faculty members holding at least one leadership position in orthopaedic surgery societies in the United States. Full-time faculty at MGH/Brigham and Women’s/Harvard held the most leadership positions, with seven, followed by Duke University with six. Faculty at each of the Hospital for Special Surgery, Johns Hopkins University, Mayo Clinic (Rochester), Rush University and SKMC at Thomas Jefferson University held four leadership positions (Table 4).

| Institution | Society leadership positions (2018) | Points (weighted) |
| --- | ---: | ---: |
| MGH/Brigham and Women's/Harvard | 7 | 0.500 |
| Duke University | 6 | 0.429 |
| Hospital for Special Surgery (Cornell) | 4 | 0.286 |
| Johns Hopkins University | 4 | 0.286 |
| Mayo Clinic (Rochester) | 4 | 0.286 |
| Rush University | 4 | 0.286 |
| SKMC at Thomas Jefferson University | 4 | 0.286 |
| University of North Carolina | 3 | 0.214 |
| Cleveland Clinic | 3 | 0.214 |
| Washington University in St. Louis | 3 | 0.214 |
| Yale University | 3 | 0.214 |

Full-time faculty at the Hospital for Special Surgery held the most editorial board positions at top orthopaedic and subspecialty journals with 20 positions, followed by MGH/Brigham and Women’s/Harvard with 19 positions and Washington University in St. Louis with 18 editorial board positions (Table 5).

| Institution | Editorial board positions (2018) | Points (weighted) |
| --- | ---: | ---: |
| Hospital for Special Surgery (Cornell) | 20 | 0.5 |
| MGH/Brigham and Women's/Harvard | 19 | 0.475 |
| Washington University in St. Louis | 18 | 0.45 |
| SKMC at Thomas Jefferson University | 16 | 0.4 |
| University of Pittsburgh | 15 | 0.375 |
| Johns Hopkins University | 11 | 0.275 |
| Columbia University | 11 | 0.275 |
| Stanford University | 11 | 0.275 |
| University of Michigan | 11 | 0.275 |

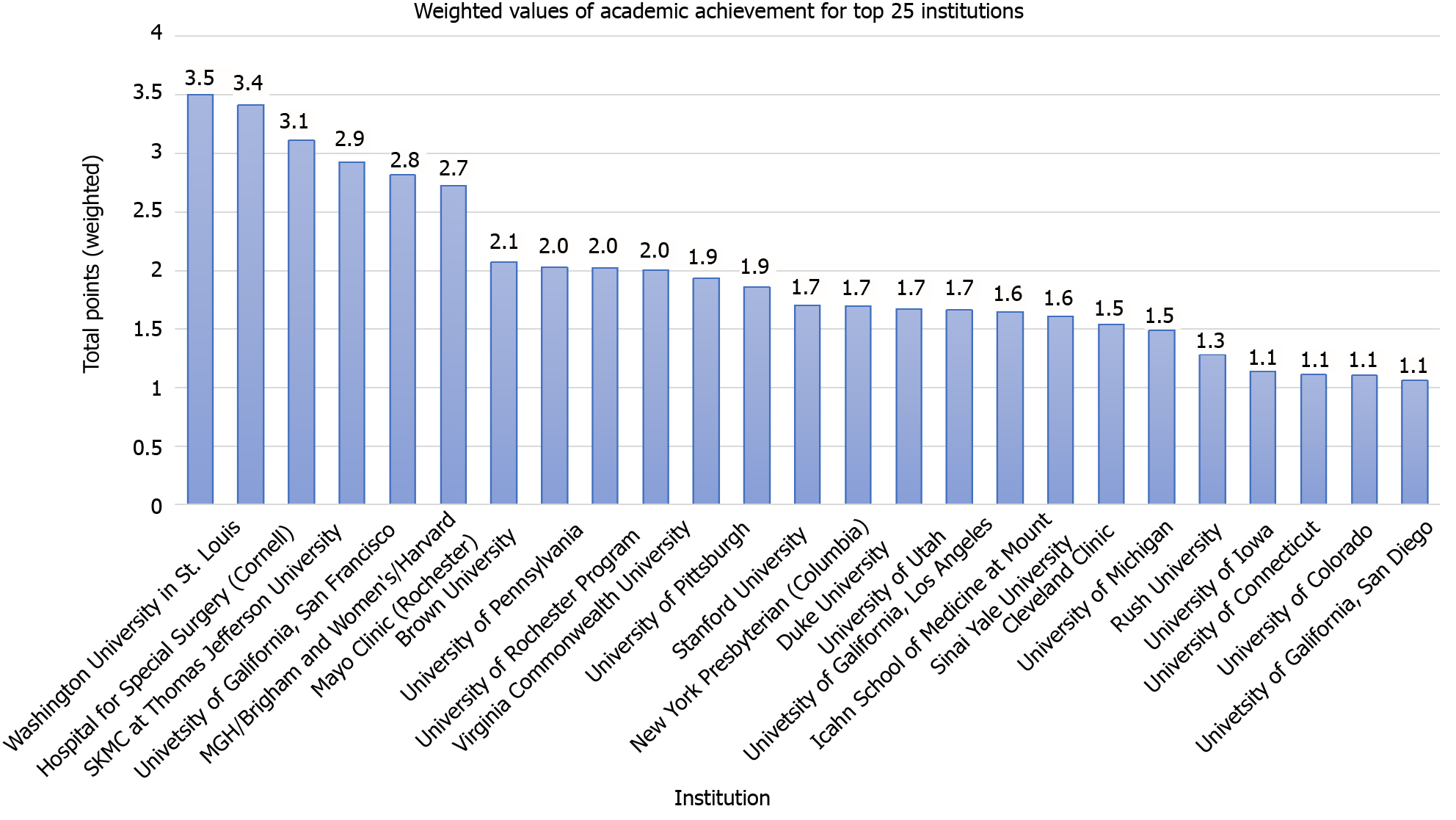

All 178 programs were evaluated using the comprehensive weighted algorithm. Washington University in St. Louis had the highest cumulative score and was therefore, by this algorithm, the most academically productive orthopaedic surgery program in the United States. The next five most academically productive orthopaedic programs were the Hospital for Special Surgery, SKMC at Thomas Jefferson University, UCSF, MGH/Brigham and Women’s/Harvard and Mayo Clinic (Rochester) (Figure 2).

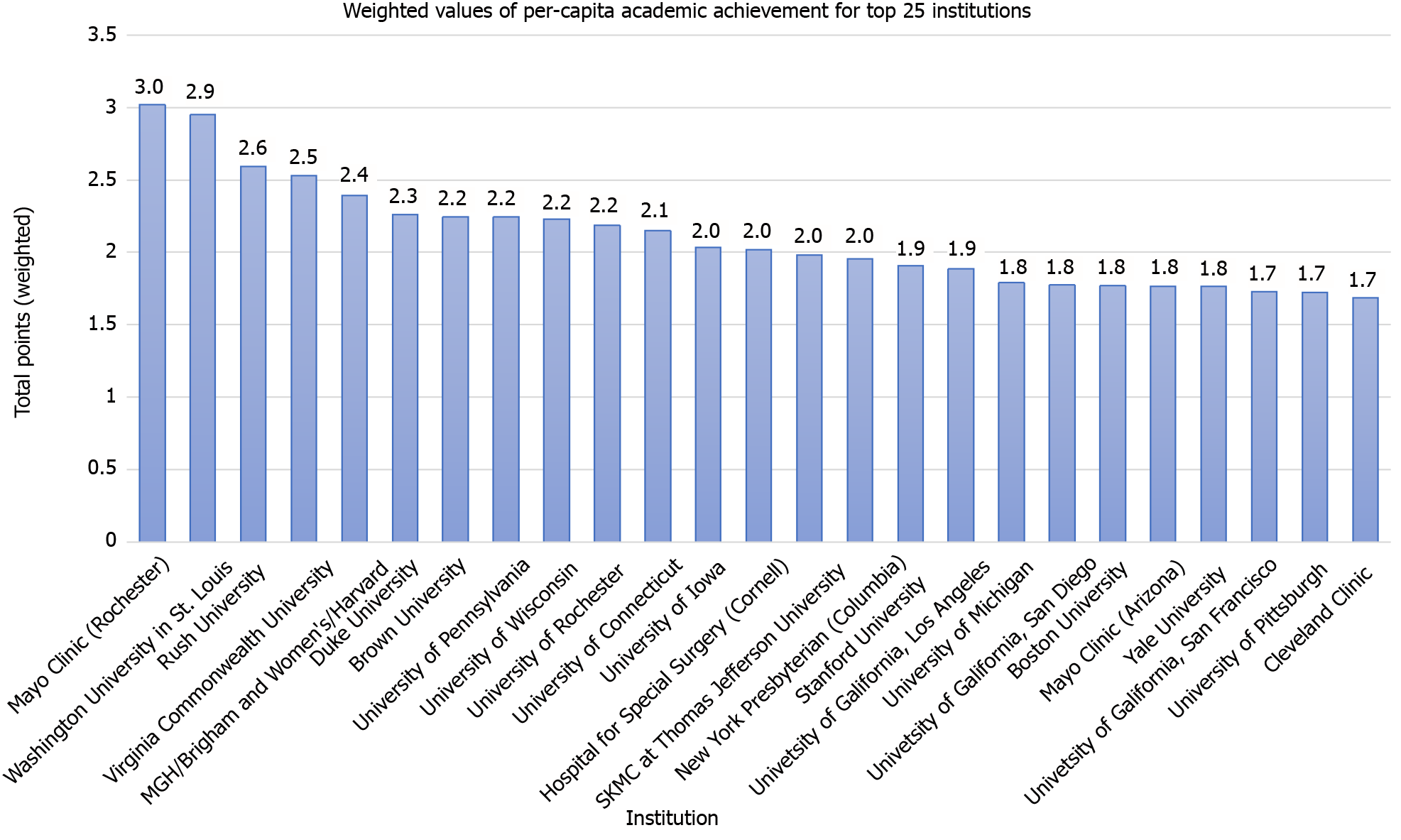

Based on per capita measurements of academic achievement, which account for the number of full-time faculty in each program, the most academically productive orthopaedic surgery programs were Mayo Clinic (Rochester), Washington University in St. Louis, Rush University, VCU, MGH/Brigham and Women’s/Harvard and Duke University (Figure 3).

VCU had the largest improvement in cumulative score since 2013, with an increase of 1.62 points. SKMC at Thomas Jefferson University (1.40), UCSF (1.39), MGH/Brigham and Women’s/Harvard (1.31), Brown University (1.23) and Icahn School of Medicine at Mount Sinai (0.92) were the next five institutions with the largest improvement in cumulative score since 2013 (Table 6).

| Institution | Change in cumulative score from 2013 (points) |
| --- | ---: |
| Virginia Commonwealth University | 1.62 |
| SKMC at Thomas Jefferson University | 1.40 |
| University of California, San Francisco | 1.39 |
| MGH/Brigham and Women's/Harvard | 1.31 |
| Brown University | 1.23 |
| Icahn School of Medicine at Mount Sinai | 0.92 |
| University of Utah | 0.90 |
| Hospital for Special Surgery (Cornell) | 0.86 |
| Cleveland Clinic Foundation Program | 0.83 |
| Columbia University | 0.76 |

This study aims to assess the scholarly contribution of orthopaedic departments using objective bibliometrics from 2014 to 2018. With so many metrics available to assess academic achievement, this is admittedly both difficult and controversial. In light of the financial, reputational and academic pressures surrounding academic productivity, our goal was to (1) Acknowledge academic institutions for their scientific contribution; (2) Allow academic departments to communicate best practices to one another; and (3) Provide a method to longitudinally monitor academic improvement to facilitate a discussion as to the definition of academic success.

It is imperative to consider that the mission of orthopaedic programs and faculty is not always rooted in academic achievement. It may instead be based on operative and clinical capability, outreach to underserved populations, teaching and mentorship, and/or technological innovation. Undoubtedly, metrics other than academic productivity define a program’s “success.” Although many of the results of this study are presented numerically, the findings are not intended for comparing programs against one another. The purpose of this study was not to “rank” orthopaedic departments. Rather, it was to establish a tool that programs may use to assess their own academic productivity against the baseline values established in this study. The conclusions reached in this study pertain only to academic productivity as measured by the specific bibliometrics analyzed. Additionally, departments inherently differ in size and in the maturity of their research infrastructure. Nonetheless, in a culture that is constantly interested in evaluations, analyzing academic productivity with objective metrics remains an important means of appraising orthopaedic departments.

There are several limitations of this study to consider. One important limitation is the subjective manner in which the weighted algorithm was formulated. The authors believe that our previous study[23] did not place enough emphasis on the contribution of basic science research to academic productivity. Other sources of basic science funding exist, such as the Department of Defense and the Orthopaedic Research and Education Foundation, but the NIH is the largest public funder of biomedical research worldwide[27]. Because basic science is a large portion of the NIH portfolio[27], NIH funding was given additional weight (40% of the cumulative score) relative to the other bibliometrics included. The distribution of points in our current study also differs slightly from that of our previous paper, so our calculation of score improvement from 2013 to 2018 is subject to limitations. Any choice of variables for a weighted algorithm involves an element of subjectivity, and the authors accept these limitations. The authors also acknowledge that it may be difficult to identify part-time, co-appointed or emeritus faculty based solely on departmental websites. Faculty lists also undoubtedly changed between 2013 and 2018. Moreover, universities and academic centers have different criteria for “joint appointments.” For this reason, and to decrease inherent bias, part-time and co-appointed faculty were not included. All 178 programs were also contacted in an attempt to confirm faculty lists.

Further limitations of this study lie in the bibliometrics used. The h-index has been validated both within and outside the orthopaedic community[16-18]; however, it is not without criticism. It does not proportionally reflect the impact of authors who have published a small number of highly cited studies. Nor does it proportionally reflect the impact of authors who have published a large number of rarely cited studies. Neither the h-index nor the total number of publications takes into account the order in which authors are listed, and therefore neither reflects the contribution each author made[28,29]. Modifications of the h-index have been proposed[28-30]. However, until they are widely accepted and publicly available, the authors believe that the h-index remains the best metric. Furthermore, the authors only included journal editorial board members and leaders of orthopaedic academic societies for the 2018 calendar year, because most journals and societies do not make these data publicly available for prior years. Neither metric could therefore be assessed for earlier years. To minimize this limitation, editorial board membership and society leadership each accounted for only 10% of the overall score. The authors believe that a snapshot of recent academic productivity is important when evaluating recent academic achievement. Even so, it is imperative to understand how the availability of prior data would affect these results.

Based on this algorithm, Washington University in St. Louis, the Hospital for Special Surgery, SKMC at Thomas Jefferson University, UCSF and MGH/Brigham and Women’s/Harvard are currently the five most cumulatively academically productive orthopaedic surgery programs. Mayo Clinic (Rochester), Washington University in St. Louis, Rush University, VCU and MGH/Brigham and Women’s/Harvard are currently the five most academically productive orthopaedic surgery programs per capita. The five academic programs that had the largest improvement in cumulative score from 2013 to 2018 were VCU, SKMC at Thomas Jefferson University, UCSF, MGH/Brigham and Women’s/Harvard and Brown University.

This algorithm is easily reproducible. It provides a metric that departments can use to track their academic productivity over time and to identify areas for improvement. These bibliometrics can be updated in upcoming years as a measure of changing scholarly contribution. Programs that have shown dramatic improvement in scholarly contribution since our 2013 study can be seen as model programs. Programs striving for similar improvement could look to them as roadmaps, which opens an avenue for communication. Furthermore, factors affecting academic promotion are often difficult to assess, and this standardized algorithm may, importantly, aid academic medical centers in making promotion decisions. This is not a list of the “best” orthopaedic surgery institutions, because clinical care metrics were not included in the analysis. This analysis did not attempt to account for the quality of clinical care or of the clinical education provided to medical students, residents or fellows. The authors therefore reiterate that this algorithm was not used to rank orthopaedic departments.

Orthopaedic surgery faculty are often measured by bibliometric variables that represent their academic productivity such as citation indices, number of publications and amount of research funding.


To provide objective benchmarks for departments seeking to enhance academic productivity and to identify departments that have improved in the recent past.

Our study retrospectively analyzed a cohort of orthopaedic faculty at United States-based academic orthopaedic programs. Variables included for analysis were National Institutes of Health funding (2014-2018), leadership positions in orthopaedic societies (2018), editorial board positions of top orthopaedic journals (2018), total number of publications and Hirsch-index. A weighted algorithm was used to calculate a cumulative score for each academic program.

The five institutions with the highest cumulative score, in decreasing order, were: Washington University in St. Louis, the Hospital for Special Surgery, Sidney Kimmel Medical College (SKMC) at Thomas Jefferson University, the University of California, San Francisco (UCSF) and Massachusetts General Hospital (MGH)/Brigham and Women’s/Harvard. The five institutions with the highest score per capita, in decreasing order, were: Mayo Clinic (Rochester), Washington University in St. Louis, Rush University, Virginia Commonwealth University (VCU) and MGH/Brigham and Women’s/Harvard. The five academic programs that had the largest improvement in cumulative score from 2013 to 2018, in decreasing order, were: VCU, SKMC at Thomas Jefferson University, UCSF, MGH/Brigham and Women’s/Harvard, and Brown University.


The authors would like to reiterate that this is in no way a ranking system as there are many unique challenges that institutions face. We hope that this provides a tool that programs may use to assess and improve their own academic productivity, while simultaneously providing an opportunity to praise the growth and achievement of institutions on a cumulative as well as per capita basis.

Provenance and peer review: Unsolicited article; Externally peer reviewed.

Peer-review model: Single blind

Specialty type: Orthopedics

Country/Territory of origin: United States

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): 0

Grade C (Good): C

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Sa-Ngasoongsong P S-Editor: Fan JR L-Editor: A P-Editor: Fan JR

| 1. | Hohmann E, Glatt V, Tetsworth K. Orthopaedic Academic Activity in the United States: Bibliometric Analysis of Publications by City and State. J Am Acad Orthop Surg Glob Res Rev. 2018;2:e027. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 2] [Cited by in RCA: 6] [Article Influence: 0.9] [Reference Citation Analysis (0)] |

| 2. | Tetsworth K, Fraser D, Glatt V, Hohmann E. Use of Google Scholar public profiles in orthopedics. J Orthop Surg (Hong Kong). 2017;25:2309499017690322. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 5] [Cited by in RCA: 6] [Article Influence: 0.8] [Reference Citation Analysis (0)] |

| 3. | Valsangkar NP, Zimmers TA, Kim BJ, Blanton C, Joshi MM, Bell TM, Nakeeb A, Dunnington GL, Koniaris LG. Determining the Drivers of Academic Success in Surgery: An Analysis of 3,850 Faculty. PLoS One. 2015;10:e0131678. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 41] [Cited by in RCA: 49] [Article Influence: 4.9] [Reference Citation Analysis (0)] |

| 4. | Residency Navigator Methodology. Doximity. [cited 16 July 2019]. Available from: https://residency.doximity.com/methodology. |

| 5. | U.S. News and World Reports. Best Orthopedic Hospitals. [cited 16 July 2019]. Available from: https://health.usnews.com/best-hospitals/rankings/orthopedics. |

| 6. | The Active Times. Unique Luxury Stargazing Domes-See the Stars and planet Mars. [cited 16 July 2019]. Available from: http://www.theactivetimes.com/best-orthopedichospitals-us. |

| 7. | The Leapfrog Group. Search Leapfrog’s Hospital and Surgery Center Ratings. [cited 16 July 2019]. Available from: http://www.leapfroggroup.org/cp. |

| 8. | Blumenthal KG, Huebner EM, Banerji A, Long AA, Gross N, Kapoor N, Blumenthal DM. Sex differences in academic rank in allergy/immunology. J Allergy Clin Immunol. 2019;144:1697-1702.e1. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 13] [Cited by in RCA: 19] [Article Influence: 3.2] [Reference Citation Analysis (0)] |

| 9. | Colaco M, Svider PF, Mauro KM, Eloy JA, Jackson-Rosario I. Is there a relationship between National Institutes of Health funding and research impact on academic urology? J Urol. 2013;190:999-1003. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 57] [Cited by in RCA: 68] [Article Influence: 5.7] [Reference Citation Analysis (0)] |

| 10. | LaRocca CJ, Wong P, Eng OS, Raoof M, Warner SG, Melstrom LG. Academic productivity in surgical oncology: Where is the bar set for those training the next generation? J Surg Oncol. 2018;118:397-402. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 4] [Cited by in RCA: 6] [Article Influence: 0.9] [Reference Citation Analysis (0)] |

| 11. | McDermott M, Gelb DJ, Wilson K, Pawloski M, Burke JF, Shelgikar AV, London ZN. Sex Differences in Academic Rank and Publication Rate at Top-Ranked US Neurology Programs. JAMA Neurol. 2018;75:956-961. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 66] [Cited by in RCA: 91] [Article Influence: 15.2] [Reference Citation Analysis (0)] |

| 12. | Namavar AA, Marczynski V, Choi YM, Wu JJ. US dermatology residency program rankings based on academic achievement. Cutis. 2018;101:146-149. [PubMed] |

| 13. | Svider PF, Lopez SA, Husain Q, Bhagat N, Eloy JA, Langer PD. The association between scholarly impact and National Institutes of Health funding in ophthalmology. Ophthalmology. 2014;121:423-428. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 57] [Cited by in RCA: 76] [Article Influence: 6.9] [Reference Citation Analysis (0)] |

| 14. | Yang HY, Rhee G, Xuan L, Silver JK, Jalal S, Khosa F. Analysis of H-index in Assessing Gender Differences in Academic Rank and Leadership in Physical Medicine and Rehabilitation in the United States and Canada. Am J Phys Med Rehabil. 2019;98:479-483. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 33] [Cited by in RCA: 45] [Article Influence: 7.5] [Reference Citation Analysis (0)] |

| 15. | Therattil PJ, Hoppe IC, Granick MS, Lee ES. Application of the h-Index in Academic Plastic Surgery. Ann Plast Surg. 2016;76:545-549. |

| 16. | Bastian S, Ippolito JA, Lopez SA, Eloy JA, Beebe KS. The Use of the h-Index in Academic Orthopaedic Surgery. J Bone Joint Surg Am. 2017;99:e14. |

| 17. | Martinez M, Lopez S, Beebe K. Gender Comparison of Scholarly Production in the Musculoskeletal Tumor Society Using the Hirsch Index. J Surg Educ. 2015;72:1172-1178. |

| 18. | Nowak JK, Lubarski K, Kowalik LM, Walkowiak J. H-index in medicine is driven by original research. Croat Med J. 2018;59:25-32. |

| 19. | Hirsch JE. An index to quantify an individual's scientific research output. Proc Natl Acad Sci U S A. 2005;102:16569-16572. |

| 20. | Svider PF, Mauro KM, Sanghvi S, Setzen M, Baredes S, Eloy JA. Is NIH funding predictive of greater research productivity and impact among academic otolaryngologists? Laryngoscope. 2013;123:118-122. |

| 21. | Benway BM, Kalidas P, Cabello JM, Bhayani SB. Does citation analysis reveal association between h-index and academic rank in urology? Urology. 2009;74:30-33. |

| 22. | Ponce FA, Lozano AM. Academic impact and rankings of American and Canadian neurosurgical departments as assessed using the h index. J Neurosurg. 2010;113:447-457. |

| 23. | Namavar AA, Loftin AH, Khahera AS, Stavrakis AI, Hegde V, Johansen D, Zoller S, Bernthal N. Evaluation of US Orthopaedic Surgery Academic Centers Based on Measurements of Academic Achievement. J Am Acad Orthop Surg. 2019;27:e118-e126. |

| 24. | Accreditation Council for Graduate Medical Education (ACGME). Public Advanced Program Search. [cited 04 April 2019]. Available from: https://apps.acgme.org/ads/Public/Programs/Search. |

| 25. | Scopus. Scopus Database. [cited 16 July 2019]. Available from: http://www.scopus.com/. |

| 26. | Walker B, Alavifard S, Roberts S, Lanes A, Ramsay T, Boet S. Inter-rater reliability of h-index scores calculated by Web of Science and Scopus for clinical epidemiology scientists. Health Info Libr J. 2016;33:140-149. |

| 27. | Gillum LA, Gouveia C, Dorsey ER, Pletcher M, Mathers CD, McCulloch CE, Johnston SC. NIH disease funding levels and burden of disease. PLoS One. 2011;6:e16837. |

| 28. | Post A, Li AY, Dai JB, Maniya AY, Haider S, Sobotka S, Choudhri TF. c-index and Subindices of the h-index: New Variants of the h-index to Account for Variations in Author Contribution. Cureus. 2018;10:e2629. |

| 29. | Thompson DF, Callen EC, Nahata MC. New indices in scholarship assessment. Am J Pharm Educ. 2009;73:111. |

| 30. | Zhang CT. The h'-index, effectively improving the h-index based on the citation distribution. PLoS One. 2013;8:e59912. |