Published online Feb 28, 2019. doi: 10.4329/wjr.v11.i2.19

Peer-review started: November 30, 2018

First decision: January 4, 2019

Revised: January 14, 2019

Accepted: January 26, 2019

Article in press: January 27, 2019

Published online: February 28, 2019

Processing time: 91 Days and 16.6 Hours

Artificial intelligence (AI) is gaining extensive attention for its excellent performance in image-recognition tasks and is increasingly applied in breast ultrasound. AI can perform quantitative assessments by recognizing imaging information automatically, making imaging diagnoses more accurate and reproducible. Breast cancer is the most commonly diagnosed cancer in women and a severe threat to women’s health, and early screening is closely related to patient prognosis. Therefore, the use of AI in breast cancer screening and detection is of great significance: it can not only save time for radiologists but also compensate for the limited experience and skills of some beginners. This article describes the basic technical knowledge of AI in breast ultrasound, including early machine learning algorithms and deep learning algorithms, and their application in the differential diagnosis of benign and malignant masses. Finally, we discuss the future perspectives of AI in breast ultrasound.

Core tip: Artificial intelligence (AI) is gaining extensive attention for its excellent performance in image-recognition tasks and is increasingly applied in breast ultrasound. In this review, we summarize the current knowledge of AI in breast ultrasound, including its technical aspects and its applications in differentiating benign from malignant breast masses. We also discuss future perspectives, such as combining AI with elastography and contrast-enhanced ultrasound, to improve the performance of AI in breast ultrasound.

- Citation: Wu GG, Zhou LQ, Xu JW, Wang JY, Wei Q, Deng YB, Cui XW, Dietrich CF. Artificial intelligence in breast ultrasound. World J Radiol 2019; 11(2): 19-26

- URL: https://www.wjgnet.com/1949-8470/full/v11/i2/19.htm

- DOI: https://dx.doi.org/10.4329/wjr.v11.i2.19

Breast cancer is the most common malignant tumor and the second leading cause of cancer death among women in the United States[1]. In recent years, the incidence and mortality of breast cancer have increased year by year[2,3]. Because mortality can be reduced by early detection and timely treatment, early and accurate diagnosis has received considerable attention. The main imaging methods for diagnosing breast cancer include X-ray mammography, ultrasound, and magnetic resonance imaging (MRI).

Ultrasound is a first-line imaging tool for breast lesion characterization because of its high availability, cost-effectiveness, acceptable diagnostic performance, and noninvasive, real-time capabilities. In addition to B-mode ultrasound, newer techniques such as color Doppler, spectral Doppler, contrast-enhanced ultrasound, and elastography can help sonographers obtain more accurate information. However, ultrasound remains operator dependent[4].

In recent years, artificial intelligence (AI), particularly deep learning (DL), has attracted extensive attention for its excellent performance in image-recognition tasks. By recognizing imaging information automatically, AI can provide quantitative assessments and thereby improve the performance of ultrasound in imaging breast lesions[5].

AI in breast ultrasound has also been combined with other novel technologies, such as ultrasound radiofrequency (RF) time series analysis[6], multimodality GPU-based computer-assisted diagnosis of breast cancer using ultrasound and digital mammography images[7], optical breast imaging[8,9], quantitative transmission (QT) ultrasound tomography for breast tissue imaging[10], and automated breast volume scanning (ABVS)[11].

So far, most studies on AI in breast ultrasound have focused on differentiating benign from malignant breast masses based on their B-mode ultrasound features. A review summarizing the current status and future perspectives of AI in breast ultrasound is therefore needed. In this paper, we introduce the applications of AI for breast mass detection and diagnosis with ultrasound.

Early AI mainly refers to traditional machine learning, which solves problems in two steps: object detection and object recognition. First, a bounding-box detection algorithm scans the entire image to locate candidate regions that may contain the object; second, an object recognition algorithm identifies the object within each candidate region found in the first step.

In the recognition step, experts must first define the relevant features and encode each of them as a data type. The machine then extracts these features from the images, analyzes them quantitatively, and outputs a judgment. In this way, it can assist radiologists in detecting and analyzing lesions and improve the accuracy and efficiency of diagnosis.
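The two-step scheme can be illustrated with a minimal Python sketch. It assumes a grayscale image scaled to [0, 1]; the window size, stride, darkness threshold, and the single contrast feature are illustrative assumptions, not parameters of any cited system, and all function names are hypothetical.

```python
import numpy as np

def sliding_windows(image, size, stride):
    """Yield (row, col, patch) for every window position that fits in the image."""
    h, w = image.shape
    for r in range(0, h - size + 1, stride):
        for c in range(0, w - size + 1, stride):
            yield r, c, image[r:r + size, c:c + size]

def detect_candidates(image, size=8, stride=4, max_mean=0.3):
    """Step 1 (detection): keep windows whose mean intensity is low, a crude
    stand-in for a hypoechoic region. Returns (row, col, size) boxes."""
    return [(r, c, size) for r, c, patch in sliding_windows(image, size, stride)
            if patch.mean() < max_mean]

def recognize(image, box, min_contrast=0.3):
    """Step 2 (recognition): label a candidate with one hand-encoded feature,
    the contrast between the whole image and the box interior."""
    r, c, s = box
    contrast = image.mean() - image[r:r + s, c:c + s].mean()
    return "lesion" if contrast > min_contrast else "background"

# Toy image: bright background with one dark 8 x 8 region.
img = np.full((32, 32), 0.8)
img[8:16, 12:20] = 0.1
boxes = detect_candidates(img)
print(boxes, [recognize(img, b) for b in boxes])
```

Here the expert knowledge lives entirely in the hand-chosen threshold and feature, which is exactly the dependence that the deep learning approaches discussed later remove.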

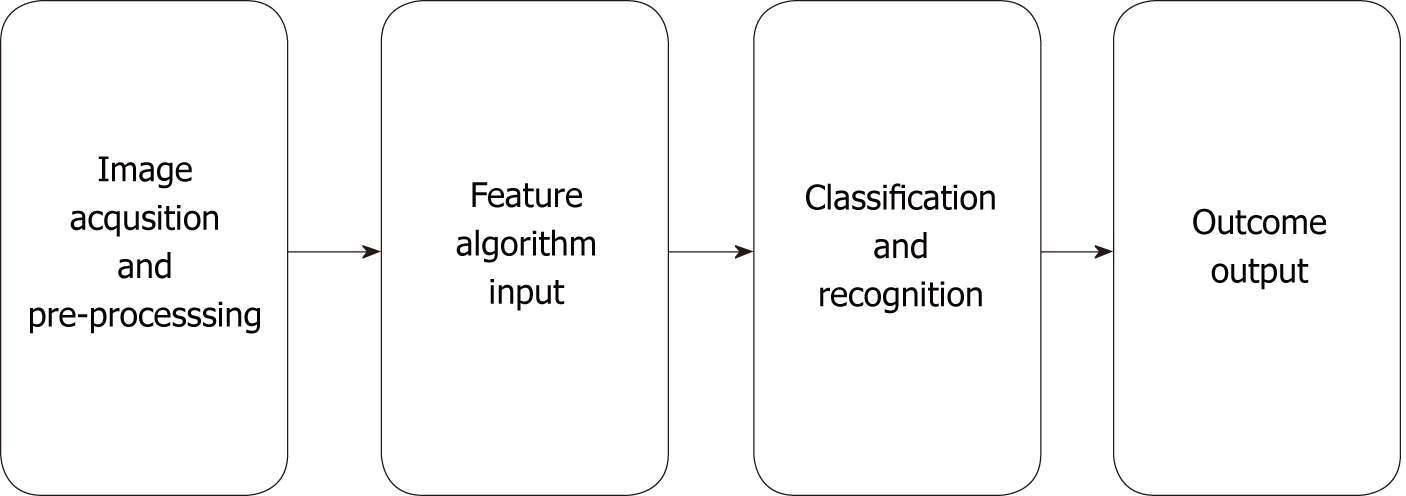

In the 1980s, computer-aided diagnosis (CAD) technology developed rapidly in medical imaging. The workflow of a CAD system can be roughly divided into several stages: data preprocessing, image segmentation, feature extraction, feature selection, classification and recognition, and result output (Figure 1).
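The CAD workflow maps naturally onto a chain of functions. The sketch below assumes a single lesion darker than its surroundings and uses a median filter, a global-mean threshold, three hand-crafted features, and a one-rule classifier; every stage is a hypothetical placeholder for the far more elaborate methods used in real CAD systems.

```python
import numpy as np

def preprocess(image, k=3):
    """Data preprocessing: k x k median filter to suppress speckle (edges replicated)."""
    pad = k // 2
    padded = np.pad(image, pad, mode="edge")
    out = np.empty(image.shape, dtype=float)
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            out[r, c] = np.median(padded[r:r + k, c:c + k])
    return out

def segment(image):
    """Image segmentation: pixels darker than the global mean form the mass mask."""
    return image < image.mean()

def extract_features(image, mask):
    """Feature extraction: size, mean echogenicity, and height/width ratio of the mask."""
    rows, cols = np.nonzero(mask)
    height = rows.max() - rows.min() + 1
    width = cols.max() - cols.min() + 1
    return {"area": float(mask.sum()),
            "mean_echo": float(image[mask].mean()),
            "height_width_ratio": float(height / width)}

def select_features(features, keep=("height_width_ratio",)):
    """Feature selection: keep only the features the classifier uses."""
    return {name: features[name] for name in keep}

def classify(selected):
    """Classification: toy rule flagging taller-than-wide masses as suspicious."""
    return "suspicious" if selected["height_width_ratio"] > 1.0 else "probably benign"

def cad_pipeline(image):
    """Result output: run all stages in order and return the label."""
    smooth = preprocess(image)
    return classify(select_features(extract_features(smooth, segment(smooth))))
```

Keeping each stage as a separate function mirrors the modular CAD design: any single stage (for example, the segmentation) can be swapped for a better method without touching the others.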

In traditional machine learning, the features most commonly used to describe a breast mass on ultrasound, including shape, texture, location, and orientation, must be identified by experts and encoded as data types. Therefore, the performance of machine learning algorithms depends on how accurately the extracted features capture the differences between benign and malignant breast masses.

Effective computable features derived from the Breast Imaging Reporting and Data System (BI-RADS) lexicon can help different machine learning methods distinguish benign from potentially malignant lesions. Lesion margin and orientation were the optimal features for almost all of the machine learning methods tested[12].
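As a minimal illustration of feeding BI-RADS-derived features to a machine learning method, the Python sketch below fits a logistic regression by gradient descent. The binary encoding (orientation: 0 = parallel, 1 = not parallel; margin: 0 = circumscribed, 1 = not circumscribed) and the six training cases are invented toy data, not values from the cited study.

```python
import numpy as np

def add_bias(X):
    """Prepend a column of ones so the first weight acts as the intercept."""
    X = np.asarray(X, dtype=float)
    return np.hstack([np.ones((len(X), 1)), X])

def train_logistic(X, y, lr=0.5, epochs=2000):
    """Batch gradient descent on the mean logistic (cross-entropy) loss."""
    Xb, y = add_bias(X), np.asarray(y, dtype=float)
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))   # predicted probability of malignancy
        w -= lr * Xb.T @ (p - y) / len(y)   # gradient of the mean loss
    return w

def predict_proba(w, X):
    return 1.0 / (1.0 + np.exp(-add_bias(X) @ w))

# Columns: [orientation not parallel, margin not circumscribed]; label 1 = malignant.
X = [[0, 0], [0, 0], [0, 1], [1, 0], [1, 1], [1, 1]]
y = [0, 0, 0, 1, 1, 1]
w = train_logistic(X, y)
print(predict_proba(w, [[0, 0], [1, 1]]))
```

The learned weights show how strongly each encoded feature pushes a case toward malignancy, which is one way studies compare the usefulness of individual BI-RADS features.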

CAD models can also be used to differentiate benign from metastatic axillary lymph nodes in patients with breast cancer. Zhang et al[13] proposed a computer-assisted method based on dual-modal features extracted from real-time elastography (RTE) and B-mode ultrasound. Five morphological features describing the hilum, size, shape, and echogenic uniformity of a lymph node were extracted from B-mode ultrasound, and three elastic features, namely hard area ratio, strain ratio, and coefficient of variation, were extracted from RTE. This computer-assisted method proved valuable for differentiating benign from metastatic lymph nodes.
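The three elastic features can be sketched from a strain map and two masks. The definitions below are plausible forms assumed for illustration (hard area ratio as the fraction of lesion pixels with strain below a threshold, strain ratio as mean reference-fat strain over mean lesion strain, coefficient of variation as the standard deviation over the mean of lesion strain); the exact definitions and threshold used by Zhang et al[13] may differ. Concatenating them with B-mode features gives one dual-modal feature vector.

```python
import numpy as np

def elastic_features(strain, lesion_mask, fat_mask, hard_threshold=0.2):
    """Return (hard area ratio, strain ratio, coefficient of variation).
    Lower strain means stiffer tissue; hard_threshold is a hypothetical cutoff."""
    lesion = strain[lesion_mask]
    hard_area_ratio = float(np.mean(lesion < hard_threshold))
    strain_ratio = float(strain[fat_mask].mean() / lesion.mean())
    coeff_variation = float(lesion.std() / lesion.mean())
    return hard_area_ratio, strain_ratio, coeff_variation

def dual_modal_vector(bmode_features, elastic):
    """Concatenate B-mode and elastographic features into one classifier input."""
    return np.concatenate([np.asarray(bmode_features, float), np.asarray(elastic, float)])

# Toy strain map: soft background, a lesion whose upper half is stiffer.
strain = np.ones((10, 10))
strain[2:4, 2:6] = 0.1
strain[4:6, 2:6] = 0.3
lesion_mask = np.zeros((10, 10), dtype=bool)
lesion_mask[2:6, 2:6] = True
fat_mask = np.zeros((10, 10), dtype=bool)
fat_mask[8:10, :] = True
feats = elastic_features(strain, lesion_mask, fat_mask)
vector = dual_modal_vector([1.2, 0.8, 0.5, 0.3, 14.0], feats)  # five hypothetical B-mode values
```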

Recently, great progress has been made in image processing, image segmentation, and the selection of regions of interest (ROIs) in CAD. Feng et al[14] proposed an adaptive fuzzy C-means method that utilizes neighboring information and effectively improves breast tumor segmentation on ultrasound images. Cai et al[15] proposed a phase congruency-based binary pattern texture descriptor that is effective and robust for segmenting and classifying B-mode ultrasound images regardless of grey-scale variation.
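Feng et al's method extends fuzzy C-means (FCM) with neighborhood information. As a baseline only, the sketch below implements plain FCM on pixel intensities, without the neighborhood term that their adaptive method adds, and labels the darker cluster as the tumor. The fuzziness exponent m = 2 and the two-cluster setting are common defaults assumed here.

```python
import numpy as np

def fuzzy_c_means(values, c=2, m=2.0, iters=100, seed=0):
    """Standard FCM on 1-D data. Returns (centers, memberships of shape (c, n))."""
    x = np.asarray(values, dtype=float).ravel()
    rng = np.random.default_rng(seed)
    u = rng.random((c, x.size))
    u /= u.sum(axis=0)                                  # memberships sum to 1 per pixel
    for _ in range(iters):
        um = u ** m
        centers = um @ x / um.sum(axis=1)               # fuzzy-weighted cluster means
        dist = np.abs(x[None, :] - centers[:, None]) + 1e-12
        inv = dist ** (-2.0 / (m - 1.0))
        u = inv / inv.sum(axis=0)                       # standard membership update
    return centers, u

def segment_tumor(image, **kwargs):
    """Assign each pixel to its highest-membership cluster; the darker cluster is the tumor."""
    centers, u = fuzzy_c_means(image, **kwargs)
    labels = u.argmax(axis=0).reshape(image.shape)
    return labels == centers.argmin()

image = np.full((10, 10), 0.8)
image[3:7, 3:7] = 0.1
tumor_mask = segment_tumor(image)
```

Because plain FCM looks at each pixel independently, speckle noise produces scattered misclassified pixels; adding neighborhood information, as Feng et al did, is one way to suppress them.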

Grouped by the similarity of their functions and forms, machine learning algorithms generally include support vector machines, fuzzy logic, and artificial neural networks, among others, each with its own advantages and disadvantages. Bing et al[16] proposed a novel method based on sparse representation for breast ultrasound image classification within the multi-instance learning (MIL) framework. Compared with state-of-the-art MIL methods, this method achieved clearly superior classification accuracy.
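The sketch below does not reproduce Bing et al's sparse-representation classifier. It only illustrates the multi-instance framework that method operates in: an image is a "bag" of patch feature vectors (instances), only the bag carries a label, and under the standard MIL assumption a bag is positive if at least one instance is. The linear instance scorer and its weights are hypothetical.

```python
import numpy as np

def instance_scores(bag, w, b):
    """Score each instance (one row per patch feature vector) with a linear model."""
    return np.asarray(bag, dtype=float) @ np.asarray(w, dtype=float) + b

def classify_bag(bag, w, b=0.0):
    """Standard MIL assumption: the bag is positive if its highest-scoring instance is."""
    return int(instance_scores(bag, w, b).max() > 0)

w = [1.0, -1.0]                           # hypothetical weights per patch feature
benign_bag = [[0.0, 1.0], [0.2, 0.5]]     # every patch scores below 0
malignant_bag = [[0.0, 1.0], [2.0, 0.5]]  # one patch scores above 0
print(classify_bag(benign_bag, w), classify_bag(malignant_bag, w))
```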

Lee et al[17] proposed a novel Fourier-based shape feature extraction technique and showed that it provides higher classification accuracy for breast tumors in a computer-aided B-mode ultrasound diagnosis system.
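Fourier shape descriptors are one standard way to turn a lesion contour into features; since the exact formulation of Lee et al[17] is not given here, the sketch below uses the classic complex-coordinate version as an assumption. It treats ordered boundary points as complex numbers, takes the discrete Fourier transform, drops the zero-frequency (centroid) term for translation invariance, keeps magnitudes for rotation and starting-point invariance, and normalizes by the largest magnitude for scale invariance. It also assumes a counterclockwise contour, so the first harmonic dominates; lobulated or irregular margins put energy into higher harmonics.

```python
import numpy as np

def fourier_descriptors(contour, n=8):
    """contour: (N, 2) array of ordered boundary points (x, y).
    Returns magnitudes of harmonics 1..n, normalized by the largest non-DC magnitude."""
    z = contour[:, 0] + 1j * contour[:, 1]
    mags = np.abs(np.fft.fft(z))[1:]   # drop index 0, the centroid term
    return mags[:n] / mags.max()

theta = 2 * np.pi * np.arange(64) / 64
circle = np.column_stack([np.cos(theta), np.sin(theta)])
radius = 1 + 0.3 * np.cos(6 * theta)   # six-lobed contour
lobulated = np.column_stack([radius * np.cos(theta), radius * np.sin(theta)])
print(np.round(fourier_descriptors(circle), 3))
print(np.round(fourier_descriptors(lobulated), 3))
```

For the circle only the first descriptor is nonzero, while the six-lobed contour gains a clear component at the seventh harmonic.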

In addition, extracting and training on more features may improve recognition performance. de S Silva et al[18] questioned the claim that training with a reduced set of features yields better recognition. In their experiments, the performance obtained with all 22 features was slightly better than that obtained with a reduced feature set.

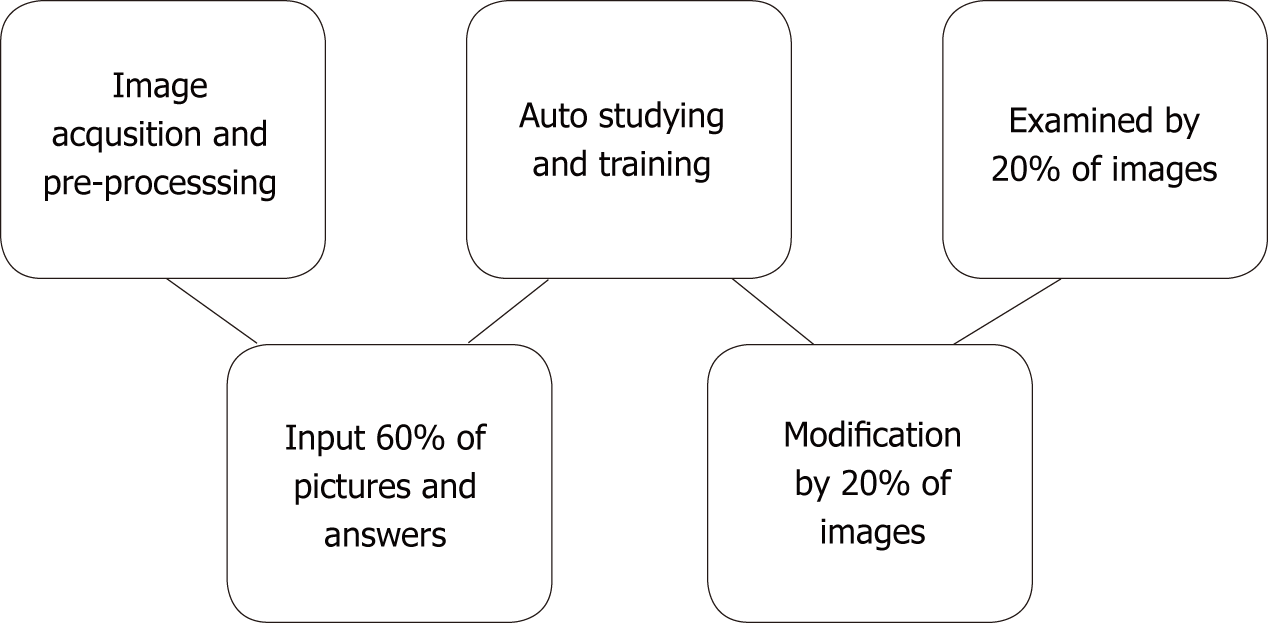

In contrast to traditional machine learning algorithms, DL algorithms do not rely on features and ROIs defined by humans in advance[19,20]; instead, they learn to carry out all processing steps on their own. Take convolutional neural networks (CNNs), the most popular DL architecture in medical imaging, as an example: the model consists of an input layer, hidden layers, and an output layer, and the hidden layers are the key to accomplishing recognition. The hidden layers comprise multiple convolutional layers and a fully connected layer. The convolutional layers learn a large number of image features directly from the input, and the fully connected layer then combines these features to produce the output[21]. DL has clearly outperformed other approaches in computer vision competitions, despite its heavy dependence on data and hardware[22]. In medical imaging, besides ultrasound[23], studies have found that DL methods also perform well on computed tomography[24] and MRI[25] (Figure 2).
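The layer structure described above can be made concrete with a forward pass written in plain NumPy. This is an untrained toy with random weights: one convolutional layer with two kernels, ReLU, 2 x 2 max pooling, one fully connected layer, and a softmax over two classes. Pooling and the activation function are standard CNN components not mentioned in the text; real systems stack many such layers and learn the weights by backpropagation.

```python
import numpy as np

def conv2d(x, kernels):
    """Valid 2-D cross-correlation of image x (H, W) with kernels (K, kh, kw)."""
    k, kh, kw = kernels.shape
    h, w = x.shape
    out = np.zeros((k, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            out[:, i, j] = np.tensordot(kernels, x[i:i + kh, j:j + kw], axes=([1, 2], [0, 1]))
    return out

def max_pool(x, s=2):
    """Non-overlapping s x s max pooling on a (K, H, W) feature stack."""
    k, h, w = x.shape
    x = x[:, :h - h % s, :w - w % s]
    return x.reshape(k, h // s, s, w // s, s).max(axis=(2, 4))

def forward(image, kernels, fc_w, fc_b):
    feats = np.maximum(conv2d(image, kernels), 0.0)   # convolutional layer + ReLU
    feats = max_pool(feats)                           # spatial down-sampling
    logits = fc_w @ feats.ravel() + fc_b              # fully connected layer
    e = np.exp(logits - logits.max())                 # numerically stable softmax
    return e / e.sum()                                # class probabilities

rng = np.random.default_rng(0)
image = rng.random((8, 8))                  # stand-in for an 8 x 8 ultrasound patch
kernels = rng.standard_normal((2, 3, 3))    # 2 kernels: (2, 6, 6) maps, pooled to (2, 3, 3)
fc_w, fc_b = rng.standard_normal((2, 18)), np.zeros(2)
probs = forward(image, kernels, fc_w, fc_b)   # e.g. [P(benign), P(malignant)], untrained
```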

Becker et al[26] conducted a retrospective study to evaluate the performance of generic DL software (DLS) in classifying breast cancer on ultrasound images. They found that the diagnostic accuracy of DLS was comparable to that of radiologists, and that DLS learned better and faster than a human reader without prior experience.

Zhang et al[27] established a DL architecture that automatically extracts image features from shear-wave elastography and evaluated it in differentiating benign from malignant breast tumors. The results showed that DL achieved better classification performance, with an accuracy of 93.4%, a sensitivity of 88.6%, a specificity of 97.1%, and an area under the receiver operating characteristic curve (AUC) of 0.947.
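The figures reported in these studies (accuracy, sensitivity, specificity, AUC) follow from standard formulas. A minimal Python sketch, with malignancy as the positive class; AUC is computed as the Mann-Whitney probability that a randomly chosen malignant case scores higher than a randomly chosen benign one, with ties counting one half. The example labels and scores are invented.

```python
import numpy as np

def confusion_metrics(y_true, y_pred):
    """Accuracy, sensitivity, and specificity with malignant (1) as the positive class."""
    t = np.asarray(y_true, dtype=bool)
    p = np.asarray(y_pred, dtype=bool)
    tp, tn = np.sum(t & p), np.sum(~t & ~p)
    fp, fn = np.sum(~t & p), np.sum(t & ~p)
    return {"accuracy": (tp + tn) / t.size,
            "sensitivity": tp / (tp + fn),
            "specificity": tn / (tn + fp)}

def auc(y_true, scores):
    """Mann-Whitney AUC: P(malignant score > benign score), ties counted as 1/2."""
    t = np.asarray(y_true, dtype=bool)
    s = np.asarray(scores, dtype=float)
    diff = s[t][:, None] - s[~t][None, :]     # every malignant-benign pair
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)

y_true = [1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.1, 0.85]
print(confusion_metrics(y_true, y_pred), auc(y_true, scores))
```

Sensitivity and specificity depend on one chosen threshold, whereas AUC summarizes ranking quality over all thresholds, which is why studies usually report both.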

Han et al[28] used a CNN-based DL framework to differentiate distinct types of lesions and nodules on breast ultrasound images. The network showed an accuracy of about 0.9, a sensitivity of 0.86, and a specificity of 0.96. The method showed promising results in classifying malignant lesions in a short time and in supporting radiologists in discriminating malignant lesions; it could therefore work in tandem with human radiologists to improve diagnostic performance.

CNNs have proven to be effective classifiers, but they require large amounts of training data, which can be difficult to obtain. Transferred deep neural networks, that is, networks pretrained on other data and then adapted to the new task, are powerful tools for training deeper networks without overfitting, and they may perform better than CNNs trained from scratch. Xiao et al[29] compared three transferred models, a CNN model, and a traditional machine learning-based model for differentiating benign from malignant tumors in breast ultrasound data. The transfer learning approach outperformed both the traditional machine learning model and the CNN model; among the single models, the transferred InceptionV3 performed best, with an accuracy of 85.13% and an AUC of 0.91. A model built on the combined features extracted from all three transferred models performed better still, with an accuracy of 89.44% and an AUC of 0.93 on an independent test set.
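Feature-level fusion of transferred models can be sketched as follows. In practice each backbone would be a CNN pretrained on a large dataset with its weights frozen; here, purely as a hypothetical stand-in so the example runs without downloads, each "backbone" is a fixed random projection followed by ReLU. Only the classification head is trained, here a closed-form ridge classifier, which is an assumption rather than the head used by Xiao et al[29]. The data are synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical frozen "backbones": fixed weights that are never updated.
BACKBONES = [rng.standard_normal((64, d)) / 8.0 for d in (32, 48, 16)]

def backbone_features(x, W):
    return np.maximum(x @ W, 0.0)

def fused_features(x):
    """Concatenate the features from all backbones (feature-level fusion)."""
    return np.hstack([backbone_features(x, W) for W in BACKBONES])

def with_bias(F):
    return np.hstack([F, np.ones((len(F), 1))])

def fit_head(F, y, lam=1e-2):
    """Train only the head: ridge regression onto +1 (malignant) / -1 (benign) targets."""
    A = with_bias(F)
    t = np.where(np.asarray(y) == 1, 1.0, -1.0)
    return np.linalg.solve(A.T @ A + lam * np.eye(A.shape[1]), A.T @ t)

def predict(w, F):
    return (with_bias(F) @ w > 0).astype(int)

# Synthetic 64-dimensional inputs standing in for images: two separated classes.
y = np.repeat([0, 1], 50)
x = rng.standard_normal((100, 64)) + np.where(y[:, None] == 1, 1.0, -1.0)
F = fused_features(x)
w = fit_head(F, y)
train_acc = np.mean(predict(w, F) == y)
```

Freezing the backbones keeps the number of trainable parameters small, which is the property that lets transfer learning work with limited labeled ultrasound data.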

Yap et al[30] studied three DL methods (a patch-based LeNet, U-Net, and a transfer learning approach with a pretrained FCN-AlexNet) for breast ultrasound lesion detection and compared their performance with that of four state-of-the-art lesion detection algorithms. When assessed on two datasets, the transfer learning approach performed best of the three DL approaches in terms of true positive fraction, false positives per image, and F-measure.
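The three detection metrics can be computed as below. The matching rule (a detection is a true positive if its intersection-over-union with a not-yet-matched ground-truth box is at least 0.5) is an assumption for illustration; Yap et al[30] may use a different criterion. True positive fraction is matched lesions over all lesions, false positives per image is unmatched detections over the number of images, and the F-measure is the harmonic mean of precision and true positive fraction.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x0, y0, x1, y1)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def detection_metrics(per_image, thr=0.5):
    """per_image: list of (ground_truth_boxes, predicted_boxes), one pair per image.
    Returns (true positive fraction, false positives per image, F-measure)."""
    tp = fp = n_lesions = 0
    for truths, preds in per_image:
        n_lesions += len(truths)
        matched = set()
        for p in preds:
            free = [i for i in range(len(truths)) if i not in matched]
            best = max(free, key=lambda i: iou(p, truths[i]), default=None)
            if best is not None and iou(p, truths[best]) >= thr:
                matched.add(best)   # each lesion can be matched only once
                tp += 1
            else:
                fp += 1
    tpf = tp / n_lesions
    fps_per_image = fp / len(per_image)
    precision = tp / (tp + fp) if tp + fp else 0.0
    f_measure = 2 * precision * tpf / (precision + tpf) if precision + tpf else 0.0
    return tpf, fps_per_image, f_measure

images = [([(0, 0, 10, 10)], [(1, 1, 10, 10), (20, 20, 30, 30)]),  # one hit, one false positive
          ([(5, 5, 15, 15)], [])]                                   # one missed lesion
print(detection_metrics(images))
```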

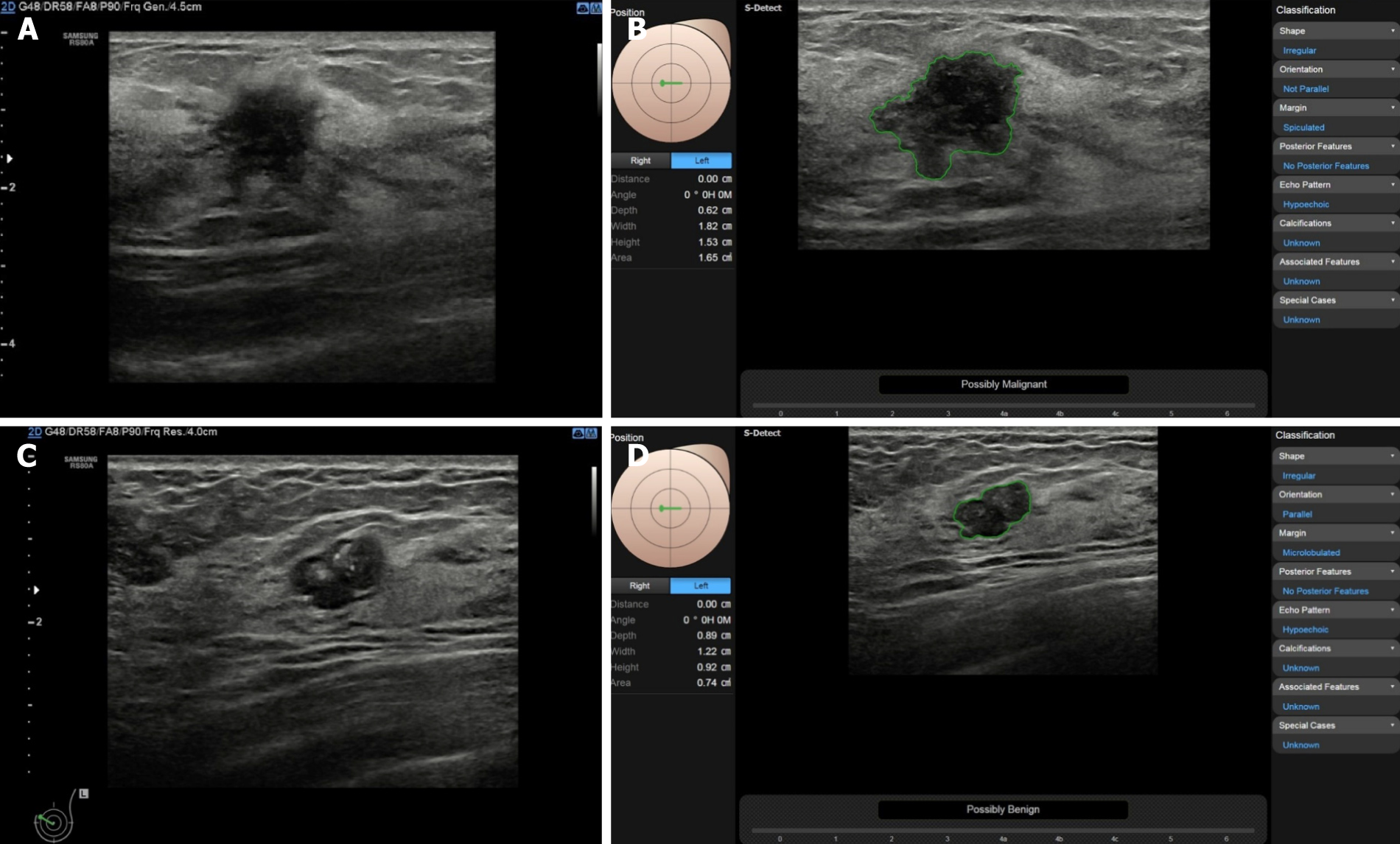

Images are usually transferred from the ultrasound machine to a workstation for post-processing, whereas S-detect, a DL-based technique, can identify and mark breast masses directly on the ultrasound system. S-detect is a tool built into the Samsung RS80A ultrasound system; based on a DL algorithm, it performs lesion segmentation, feature analysis, and description according to the BI-RADS 2003 or BI-RADS 2013 lexicon. After an ROI is selected automatically or manually, it gives an immediate judgment of benignity or malignancy on the frozen image (Figure 3). Kim et al[31] evaluated the diagnostic performance of S-detect in differentiating benign from malignant breast lesions. With the cutoff set at BI-RADS category 4a, S-detect had significantly higher specificity, positive predictive value (PPV), and accuracy than the radiologist (P < 0.05 for all), and its AUC was 0.725 compared with 0.653 (P = 0.038).
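Setting the cutoff at category 4a means that any lesion assessed as 4a or higher is called positive (malignant) and anything lower is called negative. The Python sketch below shows that dichotomization and the resulting sensitivity, specificity, PPV, and accuracy. It uses BI-RADS assessment categories 2 to 5 only (categories 0, 1, and 6 are omitted for simplicity), and the six example lesions are invented.

```python
ORDER = ["2", "3", "4a", "4b", "4c", "5"]   # BI-RADS assessment categories, increasing suspicion

def dichotomize(categories, cutoff="4a"):
    """True (test positive) when a category is at or above the cutoff."""
    k = ORDER.index(cutoff)
    return [ORDER.index(c) >= k for c in categories]

def diagnostic_stats(categories, malignant, cutoff="4a"):
    """Compare dichotomized assessments with the reference standard (True = malignant)."""
    pred = dichotomize(categories, cutoff)
    tp = sum(p and m for p, m in zip(pred, malignant))
    fp = sum(p and not m for p, m in zip(pred, malignant))
    tn = sum(not p and not m for p, m in zip(pred, malignant))
    fn = sum(not p and m for p, m in zip(pred, malignant))
    return {"sensitivity": tp / (tp + fn), "specificity": tn / (tn + fp),
            "ppv": tp / (tp + fp), "accuracy": (tp + tn) / len(pred)}

stats = diagnostic_stats(["3", "4a", "4b", "5", "2", "4a"],
                         [False, False, True, True, False, True])
print(stats)
```

Moving the cutoff up (for example to 4b) trades sensitivity for specificity and PPV, which is why the chosen cutoff must be stated when comparing readers.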

Di Segni et al[32] also evaluated the diagnostic performance of S-detect in the assessment of focal breast lesions. S-detect showed a sensitivity above 90% and a specificity of 70.8%, with inter-rater agreement ranging from moderate to good. S-detect may therefore be a feasible tool for characterizing breast lesions and for assisting physicians in making clinical decisions.
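Inter-rater agreement of this kind is commonly summarized with Cohen's kappa, which corrects the observed agreement p_o for the agreement p_e expected by chance: kappa = (p_o - p_e) / (1 - p_e). Whether Di Segni et al[32] used exactly this statistic is not stated here, so the sketch is illustrative only; the two readers' labels (B = benign, M = malignant) are invented, and for them the function returns 0.75.

```python
from collections import Counter

def cohen_kappa(rater1, rater2):
    """Cohen's kappa for two raters labelling the same cases."""
    n = len(rater1)
    p_o = sum(a == b for a, b in zip(rater1, rater2)) / n          # observed agreement
    c1, c2 = Counter(rater1), Counter(rater2)
    p_e = sum(c1[k] * c2[k] for k in set(c1) | set(c2)) / n ** 2    # chance agreement
    return (p_o - p_e) / (1 - p_e)

reader_a = ["B", "B", "M", "M", "B", "M", "B", "B"]
reader_b = ["B", "M", "M", "M", "B", "M", "B", "B"]
kappa = cohen_kappa(reader_a, reader_b)
```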

AI has been increasingly applied in ultrasound and has proved to be a powerful tool for providing reliable diagnoses with higher accuracy and efficiency while reducing physicians’ workload. It can be broadly divided into early machine learning, which depends on manually designed features, and DL, in which the software learns on its own. There are still no guidelines recommending the use of AI with ultrasound in clinical practice, and more studies are required to explore more advanced methods and to prove their usefulness.

In the near future, we believe that AI in breast ultrasound can not only distinguish between benign and malignant breast masses but also further classify specific benign diseases, such as inflammatory breast masses and fibroplasia. In addition, AI in ultrasound may also predict the tumor-node-metastasis (TNM) classification[33], prognosis, and treatment response of patients with breast cancer. Finally, AI-based differentiation of benign from malignant breast lesions need not rely on B-mode ultrasound images alone; it could also combine images from other advanced techniques, such as ABVS, elastography, and contrast-enhanced ultrasound, to improve accuracy.

Manuscript source: Invited manuscript

Specialty type: Radiology, nuclear medicine and medical imaging

Country of origin: China

Peer-review report classification

Grade A (Excellent): 0

Grade B (Very good): B

Grade C (Good): C

Grade D (Fair): 0

Grade E (Poor): 0

P- Reviewer: Bazeed MF, Gao BL S- Editor: Ji FF L- Editor: Wang TQ E- Editor: Song H

| 1. | Siegel RL, Miller KD, Jemal A. Cancer Statistics, 2017. CA Cancer J Clin. 2017;67:7-30. |

| 2. | Ferlay J, Soerjomataram I, Dikshit R, Eser S, Mathers C, Rebelo M, Parkin DM, Forman D, Bray F. Cancer incidence and mortality worldwide: sources, methods and major patterns in GLOBOCAN 2012. Int J Cancer. 2015;136:E359-E386. |

| 3. | Global Burden of Disease Cancer Collaboration; Fitzmaurice C, Dicker D, Pain A, Hamavid H, Moradi-Lakeh M, MacIntyre MF, Allen C, Hansen G, Woodbrook R, Wolfe C, Hamadeh RR, Moore A, Werdecker A, Gessner BD, Te Ao B, McMahon B, Karimkhani C, Yu C, Cooke GS, Schwebel DC, Carpenter DO, Pereira DM, Nash D, Kazi DS, De Leo D, Plass D, Ukwaja KN, Thurston GD, Yun Jin K, Simard EP, Mills E, Park EK, Catalá-López F, deVeber G, Gotay C, Khan G, Hosgood HD 3rd, Santos IS, Leasher JL, Singh J, Leigh J, Jonas JB, Sanabria J, Beardsley J, Jacobsen KH, Takahashi K, Franklin RC, Ronfani L, Montico M, Naldi L, Tonelli M, Geleijnse J, Petzold M, Shrime MG, Younis M, Yonemoto N, Breitborde N, Yip P, Pourmalek F, Lotufo PA, Esteghamati A, Hankey GJ, Ali R, Lunevicius R, Malekzadeh R, Dellavalle R, Weintraub R, Lucas R, Hay R, Rojas-Rueda D, Westerman R, Sepanlou SG, Nolte S, Patten S, Weichenthal S, Abera SF, Fereshtehnejad SM, Shiue I, Driscoll T, Vasankari T, Alsharif U, Rahimi-Movaghar V, Vlassov VV, Marcenes WS, Mekonnen W, Melaku YA, Yano Y, Artaman A, Campos I, MacLachlan J, Mueller U, Kim D, Trillini M, Eshrati B, Williams HC, Shibuya K, Dandona R, Murthy K, Cowie B, Amare AT, Antonio CA, Castañeda-Orjuela C, van Gool CH, Violante F, Oh IH, Deribe K, Soreide K, Knibbs L, Kereselidze M, Green M, Cardenas R, Roy N, Tillmann T, Li Y, Krueger H, Monasta L, Dey S, Sheikhbahaei S, Hafezi-Nejad N, Kumar GA, Sreeramareddy CT, Dandona L, Wang H, Vollset SE, Mokdad A, Salomon JA, Lozano R, Vos T, Forouzanfar M, Lopez A, Murray C, Naghavi M. The Global Burden of Cancer 2013. JAMA Oncol. 2015;1:505-527. |

| 4. | Hooley RJ, Scoutt LM, Philpotts LE. Breast ultrasonography: state of the art. Radiology. 2013;268:642-659. |

| 5. | Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18:500-510. |

| 6. | Uniyal N, Eskandari H, Abolmaesumi P, Sojoudi S, Gordon P, Warren L, Rohling RN, Salcudean SE, Moradi M. Ultrasound RF time series for classification of breast lesions. IEEE Trans Med Imaging. 2015;34:652-661. |

| 7. | Sidiropoulos KP, Kostopoulos SA, Glotsos DT, Athanasiadis EI, Dimitropoulos ND, Stonham JT, Cavouras DA. Multimodality GPU-based computer-assisted diagnosis of breast cancer using ultrasound and digital mammography images. Int J Comput Assist Radiol Surg. 2013;8:547-560. |

| 8. | Pearlman PC, Adams A, Elias SG, Mali WP, Viergever MA, Pluim JP. Mono- and multimodal registration of optical breast images. J Biomed Opt. 2012;17:080901-080901. |

| 9. | Lee JH, Kim YN, Park HJ. Bio-optics based sensation imaging for breast tumor detection using tissue characterization. Sensors (Basel). 2015;15:6306-6323. |

| 10. | Malik B, Klock J, Wiskin J, Lenox M. Objective breast tissue image classification using Quantitative Transmission ultrasound tomography. Sci Rep. 2016;6:38857. |

| 11. | Wang HY, Jiang YX, Zhu QL, Zhang J, Xiao MS, Liu H, Dai Q, Li JC, Sun Q. Automated Breast Volume Scanning: Identifying 3-D Coronal Plane Imaging Features May Help Categorize Complex Cysts. Ultrasound Med Biol. 2016;42:689-698. |

| 12. | Shen WC, Chang RF, Moon WK, Chou YH, Huang CS. Breast ultrasound computer-aided diagnosis using BI-RADS features. Acad Radiol. 2007;14:928-939. |

| 13. | Zhang Q, Suo J, Chang W, Shi J, Chen M. Dual-modal computer-assisted evaluation of axillary lymph node metastasis in breast cancer patients on both real-time elastography and B-mode ultrasound. Eur J Radiol. 2017;95:66-74. |

| 14. | Feng Y, Dong F, Xia X, Hu CH, Fan Q, Hu Y, Gao M, Mutic S. An adaptive Fuzzy C-means method utilizing neighboring information for breast tumor segmentation in ultrasound images. Med Phys. 2017;44:3752-3760. |

| 15. | Cai L, Wang X, Wang Y, Guo Y, Yu J, Wang Y. Robust phase-based texture descriptor for classification of breast ultrasound images. Biomed Eng Online. 2015;14:26. |

| 16. | Bing L, Wang W. Sparse Representation Based Multi-Instance Learning for Breast Ultrasound Image Classification. Comput Math Methods Med. 2017;2017:7894705. |

| 17. | Lee JH, Seong YK, Chang CH, Park J, Park M, Woo KG, Ko EY. Fourier-based shape feature extraction technique for computer-aided B-Mode ultrasound diagnosis of breast tumor. Conf Proc IEEE Eng Med Biol Soc. 2012;2012:6551-6554. |

| 18. | de S Silva SD, Costa MG, de A Pereira WC, Costa Filho CF. Breast tumor classification in ultrasound images using neural networks with improved generalization methods. Conf Proc IEEE Eng Med Biol Soc. 2015;2015:6321-6325. |

| 19. | Miotto R, Wang F, Wang S, Jiang X, Dudley JT. Deep learning for healthcare: review, opportunities and challenges. Brief Bioinform. 2018;19:1236-1246. |

| 20. | Shen D, Wu G, Suk HI. Deep Learning in Medical Image Analysis. Annu Rev Biomed Eng. 2017;19:221-248. |

| 21. | Suzuki K. Overview of deep learning in medical imaging. Radiol Phys Technol. 2017;10:257-273. |

| 22. | Erickson BJ, Korfiatis P, Akkus Z, Kline TL. Machine Learning for Medical Imaging. Radiographics. 2017;37:505-515. |

| 23. | Metaxas D, Axel L, Fichtinger G, Szekely G. Medical image computing and computer-assisted intervention--MICCAI2008. Preface. Med Image Comput Comput Assist Interv. 2008;11:V-VII. |

| 24. | González G, Ash SY, Vegas-Sánchez-Ferrero G, Onieva Onieva J, Rahaghi FN, Ross JC, Díaz A, San José Estépar R, Washko GR; COPDGene and ECLIPSE Investigators. Disease Staging and Prognosis in Smokers Using Deep Learning in Chest Computed Tomography. Am J Respir Crit Care Med. 2018;197:193-203. |

| 25. | Ghafoorian M, Karssemeijer N, Heskes T, van Uden IWM, Sanchez CI, Litjens G, de Leeuw FE, van Ginneken B, Marchiori E, Platel B. Location Sensitive Deep Convolutional Neural Networks for Segmentation of White Matter Hyperintensities. Sci Rep. 2017;7:5110. |

| 26. | Becker AS, Mueller M, Stoffel E, Marcon M, Ghafoor S, Boss A. Classification of breast cancer in ultrasound imaging using a generic deep learning analysis software: a pilot study. Br J Radiol. 2018;91:20170576. |

| 27. | Zhang Q, Xiao Y, Dai W, Suo J, Wang C, Shi J, Zheng H. Deep learning based classification of breast tumors with shear-wave elastography. Ultrasonics. 2016;72:150-157. |

| 28. | Han S, Kang HK, Jeong JY, Park MH, Kim W, Bang WC, Seong YK. A deep learning framework for supporting the classification of breast lesions in ultrasound images. Phys Med Biol. 2017;62:7714-7728. |

| 29. | Xiao T, Liu L, Li K, Qin W, Yu S, Li Z. Comparison of Transferred Deep Neural Networks in Ultrasonic Breast Masses Discrimination. Biomed Res Int. 2018;2018:4605191. |

| 30. | Yap MH, Pons G, Marti J, Ganau S, Sentis M, Zwiggelaar R, Davison AK, Marti R. Automated Breast Ultrasound Lesions Detection Using Convolutional Neural Networks. IEEE J Biomed Health Inform. 2018;22:1218-1226. |

| 31. | Kim K, Song MK, Kim EK, Yoon JH. Clinical application of S-Detect to breast masses on ultrasonography: a study evaluating the diagnostic performance and agreement with a dedicated breast radiologist. Ultrasonography. 2017;36:3-9. |

| 32. | Di Segni M, de Soccio V, Cantisani V, Bonito G, Rubini A, Di Segni G, Lamorte S, Magri V, De Vito C, Migliara G, Bartolotta TV, Metere A, Giacomelli L, de Felice C, D'Ambrosio F. Automated classification of focal breast lesions according to S-detect: validation and role as a clinical and teaching tool. J Ultrasound. 2018;21:105-118. |

| 33. | Plichta JK, Ren Y, Thomas SM, Greenup RA, Fayanju OM, Rosenberger LH, Hyslop T, Hwang ES. Implications for Breast Cancer Restaging Based on the 8th Edition AJCC Staging Manual. Ann Surg. 2018;. |