Peer-review started: July 23, 2018

First decision: August 20, 2018

Revised: September 24, 2018

Accepted: October 19, 2018

Article in press: October 19, 2018

Published online: November 5, 2018

Processing time: 107 Days and 12.1 Hours

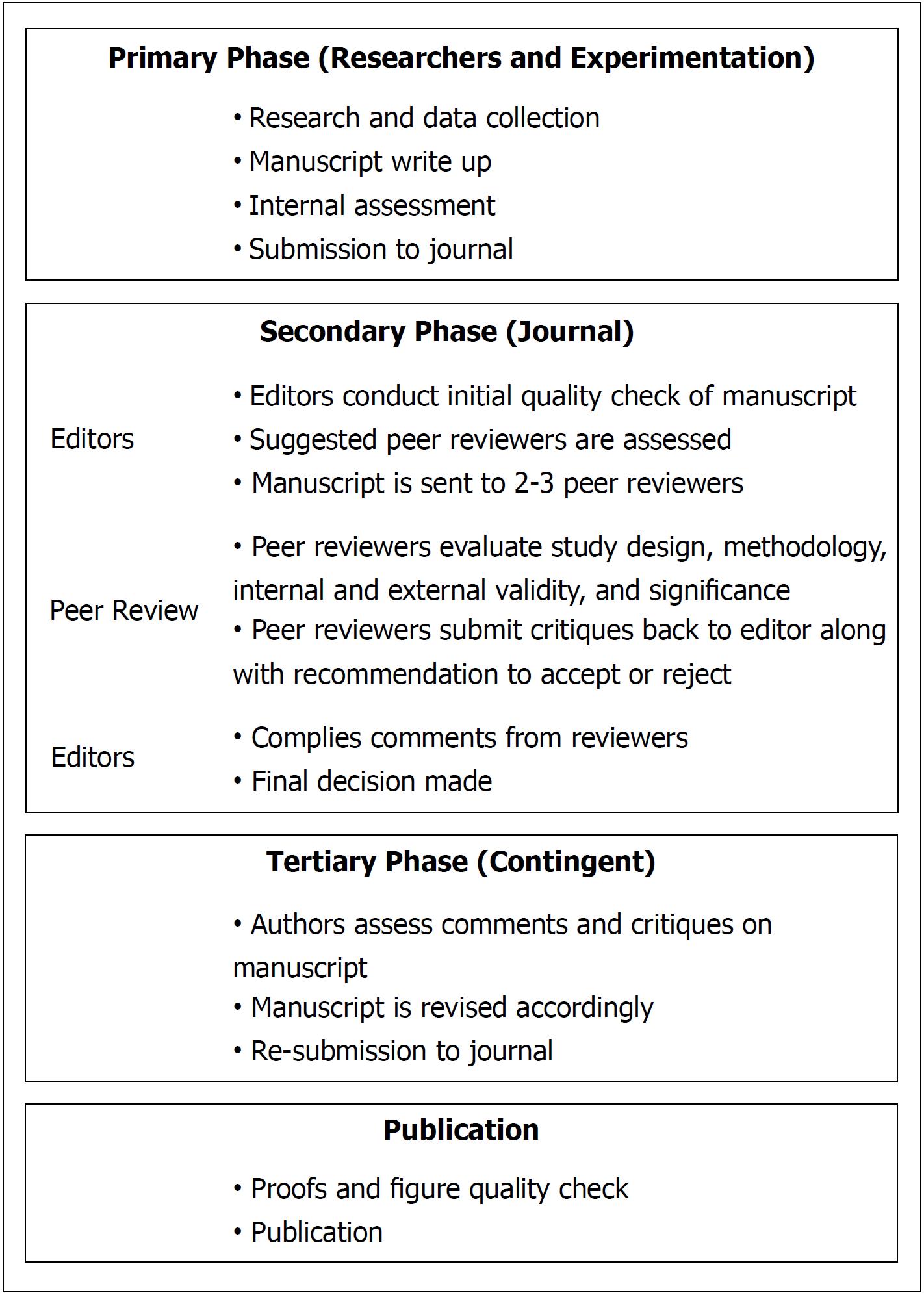

In order to ensure that the highest quality of literature is published, most journals utilize a peer review process for manuscripts submitted. Although the primary purpose for this process is to filter out “bad science”, the process is not perfect. While there is a general consensus among researchers and clinicians that something must be done to improve upon the method for properly vetting manuscripts, there are conflicting opinions on how to best implement new policies. In this paper, we discuss the most well-supported suggestions to improve the process, with the hopes of increasing rigor and reproducibility, ensuring double-blinding, developing set guidelines, offering early training to reviewers, and giving reviewers better feedback and recognition for their work.

Core tip: The peer review process lacks the proper transparency required to ensure adequate promotion of reproducibility and reliability, which are two core characteristics that peer-reviewed, published data should possess. It is important for researchers to re-evaluate the peer review process continuously in order to formulate up-to-date methods for improving transparency, thereby strengthening its credibility.

- Citation: Joshi ND, Deshpande KS, Roehmer CW, Vyas D. A slight glance at peer review. World J Surg Proced 2018; 8(1): 1-5

- URL: https://www.wjgnet.com/2219-2832/full/v8/i1/1.htm

- DOI: https://dx.doi.org/10.5412/wjsp.v8.i1.1

The peer review process serves as the primary filtering mechanism for journals and is meant to identify scientific shortcomings in submitted manuscripts. In the current scientific climate of “publish or perish”, this process is more necessary now than ever[1]. With the widespread problem of insufficient transparency combined with bias, there is a dire need to reevaluate present methods for accepting publications. The rigor and reproducibility of data in science are imperative, and the transparency of the peer review process is supposed to promote them. However, this process more often than not occurs behind closed doors[2]. Relying on a somewhat hidden process is problematic, to say the least, especially since scientists are often judged not by the quality of their science but by their skill at acquiring grants.

Academic research departments, pharmaceutical companies, and universities all benefit from publishing research. Grant funding for basic and clinical research is extremely competitive. To receive fundable scores on a grant, investigators are expected to have a strong track record of publishing. Grants are the driving force of research, translate to job security for researchers, and provide the money necessary for institutions to maintain their research[3]. Sadly, with the demand for quantity increasing, it seems that the quality of publications is being overlooked. To better understand the motivations and implications behind research publications, it is necessary to take a look at the current state of the peer review process, as well as potential resolutions.

The peer review process itself involves many interested parties. The authors are the primary stakeholders, as they wish to get their paper published. The next interested party is the research journal, where the primary obligation is to ensure the integrity and accuracy of the research that is published. Lastly, the public has an important interest in the research, as people rely on understandable, thoroughly explained research to provide the most genuine analyses of research topics.

One of the most prevalent misperceptions of the peer review process is that it is always consistent, objective, and reliable[4]. As research has advanced, manuscripts, and especially grants, have become denser, so that advanced training is required to decide whether the findings hold scientific merit. Because grants today focus on answering such specific scientific questions, it is only human that people will have differing opinions regarding a grant’s strengths, weaknesses and importance. Even with the most thorough investigations, there is little chance that different reviewers will completely agree[4]. Furthermore, the entire review process can be undermined by authors suggesting a “friendly reviewer”, that is, a colleague who will go easy on their work to ensure funding or, in the case of a manuscript, publication. The peer review process has become less reliable for thorough screening and is more like a casino’s roulette table based on odds, with participants trying to select the right combination of factors (e.g., the right journal and favorable reviews). In most journals, this game occurs behind closed doors, which opens the process to a multitude of problems[2,5].

Science is based on facts and data. Bias occurs when subjectivity is introduced into the interpretation of scientific work. Two commonly found forms of bias in peer review are confirmation bias and conservatism bias. Confirmation bias results when an individual interprets gathered evidence to affirm, rather than to challenge, common scientific beliefs in the respective field of literature[3]. This occurs in the peer review system when a reviewer is biased against a manuscript that presents data inconsistent with the reviewer’s own findings or opinions. Conservatism bias is evident when innovators are confronted with the hard truth that resource allocation is often impacted by partiality towards data that supports a particular research path[3]. Such bias has major repercussions. It violates crucial ethical standards which should be followed by journals and granting institutions when publishing and funding research, and it threatens funding for projects that pursue revolutionary theories, thus hindering scientific progress.

Additionally, the peer review process has been historically biased against negative results. Authors do not care to spend time writing up results that do not prove their hypotheses and there is very little journalistic value in publishing negative studies. Peer reviewers can be part of the problem here, but equal or greater blame falls on the journals, as many explicitly ask reviewers about results and novelty or importance[3]. There is no time to perseverate on negative results. This constant push forward leads to poor research reporting, which has been estimated to have led to billions of dollars of waste due to unreproducible results[5]. The Declaration of Helsinki, the set of ethical principles used to govern clinical research, states that researchers must be held responsible for the completeness and accuracy of their reports. Reports of research not in accordance with the principles of this declaration should not be accepted for publication[6,7]. In the current peer review process, there is a significant lack of regulation and infrastructure to uphold the moral obligations of reporting all research in an unbiased manner, according to the declaration[6,7].

Board members of journals are often unregulated. For instance, an editor-in-chief’s term should be limited, rather than allowing the same person to hold that position and make all final decisions on submitted research for 15-20 years. Although colleagues and professors deserve respect, some editors-in-chief are simply too far removed from the current generation’s research interests and practices. Furthermore, they may begin losing the patience, mental power or decision-making capacity to uphold a high-quality peer review process. Research associated with particular names, affiliations, institutions, or countries may receive superior or inferior treatment according to an editor-in-chief’s relationship with those entities. Moreover, some research manuscripts never see the light of day because they do not conform with the views of the journal’s leading force, the editor-in-chief. Therefore, improved selection and cycling of editors-in-chief and editorial board members, along with assignment of received manuscripts to persons with expertise, would produce a higher quality and less biased assessment. To aid in this, reviewers and editorial board members also need to feel that they are rewarded for their work and time, since many journals are making money from submissions through free labor from reviewers. Journals also gain importance when they have high-quality reviewers combing through their submitted literature.

There are a number of metrics used to evaluate the prestige of research journals. The most widely accepted and established metric is the impact factor. The impact factor for a given year is the number of citations received in that year by articles the journal published in the previous two years, divided by the number of articles the journal published during those two years[8]. The thought is that as the impact factor of a journal increases, the quality of the work accepted by this journal would increase correspondingly. However, as we have seen from recent retractions of published works from high-profile journals, this assumption does not always hold. If a researcher continually publishes in high impact factor journals, peer reviewers may be more inclined to give their manuscripts “the benefit of the doubt” and not scrutinize their work as much as they should. The possibility of someone putting higher merit into an author’s work based on the number of higher impact publications underscores the need to generate standardized, broad-reaching regulations for manuscript publication.
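As a rough illustration of the two-year impact factor calculation described above, the following minimal sketch divides citations by articles over the two-year window. The function name, parameter names, and the example figures (300 citations, 150 articles) are hypothetical and chosen only for illustration; real impact factors are computed by the indexing service from its own citation data.

```python
def impact_factor(citations_to_prior_two_years: int,
                  articles_in_prior_two_years: int) -> float:
    """Two-year impact factor sketch.

    citations_to_prior_two_years: citations received this year by articles
        the journal published in the previous two years.
    articles_in_prior_two_years: number of articles the journal published
        in those same two years.
    """
    if citations_to_prior_two_years < 0:
        raise ValueError("citation count cannot be negative")
    if articles_in_prior_two_years <= 0:
        raise ValueError("journal must have published at least one article")
    return citations_to_prior_two_years / articles_in_prior_two_years


# Hypothetical journal: 150 articles over the two-year window, cited 300 times.
example = impact_factor(300, 150)
print(example)  # 2.0
```

The ratio says nothing about how citations are distributed across articles, which is one reason a high value does not guarantee the quality of any individual paper.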

Another issue in the peer review process is the identification of manipulated data. Due to a lack of transparency by both authors and peer reviewers, data falsification may go unnoticed. An example of this is the 1998 study published in Lancet by Andrew Wakefield. He claimed that the measles portion of the measles, mumps and rubella vaccines caused autism. This work was later fully retracted, and Wakefield was permanently barred from practicing medicine in the United Kingdom because the results were not able to be reproduced by other laboratories. Nevertheless, despite the retraction, these published results led to the global scale propagation of ill-advised health decisions that has persisted for decades. If a research paper that is accused of misconduct (e.g., falsification of data) is set to be retracted, it is still often cited for decades before the actual retraction occurs[9]. Unfortunately, many cases of scientific misconduct go undiscovered, even though the retraction rate has risen 20-fold over the years[9]. It is therefore possible that peer review has become better at detecting misconduct. Alternatively, it is also possible that misconduct has simply been increasing due to the increased stress and pressure to publish or perish[9] (Figure 1).

If the ultimate goal of peer review is to produce quality literature, the process must be held to the most rigorous standards[10]. Keeping this in mind, many people have proposed and attempted to implement various ideas to improve the process; however, most of their recommendations have been based solely on expert opinion, rather than on experimental trials[11]. Some of the most promising improvements include not allowing authors to suggest reviewers, opening peer-review, establishing set guidelines, allowing authors to appeal, and adding training, feedback, and recognition for reviewers.

One of the first steps in the process of peer review is finding qualified individuals to review the paper. Many journals currently encourage or require authors to suggest preferred or non-preferred reviewers, with the idea that an author would be more comfortable with reviewers who are experts in their field of study[10]. This practice may generate immediate bias and conflict of interest by offering the option for authors to suggest friendly reviewers and to exclude reviewers who they know will be harsh critics[12]. Eliminating this option and keeping reviewer selection solely in the hands of the journal editors would restrict this avenue for possible exploitation of the review process. However, this change would require more work on the part of the editor, and therefore may not be a long-term solution to the problem. Even if reviewers are good friends of the authors, they may judge a manuscript as being poor, since the review process is anonymous. Also, renowned scientists sometimes accept invitations to review and then, due to other commitments, hand off the work to members of their group. These members may not have the full expertise and experience to perform an adequately sound review. This practice is not acceptable and is strictly forbidden by journals; however, it may happen quite often.

One of the most highly debated fixes to the peer review issue concerns whether or not journal editors should blind the reviewers and authors in the review process, and, if so, who should be blinded. There have been mixed results in studies evaluating the effectiveness of blinding. Initial studies by McNutt et al[13] showed that blinding slightly improved the quality of the review from the editor’s perspective. However, more recent, larger trials have shown no difference in the quality of reviews after blinding[14,15].

The main advantage to blinding reviewers is that it may allow for less biased and more critical reviews[10]. However, the double-blind methodology does not always hold true, as up to 50 percent of reviewers have been able to guess the identity of a paper’s author[12,16]. Therefore, several journals have moved toward a completely open review process and note some advantages. If reviewers know that they will be identified, they may exert additional effort during their review. Additionally, more open lines of communication between reviewers and authors have allowed for a high level of transparency and better understanding, which has ultimately led to increased rates of article acceptance into journals[14].

Although some prior authors have found that the only advantage to double-blind studies is the appearance of fairness, rather than actually being more fair, a preponderance of evidence seems to show that a double-blind strategy is still the best approach to the peer review process[15]. If performed properly, the double-blind process can reduce reviewer bias and improve objectivity, as opposed to the open review process, which can increase the likelihood of editorial favoritism and non-participation[17].

One commonly offered suggestion to formalize and streamline the review process is setting comprehensive guidelines for reviewers[17,18]. Having concrete guidelines in place before review can serve the dual purpose of allowing authors to have a clear knowledge of how their papers will be reviewed, as well as giving reviewers a full understanding of their responsibilities. Giving reviewers a clear picture of what a review will entail may also increase reviewer participation. Guidelines should include clear statements regarding the specific criteria used to evaluate each paper, as well as descriptions of standards used to grade a paper’s quality[17].

Other studies have encouraged the idea of training reviewers prior to review. A study done in 2006 showed that training reviewers, whether in person or electronically, produced only minor improvements in quality, not significant enough to make a noticeable difference[4]. Also, formal training can be difficult to arrange and very expensive to implement, given the vast number of journals and all the variability in their publishing rules. One issue with training current reviewers is that those with years of experience may be hesitant to change their style of review. It is therefore recommended that all reviewer training begin early in a reviewer’s career to allow them to develop good habits early on. Additionally, electronic methods of training should be promoted, as reviewers would be able to complete this in their own time, which would be logistically feasible for most journals.

A major criticism of the peer review process concerns what an author should do when two reviewers disagree on how to improve a paper. There are often only two reviewers for a paper, leaving the journal editor as the person who has to “break the tie” between reviewers and decide the fate of the manuscript[12,19]. One proposed solution to this is to make all reviewer comments available for other reviewers to see[19]. This solution, however, can cause problems, including increased time of review or reviewers not stating their original ideas. An alternative is to keep the comments of reviewers private, but to require three reviewers per paper so there is never a tie. Although a requirement of three reviewers would increase the diversity of the audience reviewing the manuscript, the greatest concern with this method is the increase in the time it takes to publish. Scientific advances are happening at such a rapid pace that it is crucial to keep time in mind with any potential fix to the system.
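The tie-breaking argument above can be sketched as a simple majority vote. This is only an illustration under stated assumptions: the function name and the recommendation labels ("accept", "reject", "revise") are hypothetical, and the guarantee that three reviewers never tie holds only when each reviewer gives one of two possible recommendations.

```python
from collections import Counter


def majority_decision(recommendations):
    """Return the recommendation with a strict plurality, or None on a tie.

    A None result stands for the case where the editor must break the tie.
    """
    if not recommendations:
        raise ValueError("at least one recommendation is required")
    # most_common() lists (label, votes) pairs from most to least frequent.
    ranked = Counter(recommendations).most_common()
    top_label, top_votes = ranked[0]
    if len(ranked) > 1 and ranked[1][1] == top_votes:
        return None  # two labels share the lead, so there is no winner
    return top_label


# Two reviewers who disagree leave the decision to the editor.
print(majority_decision(["accept", "reject"]))            # None
# A third reviewer resolves a binary disagreement.
print(majority_decision(["accept", "reject", "accept"]))  # accept
# With more than two possible labels, three reviewers can still tie.
print(majority_decision(["accept", "revise", "reject"]))  # None
```

The last case suggests that a journal adopting a three-reviewer rule would also need to decide how graded recommendations are combined.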

If we are to improve the quality of the review process, it is crucial for reviewers to receive constructive criticism of their performance after a review. Journals should consider using standardized scoring and feedback models for reviewers to allow them to see where they might be able to improve their reviews of future papers. This method would improve the process, but also has potential downsides, such as scores for reviewers being based on reactionary responses from authors who liked or disliked the reviewers’ comments. Despite the potential pitfalls, this route offers a relatively low-effort fix for the current system.

Additionally, reviewers may feel that there is not enough incentive to review papers. Increasing recognition and incentives for reviewers may lead to more reviewer participation, as well as faster turnaround times. Currently, the benefits of reviewing may only include free journal access, increased access to new literature, and the reward of giving back to the respective field. Suggestions for improved recognition have included financial incentives, free conference access, or even making reviews citable[11,17]. However, making reviews citable may disproportionately increase the number of positive reviews. Additionally, if journals give reviewers incentives, this may not only limit the number of papers a journal can publish due to the financial burden, but may also introduce the possibility of a reviewer simply trying to review as many papers as possible. A possible option would be to create a metric for researchers and clinicians, similar to an impact score, that would track the number of papers an individual has reviewed.

Lastly, a problem that authors often confront at the end of the peer review process is how to defend a rejected submission. Most journals do not offer any type of author appeal system and often declare the peer review and editor’s decision to be final. This refusal to consider appeals could cause important research to be overlooked[12]. Journals should offer no guarantee, but it is important for them to listen to valid appeals from authors as a way of reducing reporting bias. Some journals have even begun hiring an ombudsperson in order to handle these situations[17]. Clearly, each journal should have a streamlined system with specific criteria to ensure a prompt and fair process for both author and journal.

For years, the peer review process has been a guiding force for scientific publishing. However, the process continues to attract controversy and retain fundamental flaws. The weaknesses within the process are easy to identify, and journals need to do their best to correct them so that everyone may benefit from the valuable research being performed around the world. One such correction could be developing and promoting journals that specialize in publishing negative results. Science is not perfect and the current system only gives credit to research that is published. The “publish or perish” mentality needs to be addressed and revamped. Science is increasingly becoming a collaborative experience, with clinicians and researchers understanding that they cannot be experts on everything. Allowing researchers to publish negative results would encourage a spirit of collaboration and would lead to a dramatic shift in scientific discovery. Grant money would be used more efficiently because of the increased transparency regarding experimental outcomes that did not bear fruit. Furthermore, less time would be wasted and more time would be spent expanding the knowledge base. While this may seem like an idealistic goal for the future of research, we hope that the stigma of negative results is eliminated and that the scientific community can work together to improve upon the peer review system for the betterment of science itself.

Manuscript source: Unsolicited manuscript

Specialty type: Surgery

Country of origin: United States

Peer-review report classification

Grade A (Excellent): 0

Grade B (Very good): B, B

Grade C (Good): C

Grade D (Fair): 0

Grade E (Poor): 0

P- Reviewer: Hussain A, Laschke MW, Yan SL; S- Editor: Cui LJ; L- Editor: Filipodia; E- Editor: Yin SY

| 1. | Al-Adawi S, Ali BH, Al-Zakwani I. Research Misconduct: The Peril of Publish or Perish. Oman Med J. 2016;31:5-11. |

| 2. | Wicherts JM. Peer Review Quality and Transparency of the Peer-Review Process in Open Access and Subscription Journals. PLoS One. 2016;11:e0147913. |

| 3. | Manchikanti L, Kaye AD, Boswell MV, Hirsch JA. Medical journal peer review: process and bias. Pain Physician. 2015;18:E1-E14. |

| 4. | Smith R. Peer review: a flawed process at the heart of science and journals. J R Soc Med. 2006;99:178-182. |

| 5. | Chalmers I, Glasziou P. Should there be greater use of preprint servers for publishing reports of biomedical science? F1000Res. 2016;5:272. |

| 7. | Stanciu C. Ethics, research, and Helsinki declaration. Rev Med Chir Soc Med Nat Iasi. 2001;105:626-627. |

| 8. | Walter G, Bloch S, Hunt G, Fisher K. Counting on citations: a flawed way to measure quality. Med J Aust. 2003;178:280-281. |

| 9. | George SL. Research misconduct and data fraud in clinical trials: prevalence and causal factors. Int J Clin Oncol. 2016;21:15-21. |

| 10. | Sprowson AP, Rankin KS, McNamara I, Costa ML, Rangan A. Improving the peer review process in orthopaedic journals. Bone Joint Res. 2013;2:245-247. |

| 11. | Vercellini P, Buggio L, Viganò P, Somigliana E. Peer review in medical journals: Beyond quality of reports towards transparency and public scrutiny of the process. Eur J Intern Med. 2016;31:15-19. |

| 12. | Faggion CM Jr. Improving the peer-review process from the perspective of an author and reviewer. Br Dent J. 2016;220:167-168. |

| 13. | McNutt RA, Evans AT, Fletcher RH, Fletcher SW. The effects of blinding on the quality of peer review. A randomized trial. JAMA. 1990;263:1371-1376. |

| 14. | van Rooyen S, Godlee F, Evans S, Black N, Smith R. Effect of open peer review on quality of reviews and on reviewers’ recommendations: a randomised trial. BMJ. 1999;318:23-27. |

| 15. | Justice AC, Cho MK, Winker MA, Berlin JA, Rennie D. Does masking author identity improve peer review quality? A randomized controlled trial. PEER Investigators. JAMA. 1998;280:240-242. |

| 16. | Fisher M, Friedman SB, Strauss B. The effects of blinding on acceptance of research papers by peer review. JAMA. 1994;272:143-146. |

| 17. | Knudson DV, Morrow JR Jr, Thomas JR. Advancing kinesiology through improved peer review. Res Q Exerc Sport. 2014;85:127-135. |

| 18. | Ward TN. Peer review: Toward improving the integrity of the process. Neurology. 2015;85:1734-1735. |

| 19. | Feinstein AR. Some ethical issues among editors, reviewers and readers. J Chronic Dis. 1986;39:491-493. |