Published online Oct 21, 2016. doi: 10.3748/wjg.v22.i39.8641

Peer-review started: July 16, 2016

First decision: August 19, 2016

Revised: September 2, 2016

Accepted: September 14, 2016

Article in press: September 14, 2016

Published online: October 21, 2016

A new feature extraction technique for detecting lesions caused by mucosal inflammation in Crohn’s disease, based on the processing of wireless capsule endoscopy (WCE) images, is presented here. More specifically, a novel filtering process, namely Hybrid Adaptive Filtering (HAF), was developed for the efficient extraction of lesion-related structural/textural characteristics from WCE images, by applying Genetic Algorithms to the Curvelet-based representation of the images. Additionally, Differential Lacunarity (DLac) analysis was applied for feature extraction from the HAF-filtered images. The resulting scheme, namely HAF-DLac, incorporates support vector machines for robust lesion recognition. For the training and testing of HAF-DLac, an 800-image database was used, acquired from 13 patients who underwent WCE examinations; for a more extensive evaluation of the performance, the abnormal cases were grouped into mild and severe according to the severity of the depicted lesion. Experimental results, along with a comparison with other related efforts, have shown that the HAF-DLac approach clearly outperforms them in the field of WCE image analysis for automated lesion detection, achieving classification results of up to 93.8% accuracy, 95.2% sensitivity, 92.4% specificity and 92.6% precision. The promising performance of HAF-DLac paves the way for a complete computer-aided diagnosis system that could support physicians’ clinical practice.

Core tip: This paper presents a novel procedure to analyze wireless capsule endoscopy (WCE) images and extract features for the automatic detection of Crohn’s disease lesions. To this end, a hybrid adaptive filtering process is proposed that refines the WCE images prior to feature extraction, using a genetic algorithm to select the most informative curvelet-based components of the images. Differential lacunarity is then employed to extract color-texture features in the YCbCr color space. The experimental results showed that the proposed WCE image analysis scheme is robust and outperforms related approaches in the literature, mainly in the detection of mild lesions.

- Citation: Charisis VS, Hadjileontiadis LJ. Potential of hybrid adaptive filtering in inflammatory lesion detection from capsule endoscopy images. World J Gastroenterol 2016; 22(39): 8641-8657

- URL: https://www.wjgnet.com/1007-9327/full/v22/i39/8641.htm

- DOI: https://dx.doi.org/10.3748/wjg.v22.i39.8641

Wireless capsule endoscopy (WCE)[1] is a novel medical procedure that has revolutionized gastrointestinal (GI) diagnostics by making painless and effective visual inspection of the entire length of the small bowel (SB) a reality. In recent years, the validity of SB WCE in clinical practice has been systematically reviewed[2]. This evidence base clearly shows that WCE is invaluable in evaluating various disorders, such as Crohn’s disease (CD) and mucosal ulcers. CD is a chronic disorder of the GI tract (GT) that may affect the deepest layers of the intestinal walls. In 45% of cases, CD lesions are located in the small intestine. One of the main characteristics of inflammatory bowel diseases, such as CD, is the evolution of extended internal inflammations into ulcers, or open sores, in the GT. CD is not lethal by itself, but the risk of serious complications is high, rendering early diagnosis and treatment essential.

Despite the great advantages of WCE and the revolution it has brought, there are challenging issues to deal with. A WCE system produces more than 55000 images per examination, which are reviewed as a video and require more than one hour of intense labor from the expert[3]. This time-consuming task is a burden, since the clinician has to stay focused and undistracted in front of a monitor for such a long period. Moreover, there is no guarantee that all findings will be detected: it is not rare for abnormal findings to be visible in only one or two frames and thus easily missed by the physician. Automatic inspection and analysis of WCE images is therefore urgently needed, in order to reduce the clinician’s workload and minimize the possibility of missing a lesion due to a lapse in concentration. Motivated by the latter, a number of research efforts on automatic GI content interpretation have been proposed in the literature (see Section “Related Work”).

In this work, we introduce a novel WCE image analysis system for the recognition of lesions caused by mucosal inflammation in CD. The main contributions of this paper are: (1) extending the relatively limited research efforts on CD lesion and ulcer detection; (2) developing the novel Hybrid Adaptive Filtering (HAF) for efficiently isolating the lesion-related WCE image characteristics, by applying genetic algorithms (GA)[4] to the representation of the WCE images in the Curvelet Transform (CT)[5] domain; and (3) examining the performance of the proposed approach, namely HAF-DLac, with respect to lesion severity. Additionally, this work further enhances the effectiveness of the Differential Lacunarity (DLac)-based feature vector presented in[6] and further examines the potential of the YCbCr space for efficient lesion detection.

In the recent literature, the principal research interest towards reducing the examination time of WCE data (more than 75% of the WCE-related published works[7]) concerns the detection of certain disorders of the internal mucous membrane. The major types of pathologies targeted are polyps, bleeding, ulcerations, celiac disease, and CD. As far as inflammatory tissue (i.e., ulcer and CD lesion) detection is concerned, only a small proportion of research efforts (7% for ulcers and 2% for CD[7]) are directed towards it, despite the great importance and prevalence of such disorders. Detecting this kind of eroded tissue is very challenging, since its appearance is hugely diverse. For ulcer detection, a feature vector consisting of curvelet-based rotation invariant uniform local binary patterns (riuLBP) classified by a multilayer perceptron was proposed[8]. Detection rates are encouraging, but the performance is affected by the downsides of LBP. Although riuLBP perform well under illumination variations, they are based on the assumption that the local differences between the central pixel and its neighbors are independent of the central pixel itself, which is not always guaranteed, as the value of the central pixel may also be significant. Moreover, they lack between-scale texture information, which is highly important for medical image analysis. In[9], the authors present a segmentation scheme utilizing log Gabor filters, color texture features, and a support vector machine (SVM) classifier, based on the Hue-Saturation-Value (HSV) space. Classification results are promising, but the dataset is rather limited (50 images) and includes perforated ulcerations that are quite easily detected due to their clear appearance. Additionally, the HSV model suffers from some of the same shortcomings as the RGB model[10].
The authors in[11] propose bag-of-words-based local texture features (LBP and scale-invariant feature transform, SIFT) extracted in RGB space with an SVM classifier, whereas in[12], a saliency map is used along with contour and LBP data. Both approaches are affected by the weaknesses of LBP and SIFT features, which are of narrow use, since such features are often limited to relatively small regions of interest, are susceptible to noise, and exhibit insufficient sensitivity. Along the same lines, the works[6,13,14] investigate the potential of Empirical Mode Decomposition-based structural features extracted from various color spaces, introduce texture features based on color rotation, and perform preliminary research on Curvelet-based lacunarity texture features. The reported results are promising, but the datasets used for validation are rather small. To the best of our knowledge, the main research efforts reported in the literature dealing with the detection of CD lesions are[15-17]. In[15,16], Color Histogram statistics and MPEG-7 features along with Haralick features and a Mean-Shift algorithm are used, whereas in[17] a fusion of MPEG-7 descriptors and SVM classifiers is employed. Even though the classification performance is promising, the MPEG-7 standard was not designed to describe medical images; thus, there are several problems in applying it to medical image analysis. It was developed for multimedia content description; hence, for images, its descriptors describe the overall content of the image and do not allow efficient characterization of local properties or arbitrarily shaped regions of interest. Besides, they are computed within relatively large rectangular regions that are inadequate for describing local medical image properties.

Aside from schemes developed to detect a single abnormality, there are efforts towards broad frameworks that detect multiple abnormalities, such as blood, erythema, polyps, ulcers and villous edema[18-24]. Nevertheless, none of them deals with less straightforward lesions created by CD inflammations. It is unquestionable that detecting multiple abnormalities is important for an overall computer-assisted diagnosis tool, but it is crucial that all abnormalities are equally detected properly. This is extremely challenging and not achieved in any of the aforementioned techniques, where ulcer detection results are rather low. There is no one-size-fits-all approach, particularly for CD lesions and ulcerations that exhibit huge diversity in appearance, with attributes (color, texture, size) varying significantly over severity and position.

From a methodological validation point of view, a serious limitation of the preceding efforts is the use of quite small databases (< 250 images instead of > 500[7]), which are often unbalanced and not described in detail (severity, inclusion of confusing tissue). Moreover, the inclusion of multiple highly similar instances of the same lesion, taken from the very same region of the GT, is a possible source of overfitting and artificially optimistic results. Last but not least, none of the published approaches validates the performance against lesion severity, apart from[17], where lesion severity classification takes place.

In the direction of reducing the reading time of WCE images, apart from the research efforts reported in the literature, some software solutions have been implemented by capsule manufacturers within their WCE image reviewing software. The first software module designed to reduce reading time was the Suspected Blood Indicator[25]. This module analyzes WCE images with respect to color and selects the frames that contain a large number of red pixels, thereby detecting blood or other lesions characterized by red color. The notion behind this module is the same as that of the approaches presented above (and the one proposed in this work), i.e., automatic detection of a specific disorder. However, its performance is substandard in terms of sensitivity (40.9%) and specificity (70.7%)[25]; thus, it cannot be reliably used in clinical practice. Another software tool aimed at reducing reading time is Automatic Mode[26], which groups images with similar semantics based on color, shape and texture features and displays only one representative frame. No automatic detection of disorders, however, takes place; the physician saves time (up to 47%[26]) simply by reviewing fewer images. Nevertheless, lesions that appear in only a few images and small lesions that do not cause significant shifts in the image features are often missed. Consequently, this tool is suggested for use when diffuse or large lesions are expected[26]. Last but not least, two software tools targeting data enhancement have been incorporated in WCE image reading software, namely Blue Mode[27] and Fuji Intelligent Colour Enhancement (FICE)[27]. Blue Mode enhances the images by applying color shifting in the short-wavelength range of visible light (around the wavelength of blue).
FICE, on the other hand, is based on Spectral Estimation Technology[28]: it analyzes an image, estimates spectra at various wavelengths and produces an enhanced image for a given wavelength of light (most often narrowed blue and green). Neither technique provides direct automatic lesion detection; instead, both aim to reduce WCE reading time indirectly. By improving image quality and the representation of the intestinal surface structure, they should, in theory, help doctors identify pathologic changes more easily and, thus, review the whole sequence faster. Although such tools seem to improve the detection accuracy of lesions[27], their impact on WCE reading time in clinical practice has not yet been studied.

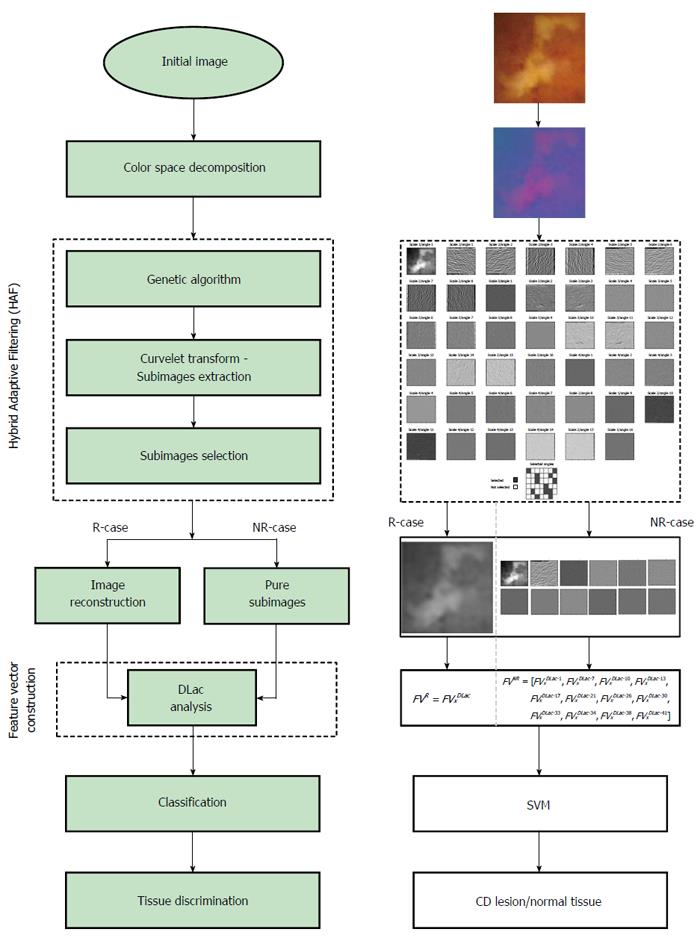

The objective of the HAF-DLac scheme is as follows: given a region of interest (ROI) I within a WCE image, identify whether I corresponds to normal tissue or to a CD lesion. The overall structure of the HAF-DLac scheme, along with a working example, is depicted in Figure 1. After a pre-processing stage, where the RGB image is converted to YCbCr space and the chromatic channels are extracted, the WCE image is fed to the HAF section of the HAF-DLac scheme. YCbCr space was selected because it is a perceptually uniform color space that separates color from brightness information and overcomes the disadvantage of high correlation between the RGB channels[29]. The role of HAF is to isolate the CD lesion-related WCE image characteristics, facilitating the subsequent feature vector extraction. To achieve this, HAF applies a GA to the representation of WCE images in the Curvelet domain, where the image is decomposed into a series of Curvelet-based sub-images at various scales and orientations. Using an energy-based or a Lacunarity curve gradient-based fitness function, the GA then selects the optimum sub-images, i.e., those most related to the CD lesion characteristics. The HAF output consists of the selected sub-images, which can either be combined through a reconstruction process to produce a reconstructed image (R-case), or used directly without reconstruction (NR-case). In both scenarios, the HAF output is used as input to the DLac section of the HAF-DLac scheme. There, DLac-based analysis is performed, resulting in the efficient extraction of a feature vector (FVDLac) corresponding to the R- or NR-case (FVR and FVNR, respectively), which is forwarded to SVM-based classification.
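The pre-processing step can be illustrated with a minimal sketch. The text does not state which YCbCr variant was used, so this assumes the full-range ITU-R BT.601 (JPEG/JFIF) coefficients with 8-bit inputs; the function names `rgb_to_ycbcr` and `split_channels` are hypothetical.

```python
# Sketch of the RGB -> YCbCr pre-processing step.
# Assumption: full-range ITU-R BT.601 (JPEG/JFIF) coefficients, 8-bit RGB input.


def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to (Y, Cb, Cr), clipped to [0, 255]."""
    y = 0.299 * r + 0.587 * g + 0.114 * b            # luma (brightness)
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b  # blue-difference chroma
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b  # red-difference chroma

    def clip(v):
        return min(255.0, max(0.0, v))

    return clip(y), clip(cb), clip(cr)


def split_channels(rgb_image):
    """Return the Y, Cb and Cr planes of an image given as rows of (R, G, B) tuples."""
    y_plane, cb_plane, cr_plane = [], [], []
    for row in rgb_image:
        converted = [rgb_to_ycbcr(*px) for px in row]
        y_plane.append([p[0] for p in converted])
        cb_plane.append([p[1] for p in converted])
        cr_plane.append([p[2] for p in converted])
    return y_plane, cb_plane, cr_plane


# Usage: a 1x2 "image" with a reddish mucosa-like pixel and a white highlight.
y_ch, cb_ch, cr_ch = split_channels([[(200, 90, 80), (255, 255, 255)]])
print(y_ch, cb_ch, cr_ch)
```

Separating luma (Y) from the two chroma planes is what lets each channel be filtered and analyzed independently in the later stages.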

In order to adapt to the WCE image characteristics and focus on those most related to CD lesion information, a hybrid adaptive filtering (HAF) approach was developed. As the term “hybrid” indicates, HAF combines two processing tools, i.e., the Curvelet Transform (CT) and a simple GA optimization concept, to construct a filtering process adapted to specific characteristics of the filtered signal. CT qualifies as a filter bank, since it decomposes an image into sub-images at various scales and orientations that can be interpreted as a pseudo-spectral-spatial representation[30]. To exploit this capability of CT, a new GA-based approach was introduced for the optimized selection of the sub-images that correspond to specific features of an image. The concept of decomposing a WCE image in the curvelet domain and selecting specific informative sub-images was inspired by a previous preliminary study[6], which showed that sub-images at certain scales and angles exhibit high discrimination capabilities. One of the most important modules of this filtering procedure is the fitness function (FF) of the GA, as it is the pivotal criterion according to which the filtering is implemented. Two FFs were employed in this approach: an energy-based FF (EFF) and a Lacunarity curve gradient-based FF (LFF).
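The text does not detail the GA operators, so the following is only a minimal sketch of a generic binary GA of the kind HAF relies on. It assumes tournament selection, single-point crossover, bit-flip mutation and elitism; the function `ga_select`, its parameters and their defaults are hypothetical, not the authors' settings. Each bit of a chromosome marks whether a curvelet sub-image is kept (1) or discarded (0), and the `fitness` callable stands in for the EFF or LFF evaluated over the training images.

```python
import random


def ga_select(n_bits, fitness, pop_size=30, generations=60,
              crossover_rate=0.8, mutation_rate=0.02, seed=0):
    """Return the best binary string found by a simple generational GA.

    Bit k = 1 means "keep curvelet sub-image k"; fitness(bits) -> float,
    higher is better (a stand-in for an EFF- or LFF-style criterion).
    """
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    best = max(population, key=fitness)

    for _ in range(generations):
        scored = [(fitness(ind), ind) for ind in population]

        def tournament():
            # Binary tournament selection: the fitter of two random strings wins.
            (fa, a), (fb, b) = rng.sample(scored, 2)
            return a if fa >= fb else b

        children = [best[:]]  # elitism: keep the best string found so far
        while len(children) < pop_size:
            p1, p2 = tournament(), tournament()
            if rng.random() < crossover_rate:
                cut = rng.randrange(1, n_bits)  # single-point crossover
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Bit-flip mutation.
            child = [1 - bit if rng.random() < mutation_rate else bit
                     for bit in child]
            children.append(child)

        population = children
        generation_best = max(population, key=fitness)
        if fitness(generation_best) > fitness(best):
            best = generation_best
    return best


# Toy usage: recover a hidden 12-bit selection mask.
target = [1, 0, 0, 1, 1, 0, 0, 1, 0, 1, 1, 0]
matches = lambda bits: sum(b == t for b, t in zip(bits, target))
mask = ga_select(len(target), matches)
print(mask, matches(mask))
```

Because the fitness function is passed in, the same search loop serves both filtering variants; only the criterion that scores a candidate selection changes.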

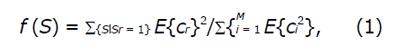

The aim of using EFF was to perform filtering by selecting the sub-images that carry a small share of the image energy. Based on the results of[6], we observed that the sub-images with better performance exhibited lower mean energy than those that achieved worse results. This may be explained by the fact that sub-images with high mean energy contain abrupt and steep structures that do not convey valuable information about the texture of normal and eroded mucosa. Conversely, low-energy sub-images are free from misleading content and are more likely to contain CD lesion-based information. This is illustrated in Figure 2A, which shows the decomposition of an ulcer image (Y channel) at scale 3, with 8 angles. Sub-images at angles 1, 4, 5 and 8, which contain less mean energy than the rest (Figure 2B), are more likely to exhibit informative texture content, since they display an apparently informative distribution of non-zero pixels and do not contain sharp changes. In contrast, the sub-images at angles 2, 3, 6 and 7 exhibit intense variations at their borders, reflected in their greater intensity range, which may conceal the delicate textural patterns and hinder efficient feature extraction. The formula used for the EFF is:

Math 10

where $S$ is the binary string of 1s and 0s encoding the sub-image selection, $S_{r=1}$ is the set of the elements of $S$ with value 1, and $c_i$ represents the sub-image at angle $i$.
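The exact EFF expression is not reproduced in this text, so the sketch below stops at the quantities such a criterion works with: the mean energy of each sub-image $c_i$ (as compared in Figure 2B) and the share of energy carried by the selected set $S_{r=1}$. Defining energy as the mean squared coefficient is an assumption, and the helper names are hypothetical.

```python
def mean_energy(sub_image):
    """Mean energy of one curvelet sub-image c_i (assumed: mean squared coefficient)."""
    values = [v for row in sub_image for v in row]
    return sum(v * v for v in values) / len(values)


def energy_share(S, sub_images):
    """Fraction of the total mean energy carried by the sub-images selected in S (S_{r=1})."""
    energies = [mean_energy(c) for c in sub_images]
    total = sum(energies)
    selected = sum(e for bit, e in zip(S, energies) if bit == 1)
    return selected / total if total else 0.0


def angles_by_energy(sub_images):
    """1-based angle indices sorted from lowest to highest mean energy."""
    energies = [mean_energy(c) for c in sub_images]
    return [i + 1 for i in sorted(range(len(energies)), key=energies.__getitem__)]


# Usage with three toy 2x2 "sub-images" (energies 1.0, 4.0 and 0.25).
subs = [[[1, 1], [1, 1]], [[2, 2], [2, 2]], [[0, 0], [0, 1]]]
print(angles_by_energy(subs), energy_share([1, 0, 1], subs))
```

A low energy share for the selected bits corresponds to the "minority of the energy" behavior the EFF is meant to reward.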

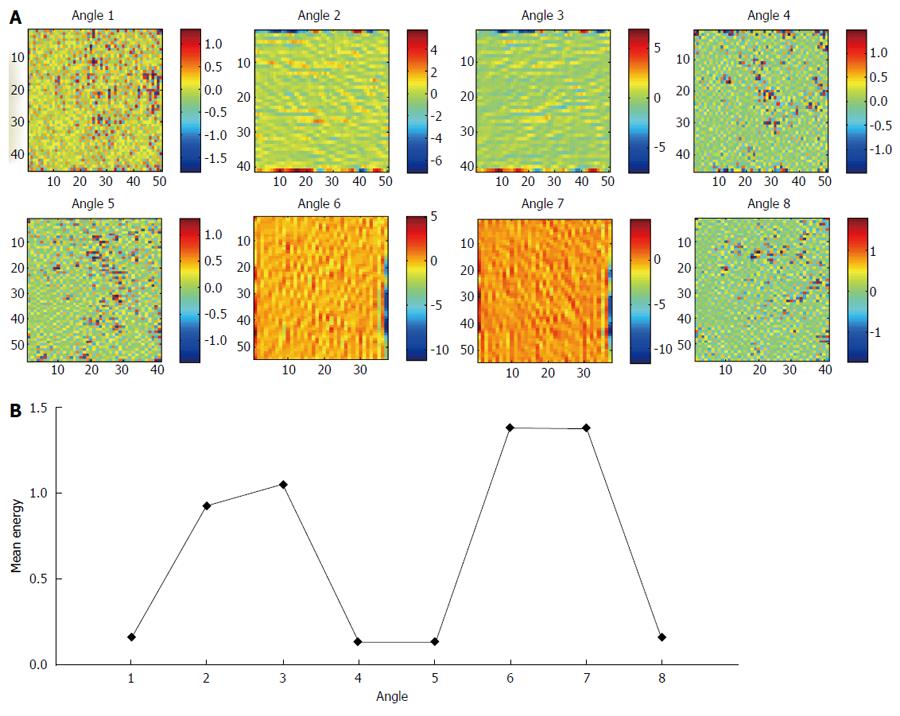

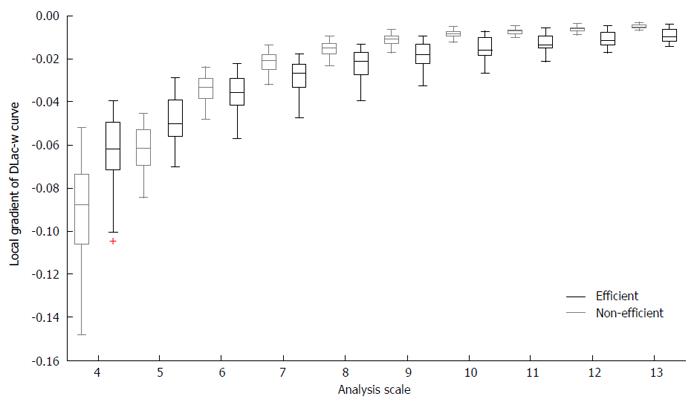

Apart from the EFF, a second approach was attempted, incorporating the gradient of the estimated DLac-w curve into the FF. As mentioned before, DLac is a scale-dependent measure of heterogeneity used to characterize and discriminate textures and patterns[31]. Consequently, an image with uniform patterns yields lower DLac values than an image with arbitrary and irregular patterns. The scale of the DLac analysis is determined by the size w of the gliding window (see Section “DLac-Based Feature Vector”). The DLac-w curve can be considered a multi-scale description of structural patterns, and its gradient can reveal the existence of specific textures and structures. For example, if an image contains microstructures with moderate differentiation across a variety of observation scales, the gradient of its DLac-w curve is expected to be lower than that of an image with more abrupt and irregular structures, which appear rather differently from various scale perspectives.

The aim of using LFF was to capture the variations in the structural and textural characteristics of WCE images. Images with a small DLac-w curve gradient may correspond to structures of normal and eroded mucosa, while DLac-w curves with steeper slopes may account for distracting content. Thus, given the capability of the DLac-w curve to reveal the presence of valuable or meaningless content, DLac-based filtering would boost the CD lesion-related structural information in the initial WCE images. This concept is validated by observations based on the results of[6], where the sub-images that provided better performance exhibited DLac-w curve gradients divergent from those of the sub-images that yielded worse results. Figure 3 depicts the boxplot of the local gradient of DLac-w curves vs the analysis scale for efficient (black) and non-efficient (gray) sub-images in the Curvelet domain, derived from 30 randomly selected WCE images depicting CD lesions or normal tissue. Figure 3 clearly shows that non-efficient sub-images tend to exhibit steeper slopes at smaller scales and gentler slopes at larger scales. The gradient of the DLac curve at scale $i$, $Gr(i)$, is calculated as the difference $\Lambda(i+1) - \Lambda(i-1)$. The formula used for the LFF is expressed by

Math 11

since the gradient at the first two scales has to be high and the gradient at the remaining scales has to be low (based on Figure 3).
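The LFF expression itself is not reproduced in this text, so the sketch below only computes the gradient values it is built from, $Gr(i) = \Lambda(i+1) - \Lambda(i-1)$, for a DLac-w curve stored as a list. The function name `dlac_gradient` and the 1-based indexing convention are assumptions.

```python
def dlac_gradient(curve):
    """Local gradient Gr(i) = Λ(i+1) − Λ(i−1) of a DLac-w curve.

    curve[k] holds Λ at scale index k+1 (1-based, as in the text). Only
    interior scales have both neighbours, so keys run over i = 2 .. n−1.
    """
    return {i: curve[i] - curve[i - 2] for i in range(2, len(curve))}


# Usage: a decaying toy DLac-w curve with five scales.
print(dlac_gradient([1.0, 0.8, 0.7, 0.65, 0.63]))
```

The first and last scales are left out because the central difference needs a neighbor on each side.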

The second part of the proposed HAF-DLac scheme is DLac analysis, which aims to efficiently extract FVDLac. As noted before, CD lesions exhibit widely diverse appearance; thus, a robust tool capable of multi-scale, translation-invariant texture analysis is required. DLac is such a tool, attractive for its simple calculation and precision, and it has previously been used successfully for WCE image analysis[6,14]. The rationale for using DLac[32] is its capability of revealing both sharp and slight changes in neighboring pixels (which characterize CD lesion texture), since it does not use thresholding, unlike riuLBP, a very common feature extraction tool whose thresholding conceals the magnitude of changes. The downsides of riuLBP and other feature extraction approaches are presented in Section “Related Work”. Moreover, DLac is tolerant to: (1) non-uniform illumination (very common in WCE images), due to the differential calculation; and (2) rotation, since the pixel arrangement within the gliding box is irrelevant. In general, DLac surpasses both the simple statistical (e.g., Haralick features, co-occurrence matrix, etc.) and the more advanced structural (e.g., riuLBP, textons, texture spectrum, etc.) texture approaches, because it relies neither on plain single-scale statistical analysis of the raw pixel intensities nor on predefined structural patterns. Instead, it relies on the statistical analysis of pseudo-patterns (box mass) defined by the data itself, at multiple scales, while also providing between-scale information. For the above reasons, DLac is expected to produce powerful features from the HAF-enhanced WCE images and achieve advanced classification results, as evidenced by the experimental results.

To exploit the multi-scale analysis advantage of DLac, the value Λ(w,r) [see (1)] is not calculated for a single set of parameters. In this work, we calculate Λ vs w with r held constant, even though initial approaches suggested the opposite[32]. This technique[33,34] is adopted because w is the primary parameter affecting the scale of the analysis, since it determines the size of the image region over which the box mass is calculated. According to[31,33], the larger the area over which the box mass is calculated, the coarser the scale of the Lac analysis. In contrast, the r value affects the scale of the DLac analysis only to a certain degree, by determining the size of the neighborhood over which the differential height is calculated and, consequently, the sensitivity to intensity variations. Thus, our approach can identify slight variations in neighboring pixels (by selecting a small value for r) while analyzing structural patterns at different scales. Moreover, the Λ(w) curve is normalized to its value at wmin, Λ(wmin), yielding ΛN(w), in order to secure an identical reference level and extract more efficient information[35].
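Equation (1) is not part of this excerpt, so the following is a simplified sketch rather than the exact estimator. It assumes that each r×r gliding box contributes its differential height (max minus min intensity), that the box mass M of a w×w gliding window is the sum of the heights of the boxes lying fully inside it, and that Λ(w, r) = E[M²]/E[M]² over all window positions. Following the parameter settings described later in the text, r is fixed, w runs from r + 1 to the smaller image side, and the curve is divided by Λ(wmin). All function names are hypothetical.

```python
def box_heights(img, r):
    """Differential height (max − min intensity) of every r×r gliding box."""
    rows, cols = len(img), len(img[0])
    heights = []
    for i in range(rows - r + 1):
        row = []
        for j in range(cols - r + 1):
            box = [img[y][x] for y in range(i, i + r) for x in range(j, j + r)]
            row.append(max(box) - min(box))
        heights.append(row)
    return heights


def dlac(img, w, r=3):
    """Λ(w, r) = E[M²] / E[M]² over all w×w gliding-window positions.

    Assumption: the box mass M of a window is the sum of the differential
    heights of all r×r boxes lying entirely inside that window.
    """
    h = box_heights(img, r)
    rows, cols = len(img), len(img[0])
    span = w - r + 1  # number of box positions along each window side
    masses = [sum(h[i][j] for i in range(y, y + span) for j in range(x, x + span))
              for y in range(rows - w + 1) for x in range(cols - w + 1)]
    mean = sum(masses) / len(masses)
    if mean == 0:
        return float("nan")  # flat region: lacunarity undefined in this sketch
    return (sum(m * m for m in masses) / len(masses)) / (mean * mean)


def normalized_dlac_curve(img, r=3):
    """ΛN(w) = Λ(w) / Λ(w_min) for w = r+1 .. w_max (w_max = smaller image side)."""
    w_min, w_max = r + 1, min(len(img), len(img[0]))
    curve = [dlac(img, w, r) for w in range(w_min, w_max + 1)]
    return [v / curve[0] for v in curve]


# Usage on a small synthetic 12x12 texture.
img = [[(x * 7 + y * 13) % 17 for x in range(12)] for y in range(12)]
print(normalized_dlac_curve(img))
```

Since E[M²] is never smaller than E[M]², every Λ value is at least 1, and at w = wmax there is a single window, so Λ(wmax) = 1 before normalization.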

The decay of ΛN(w) as a function of window size follows characteristic patterns for random, self-similar, and structured spatial arrangements, and lacunarity functions can provide a framework for identifying such differences. Thus, the ΛN(w) curve could itself form the FV. To reduce the dimension of the feature space, however, ΛN(w) is modelled by another function, L(w). Since the normalized DLac-w curves resemble a hyperbola, the function

$$L(w) = \frac{b}{w^{a}} + c, \quad w \in [w_{\min}, w_{\max}] \qquad (3)$$

was chosen to model the ΛN(w) curves[35]. Parameter $a$ portrays the convergence of L(w), $b$ represents the concavity of the hyperbola and $c$ is the translational term. The best fit of ΛN(w) by the model L(w) is computed as the solution of a least squares problem in which $a$, $b$, $c$ are the unknowns[36]. Parameters $a$, $b$, $c$ embody the global behaviour of the ΛN(w) curve, i.e., the DLac-based texture features of a WCE image. Another way to reduce the feature space dimension established by the DLac curve is to use six statistical measures calculated on the ΛN(w) curve[8,37]: mean ($\mathrm{MN} = E[X]$), standard deviation ($\mathrm{STD} = (E[(X-\mu)^2])^{1/2}$), entropy ($\mathrm{ENT} = -\sum_i p_i \log p_i$), energy ($\mathrm{ENG} = E[X^2]$), skewness ($r_3 = E[((X-\mu)/\sigma)^3]$, a measure of the asymmetry of the probability distribution), and kurtosis ($r_4 = E[((X-\mu)/\sigma)^4]$, a descriptor of the shape of the probability distribution), where $p_i$ is the probability of value $x_i$, and $X$ is a random variable with mean $\mu$ and standard deviation $\sigma$.
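The text solves the least squares problem for (a, b, c) jointly without naming a solver. The sketch below swaps in a simple alternative: for a fixed exponent a, the model b·w^(−a) + c is linear in b and c, which therefore have a closed-form solution, while a is found by a grid search. The grid range (0, 5] and the function name `fit_hyperbola` are assumptions.

```python
def fit_hyperbola(ws, ys, a_grid=None):
    """Least-squares fit of L(w) = b / w**a + c to the points (ws, ys).

    For a fixed exponent a the model is linear in (b, c), so b and c come
    from ordinary linear regression on x = w**(-a); a is grid-searched.
    """
    if a_grid is None:
        a_grid = [0.01 * k for k in range(1, 501)]  # a in (0, 5]
    n = len(ws)
    best = None
    for a in a_grid:
        xs = [w ** -a for w in ws]
        mx, my = sum(xs) / n, sum(ys) / n
        sxx = sum((x - mx) ** 2 for x in xs)
        if sxx == 0:
            continue
        b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
        c = my - b * mx
        sse = sum((b * x + c - y) ** 2 for x, y in zip(xs, ys))
        if best is None or sse < best[0]:
            best = (sse, a, b, c)
    _, a, b, c = best
    return a, b, c


# Usage: recover the parameters of a synthetic curve sampled at w = 4 .. 30.
ws = list(range(4, 31))
ys = [2.0 / w ** 1.5 + 0.3 for w in ws]
print(fit_hyperbola(ws, ys))
```

The grid search trades a little precision in a for robustness: it cannot diverge and needs no starting guess, which a general nonlinear solver would.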

To draw more conclusive results about the efficiency of the DLac-based FV, five different types of FV are constructed:

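$$\mathrm{FV1}_{\mathrm{DLac}} = [\Lambda_N(w_{\min}+1), \ldots, \Lambda_N(w_{\min}+5)] \qquad (4)$$

$$\mathrm{FV2}_{\mathrm{DLac}} = [a, b, c] \qquad (5)$$

$$\mathrm{FV3}_{\mathrm{DLac}} = [a, b, c, \Lambda_N(w_{\min}+1), \Lambda_N(w_{\min}+2), \Lambda_N(w_{\min}+3)] \qquad (6)$$

$$\mathrm{FV4}_{\mathrm{DLac}} = [\mathrm{MN}, \mathrm{STD}, \mathrm{ENT}, \mathrm{ENG}, r_3, r_4] \qquad (7)$$

$$\mathrm{FV5}_{\mathrm{DLac}} = [\mathrm{FV3}_{\mathrm{DLac}}, \mathrm{FV4}_{\mathrm{DLac}}] \qquad (8)$$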
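Equations (4)-(8) can be assembled as in the sketch below, given a normalized curve ΛN(w) (with ΛN(wmin) as its first element) and fitted parameters a, b, c. The text does not say how the probabilities $p_i$ for the entropy are estimated, so this assumes a 10-bin equal-width histogram of the curve values and natural logarithms; moments are population moments. Function names are hypothetical.

```python
import math


def curve_statistics(values, n_bins=10):
    """[MN, STD, ENT, ENG, r3, r4] of a ΛN(w) curve, i.e., FV4DLac.

    Assumptions: population moments; ENT uses an n_bins equal-width
    histogram of the curve values and natural logarithms.
    """
    n = len(values)
    mu = sum(values) / n
    sigma = math.sqrt(sum((x - mu) ** 2 for x in values) / n)
    eng = sum(x * x for x in values) / n
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0  # constant curve -> everything in bin 0
    counts = [0] * n_bins
    for x in values:
        counts[min(int((x - lo) / width), n_bins - 1)] += 1
    ent = -sum((k / n) * math.log(k / n) for k in counts if k)
    if sigma > 0:
        r3 = sum(((x - mu) / sigma) ** 3 for x in values) / n
        r4 = sum(((x - mu) / sigma) ** 4 for x in values) / n
    else:
        r3 = r4 = 0.0
    return [mu, sigma, ent, eng, r3, r4]


def build_feature_vectors(curve_n, a, b, c):
    """FV1..FV5 of Eqs. (4)-(8); curve_n[0] is ΛN(w_min)."""
    if len(curve_n) < 6:
        raise ValueError("need ΛN(w) up to at least w_min + 5")
    fv1 = curve_n[1:6]               # ΛN(w_min+1) .. ΛN(w_min+5)
    fv2 = [a, b, c]                  # global behaviour only
    fv3 = [a, b, c] + curve_n[1:4]   # global + local: ΛN(w_min+1) .. ΛN(w_min+3)
    fv4 = curve_statistics(curve_n)
    fv5 = fv3 + fv4                  # augmented vector, 12 features
    return fv1, fv2, fv3, fv4, fv5


# Usage with a toy normalized curve and hypothetical fitted parameters.
fvs = build_feature_vectors([1.0, 0.9, 0.8, 0.75, 0.72, 0.7, 0.69], 1.2, 0.5, 0.6)
print([len(fv) for fv in fvs])
```

The 12-feature length of FV5DLac is the per-sub-image figure used later when estimating the size of the combined-channel NR-case vector.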

FV1DLac does not use the entire DLac curve, in order to avoid the “curse of dimensionality” and because the length of the curve depends on the size of the input image/sub-image (for more details see Section “Parameter Setting, HAF Realization and FV Construction”). FV3DLac aims to express the glocal, i.e., both global (parameters a, b, c) and local (values of ΛN(w)), behavior of the curve. As a previous study[6] has shown, this is quite an efficient way to replace the lengthy DLac-w curve without omitting crucial information. Finally, FV5DLac constitutes an augmented version of FV3DLac in terms of the representation of the global DLac-w curve behavior.

A fundamental prerequisite for developing a robust and efficient algorithm for WCE-based lesion detection is a sufficiently rich database on which the algorithm can be tested. Unfortunately, the majority of related approaches (59%) are based on databases of fewer than 500 images[7]. Using a limited number of images, or highly correlated images, can lead to overfitting and, consequently, to unrealistically optimistic performance.

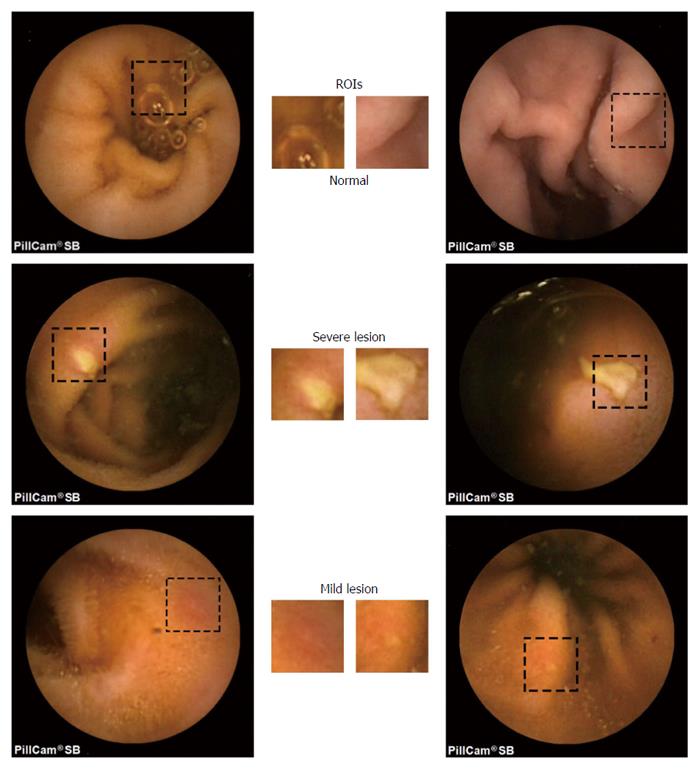

The WCE image database used in this study contains 400 frames depicting CD-related lesions and 400 lesion-free frames, acquired from 13 patients who underwent a WCE examination. The exams were rated twice by two clinicians, and only the images assigned to the same class in all four ratings were selected. This procedure allowed us to assess the inter-/intra-rater variability and to acquire a highly confident dataset. Moreover, the physicians, upon mutual agreement, manually defined an ROI in each image. Some characteristic examples are given in Figure 4. The CD lesion images were manually annotated as mild (152 samples) or severe (248 samples) cases, based on the size and severity of the lesion. The mild case includes lesions at an early stage with vague boundaries that are difficult to recognize (Figure 4, bottom), whereas the severe case contains lesions that are clearly shaped (Figure 4, middle). This distinction was made in order to assess the performance of the proposed scheme extensively with respect to the difficulty of lesion detection. Additionally, a “total” scenario containing all lesion images is examined, so as to assess the performance from an overall perspective. The 400 abnormal images were taken from 400 different lesion events to achieve the lowest possible similarity. The normal part of the dataset contains frames that depict both simple and confusing tissue (folds, villi, bubbles, intestinal juices/debris), in order to create realistic conditions and avoid artificially optimistic results.

To further validate the efficacy of the proposed scheme, two open WCE databases are used, namely CapsuleEndoscopy.org (CaEn)[38] and KID[39-41]. The CaEn database contains 6 normal and 22 CD-related lesion images (collected using the PillCam SB from Given Imaging, Israel), while the KID database contains 60 normal (30 with confusing intestinal content) and 14 CD-related lesion images (collected using the MiroCam system, IntroMedic Co, South Korea).

As far as the CT is concerned, two parameters have to be determined, i.e., the number of analysis scales and the number of analysis angles at the second scale. It is common in related applications to use three to four scales for the analysis[8]. One of the main factors determining the number of scales is the input data to be processed: as the number of scales increases, the size of the computed sub-images decreases, which may have negative effects. In our approach, after exhaustive trials, we opted for four analysis scales. Each scale employs a certain number of angles, which differs from scale to scale. It has been shown[6] that, to avoid data redundancy and complexity, the optimum number of angles at the second scale is 8.

Regarding the implementation of DLac analysis, Λ(w) is calculated for a gliding box size of r = 3 pixels and gliding window sizes w = 4 to wmax, where wmax is the minimum dimension of the input data. We did not choose a fixed value for wmax because the curvelet sub-images vary greatly in size and we wanted DLac curves as long as possible, so as to obtain more efficient FVs.

Regarding the gliding box, its size has to be small so that it can recognize the slight local spatial variations that characterize lesion tissue. The value r = 3 pixels was selected after exhaustive experiments. As for the gliding window, its size has to range from small to large values so as to capture both micro- and macro-structures and achieve multiscale information extraction. The minimum gliding window size adopted here is the smallest feasible value, i.e., r + 1, so as not to miss information at the finest possible analysis scale.

To implement the curvelet sub-image selection via HAF, 25% of the dataset was used: from the 800 images in total, we randomly selected 100 normal and 100 abnormal samples, regardless of their severity class. For each GA generation, the FF value was calculated over this whole subset of 200 images per chromatic channel. The selected sub-images per chromatic channel and FF method were found to be (scale/angle):

{[2/(5, 6, 8), 3/(4, 5, 8, 9, 12, 13), 4/(1, 4, 5, 10, 13)]|Y; [2/(2, 6), 3/(4, 5, 9, 12, 13, 16), 4/(4, 8, 13, 16)]|Cb; [2/(1, 5, 6), 3/(1, 4, 9, 13), 4/(1, 5, 9, 13, 16)]|Cr}|EFF, and

{[1/(1), 2/(6, 7, 8), 3/(8, 9, 12, 13), 4/(1, 2, 3, 7, 9, 13)]|Y; [1/(1), 2/(2, 6), 3/(9, 12), 4/(1, 4, 8, 9, 13, 16)]|Cb; [1/(1), 2/(6), 3/(1, 4, 8, 12), 4/(1, 5, 8, 9, 13, 16)]|Cr}|LFF.
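The 200-image hold-out used for sub-image selection can be sketched as follows: 100 normal and 100 lesion images are drawn at random, ignoring severity, and everything not drawn is left for classification. The integer image IDs, the seed and the function name are hypothetical.

```python
import random


def split_for_haf(normal_ids, lesion_ids, n_per_class=100, seed=0):
    """Hold out n_per_class normal + n_per_class lesion images for HAF sub-image selection.

    Lesion images are drawn regardless of severity (mild/severe); every image
    not drawn is returned as the classification subset.
    """
    rng = random.Random(seed)
    haf_subset = rng.sample(normal_ids, n_per_class) + rng.sample(lesion_ids, n_per_class)
    held_out = set(haf_subset)
    classification_subset = [i for i in normal_ids + lesion_ids if i not in held_out]
    return haf_subset, classification_subset


normal = list(range(400))        # hypothetical IDs of the 400 lesion-free frames
lesions = list(range(400, 800))  # hypothetical IDs of the 400 CD-lesion frames
haf_ids, clf_ids = split_for_haf(normal, lesions)
print(len(haf_ids), len(clf_ids))  # → 200 600
```

Keeping the two subsets disjoint means the sub-image selection never sees the images later used to estimate classification performance.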

FVxDLac (x = 1, ..., 5) was calculated for each individual chromatic channel and for the combination of all channels, under each feature extraction approach (R-/NR-case). For the combined channel scenario (Section “Hybrid Adaptive Filtering”), the NR-case was not taken into consideration, as it would lead to a lengthy FV and the classification procedure would suffer from the “curse of dimensionality”. For example, for FV5DLac and the EFF case, the resulting FV would contain 456 features (12 features per sub-image × 38 sub-images).
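The feature count quoted above can be checked directly from the two selection lists. The sketch below transcribes them, counts the selected sub-images per channel and multiplies by the 12 features of FV5DLac. The LFF figure is derived by the same arithmetic and is not reported in the text.

```python
# Selected (scale: angles) per channel, transcribed from the two selection lists.
EFF_SELECTION = {
    "Y": {2: (5, 6, 8), 3: (4, 5, 8, 9, 12, 13), 4: (1, 4, 5, 10, 13)},
    "Cb": {2: (2, 6), 3: (4, 5, 9, 12, 13, 16), 4: (4, 8, 13, 16)},
    "Cr": {2: (1, 5, 6), 3: (1, 4, 9, 13), 4: (1, 5, 9, 13, 16)},
}
LFF_SELECTION = {
    "Y": {1: (1,), 2: (6, 7, 8), 3: (8, 9, 12, 13), 4: (1, 2, 3, 7, 9, 13)},
    "Cb": {1: (1,), 2: (2, 6), 3: (9, 12), 4: (1, 4, 8, 9, 13, 16)},
    "Cr": {1: (1,), 2: (6,), 3: (1, 4, 8, 12), 4: (1, 5, 8, 9, 13, 16)},
}
FEATURES_PER_SUBIMAGE = 12  # length of FV5DLac = 6 (FV3DLac) + 6 (FV4DLac)


def count_subimages(selection):
    """Number of selected sub-images per channel and in total."""
    per_channel = {ch: sum(len(angles) for angles in scales.values())
                   for ch, scales in selection.items()}
    return per_channel, sum(per_channel.values())


for name, sel in (("EFF", EFF_SELECTION), ("LFF", LFF_SELECTION)):
    per_channel, total = count_subimages(sel)
    print(name, per_channel, total, total * FEATURES_PER_SUBIMAGE)
# EFF -> 38 sub-images, 456 features; LFF -> 37 sub-images, 444 features
```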

The classification phase of the HAF-DLac scheme is performed by an SVM classifier with a radial basis function (RBF) kernel[42]. SVMs have been used extensively in pattern recognition applications related to WCE image analysis[6,9,11,17], showing superior performance. The images that did not contribute to the sub-image selection were used for the classification procedure. To obtain a reliable estimate of generalization performance, 3-fold cross-validation was applied 100 times, and the average accuracy (ACC), sensitivity (SENS), specificity (SPEC), and precision (PREC) values were estimated.
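The evaluation protocol can be sketched without committing to a particular SVM library: the classifier is passed in as a `fit_predict(X_train, y_train, X_test)` callable, the 3-fold split is reshuffled on every repetition, and the four metrics are averaged over all folds. Treating the lesion class as positive (label 1), using plain rather than stratified folds, and averaging per fold are assumptions; the helper names are hypothetical. With scikit-learn installed, an RBF-kernel SVM could be plugged in as `lambda Xtr, ytr, Xte: SVC(kernel="rbf").fit(Xtr, ytr).predict(Xte)` after `from sklearn.svm import SVC`.

```python
import random


def binary_metrics(y_true, y_pred):
    """ACC, SENS, SPEC and PREC, taking lesion (label 1) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num, den):
        return num / den if den else float("nan")

    return {"ACC": ratio(tp + tn, len(pairs)), "SENS": ratio(tp, tp + fn),
            "SPEC": ratio(tn, tn + fp), "PREC": ratio(tp, tp + fp)}


def repeated_kfold(n, k=3, repeats=100, seed=0):
    """Yield (train, test) index lists for `repeats` random k-fold partitions."""
    rng = random.Random(seed)
    for _ in range(repeats):
        idx = list(range(n))
        rng.shuffle(idx)
        folds = [idx[f::k] for f in range(k)]
        for f in range(k):
            train = [i for g in range(k) if g != f for i in folds[g]]
            yield train, folds[f]


def cross_validate(X, y, fit_predict, k=3, repeats=100, seed=0):
    """Average the four metrics over all folds of all repetitions."""
    sums = {"ACC": 0.0, "SENS": 0.0, "SPEC": 0.0, "PREC": 0.0}
    n_runs = 0
    for train, test in repeated_kfold(len(y), k, repeats, seed):
        y_pred = fit_predict([X[i] for i in train], [y[i] for i in train],
                             [X[i] for i in test])
        for key, value in binary_metrics([y[i] for i in test], y_pred).items():
            sums[key] += value
        n_runs += 1
    return {key: total / n_runs for key, total in sums.items()}
```

Passing the classifier as a callable keeps the repetition and averaging logic independent of how the SVM and its kernel parameters are configured.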

The performance of the proposed scheme is evaluated by applying the CD lesion detection technique to the experimental dataset. To this end, results are presented for every individual channel (Y, Cb, Cr) and their combination, under both HAF-DLac implementation scenarios (R-/NR-case) and all severity cases (mild, severe, total).

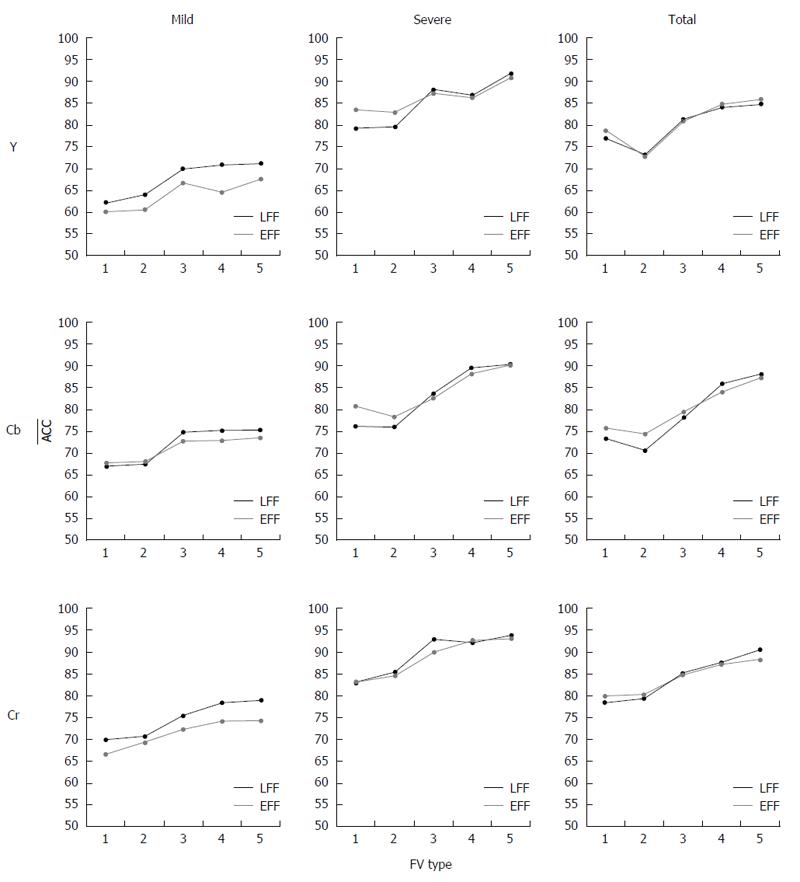

For the individual channel case, ACC values were calculated for R and NR cases, for all severity scenarios and FVs.

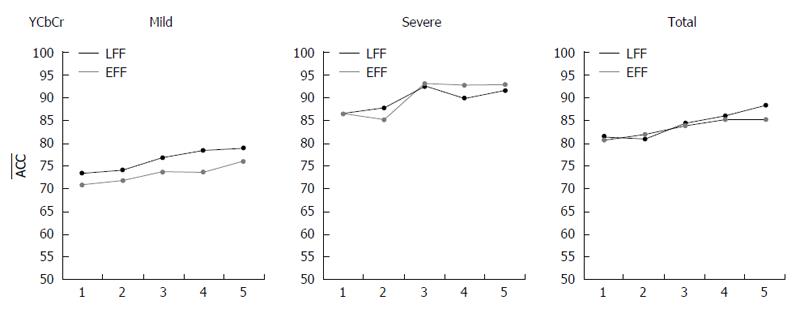

Reconstruction case: For the R-case, the ACC values for all individual channels and all CD lesion cases are depicted in Figure 5 for the two FFs used and the five types of FV. For the mild lesion case, the augmented FV (FV5DLac) extracted from the Cr channel clearly provides the best performance (78.8% ACC). Channel Cb achieves 3.7 percentage points (pp) lower ACC than Cr, whereas channel Y delivers the worst detection accuracy (71.2%) for the same FV. These results refer to the LFF case. In contrast, the EFF scenario exhibits deteriorated performance for all channels. This is explained by the fact that LFF-based filtering, owing to the intuitive characteristics of DLac, discerns and boosts more efficiently the textural structures of the mucosa that differ only slightly in the case of mild lesions. For severe lesions, the detection accuracy of HAF-DLac is, as expected, significantly higher than in the mild lesion scenario for all channels: ACC is 91.5%, 90.3% and 93.8% for the Y, Cb and Cr channels, respectively, for the LFF case and FV5DLac. Given the easier task of discriminating severe lesions, EFF-based filtering provides results that differ only slightly (-0.2 to -0.9 pp) from the LFF ones, as opposed to the mild lesion case, where the difference is -1.6 to -4.8 pp. Finally, in the total scenario, the Cr channel again provides the best performance (90.5% ACC), followed by Cb (88.0% ACC) for FV5DLac and LFF. The Y channel achieves 85.7% ACC for the same FV, but for the EFF case.

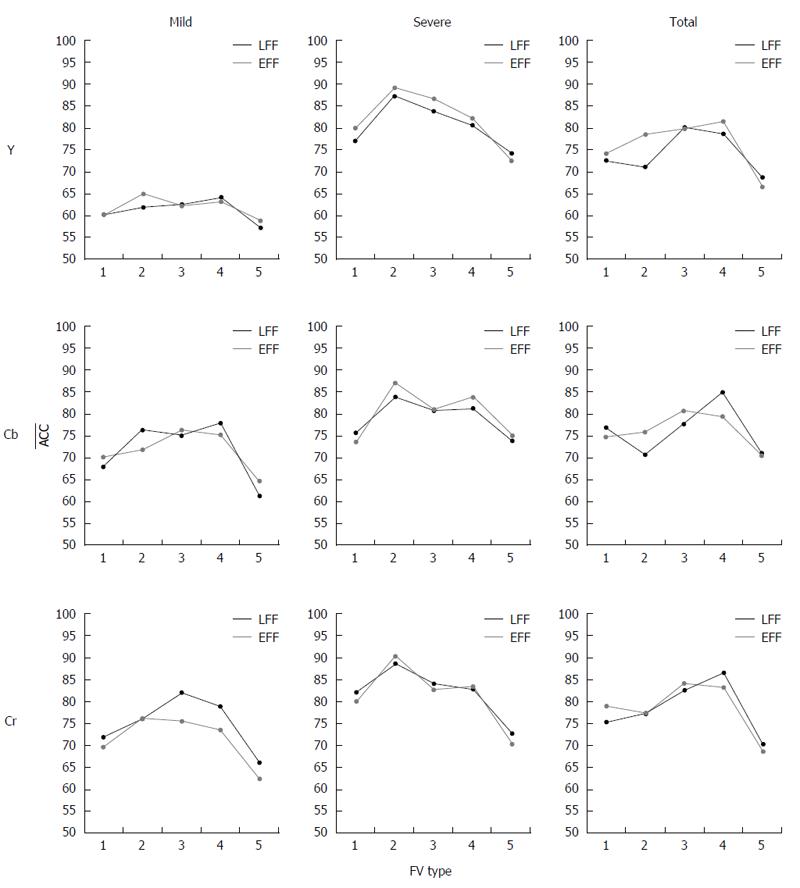

No-reconstruction case: The procedure followed in the R-case was also adopted in the NR-case. Figure 6 shows the ACC values for all individual channels. As in the R-case, the Cr channel and the LFF approach exhibit the best ACC for the majority of cases. For mild lesions, the highest ACC values achieved are 64.8% for {EFF, FV2DLac, Y}, 77.8% for {LFF, FV4DLac, Cb} and 81.2% for {LFF, FV3DLac, Cr}. For severe lesions, the best ACC values are 89.1%, 87.0% and 90.2% for {EFF, FV2DLac} in the Y, Cb and Cr channels, respectively. Finally, for the total CD lesion case, the highest ACC value is 86.3% for the Cr channel, followed by the Cb channel with 84.8% ACC for {LFF, FV4DLac}. The worst performance is delivered by the Y channel, which achieves 81.5% ACC for {EFF, FV4DLac}.

Combined channel case (R-case only): The evaluation of HAF-DLac for the combined channel data followed the same practice as for the individual channel data. The ACC values for the combination of the Y, Cb and Cr channels and all CD lesion scenarios are depicted in Figure 7 for the two FFs used [LFF (black line) and EFF (gray line)] and the five types of FV. For mild lesions, the highest classification ACC value is 79.0% for the LFF approach and 75.9% for the EFF approach, for FV5DLac. For severe lesions, the ACC values increase by 13.7 pp and 17.3 pp (i.e., to 92.7% and 93.2%) for LFF and EFF, respectively, for FV3DLac. Finally, in the total case, LFF achieves 88.3% ACC for FV5DLac, whereas EFF provides 85.2% ACC for the same FV.

Table 1 presents the best ACC values in the format “percent (R/NR-case - FF - FV type)” for both the individual and combined channel cases and all three severity scenarios, from an overall perspective. The best mean results for each severity scenario are formatted in bold. The SENS-SPEC values for Cr-mild, Cr-severe and Cr-total are 76.6%-85.8%, 95.2%-92.4% and 91.8%-89.2%, respectively. Moreover, for comparison purposes, Table 2 presents the best classification results of the proposed scheme for all severity scenarios, together with the results of some of the most promising schemes in the literature, namely CurvLac[6], CurvLBP[8] and ECT[17]. In[6], the authors used curvelet-based Lac features extracted from single or combined sub-images in the curvelet domain, whereas in[8] curvelet-based LBP is applied for ulcer recognition, and in[17] MPEG-7-based edge, color and texture features are used to detect CD lesions. Finally, Table 3 presents the classification results obtained by applying the above approaches to the open databases CaEn and KID.

| Channel | Mild | Severe | Total |
| --- | --- | --- | --- |
| Y | 71.3% (R-LFF-FV5) | 91.5% (R-LFF-FV5) | 85.7% (R-EFF-FV5) |
| Cb | 77.8% (NR-LFF-FV4) | 90.3% (R-LFF-FV5) | 88.0% (R-LFF-FV5) |
| Cr | **81.2% (NR-LFF-FV3)** | **93.8% (R-LFF-FV5)** | **90.5% (R-LFF-FV5)** |
| YCbCr | 79.0% (R-LFF-FV5) | 93.2% (R-EFF-FV3) | 88.3% (R-LFF-FV5) |

| Methodology (ACC/SENS/SPEC/PREC) | Mild | Severe | Total |
| --- | --- | --- | --- |
| HAF-DLac | 81.2%/76.6%/85.8%/84.3% (78.8%/73.2%/84.4%/82.4%)¹ | 93.8%/95.2%/92.4%/92.6% | 90.5%/91.8%/89.2%/89.5% |
| CurvLac | 69.8%/64.3%/75.3%/72.2% | 90.4%/92.5%/88.3%/88.8% | 84.5%/87.1%/81.9%/82.8% |
| CurvLBP | 73.4%/67.2%/79.6%/76.7% | 89.6%/91.9%/87.3%/87.9% | 81.7%/83.2%/80.2%/80.8% |
| ECT | 71.8%/65.9%/77.7%/74.7% | 91.2%/92.8%/89.6%/89.9% | 85.6%/87.5%/83.7%/84.3% |

| Classification measure | HAF-DLac (CaEn) | CurvLac (CaEn) | CurvLBP (CaEn) | ECT (CaEn) | HAF-DLac (KID) | CurvLac (KID) | CurvLBP (KID) | ECT (KID) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ACC | 89.3% | 75.0% | 75.0% | 82.1% | 85.1% | 74.3% | 78.4% | 75.7% |
| SENS | 90.9% | 77.3% | 77.3% | 81.8% | 85.7% | 57.1% | 71.4% | 64.3% |
| SPEC | 83.3% | 66.7% | 66.7% | 83.3% | 85.0% | 78.3% | 80.0% | 78.3% |
| PREC | 95.2% | 89.5% | 89.5% | 94.7% | 57.1% | 38.1% | 45.5% | 40.9% |

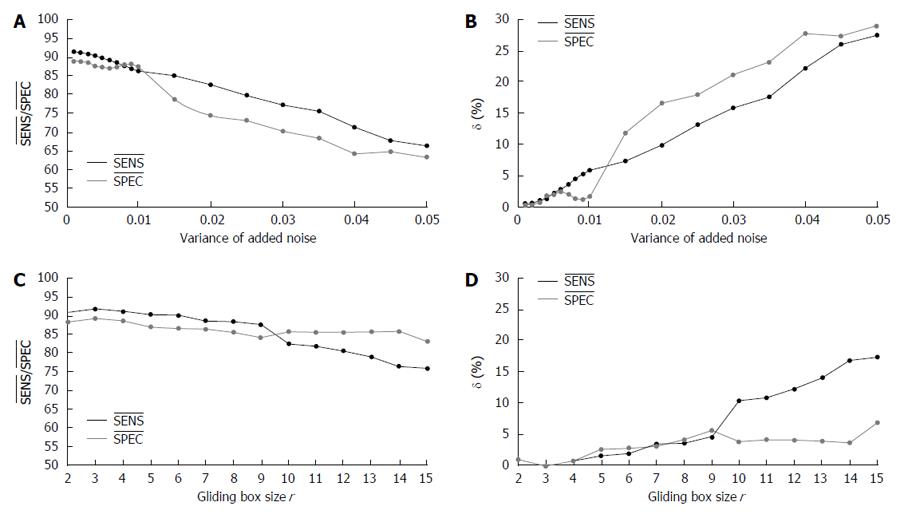

To examine the robustness of the proposed HAF-DLac approach, sensitivity analysis with regard to image noise, the parameter r of DLac, and some GA parameters (initial population (IP), generations, P0→1, and P1→0) was performed. In particular, the sensitivity of the quantities SENS and SPEC, defined as δ (X) = (|Xnew - Xbase|/Xbase) × 100%, where X is SENS or SPEC, Xbase is the base value that is achieved with the current settings and Xnew is the new value acquired after changing one parameter of the system, was estimated. Given that the δ calculation with respect to each examined parameter requires full analysis, we performed it only for the total scenario. The SENSbase and SPECbase used in this study are the highest values achieved for the total scenario R-case LFF approach and FV5DLac, i.e., 91.8% and 89.2%, respectively (see Section “Overall Performance”).
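As a worked illustration of the index, the snippet below evaluates δ against the stated base values. The inputs 83.0% and 74.4% are the SENS and SPEC values reported for noise variance 0.02 in the noise study; the helper name `delta` is illustrative.

```python
def delta(x_new, x_base):
    """Sensitivity index: |X_new - X_base| / X_base x 100 (%)."""
    if x_base == 0:
        raise ValueError("base value must be non-zero")
    return abs(x_new - x_base) / x_base * 100.0


SENS_BASE, SPEC_BASE = 91.8, 89.2  # total scenario, R-case, LFF, FV5DLac

# SENS and SPEC reported for Gaussian noise of variance 0.02
print(round(delta(83.0, SENS_BASE), 2))  # 9.59
print(round(delta(74.4, SPEC_BASE), 2))  # 16.59
```

Because δ is normalized by the base value, the same absolute drop weighs slightly more for SPEC (base 89.2%) than for SENS (base 91.8%).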

As far as resilience to noise is concerned, zero-mean Gaussian noise was added to the images. The noise variance ranged from 0.005 to 0.05, in steps of 0.001 up to 0.01 and in steps of 0.005 from 0.01 to 0.05. Figure 8A depicts the SENS and SPEC values, whereas Figure 8B shows the index δ for these metrics. The proposed system is rather robust to noise, as the sensitivities of SENS and SPEC are < 2% for noise variance 0.005 and do not exceed 6% and 2.5%, respectively, for noise variance up to 0.01. When more intense noise is added, the performance drops notably; however, even then, the 83% SENS and 74.4% SPEC obtained for noise variance 0.02 are quite acceptable.
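The variance grid and the noise injection can be sketched as follows, assuming image intensities normalized to [0, 1] and clipping of out-of-range values after the noise is added; the normalization, the clipping and the function names are assumptions, and the per-pixel pure-Python loop is chosen only to keep the example dependency-free.

```python
import random


def noise_variances():
    """Variance grid: 0.005-0.010 in steps of 0.001, then 0.015-0.050 in steps of 0.005."""
    fine = [round(0.005 + 0.001 * i, 3) for i in range(6)]       # 0.005 ... 0.010
    coarse = [round(0.010 + 0.005 * i, 3) for i in range(1, 9)]  # 0.015 ... 0.050
    return fine + coarse


def add_gaussian_noise(image, variance, seed=0):
    """Add zero-mean Gaussian noise to a [0, 1] image and clip back to [0, 1]."""
    rng = random.Random(seed)
    sigma = variance ** 0.5  # standard deviation from variance
    return [[min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in image]


if __name__ == "__main__":
    print(noise_variances())
    print(add_gaussian_noise([[0.2, 0.5], [0.9, 1.0]], 0.02))
```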

Another significant parameter of the proposed system is the size r of the DLac gliding box. Figure 8C presents the SENS and SPEC values when r ranges from 2 to 15 pixels, whereas Figure 8D depicts the corresponding sensitivities δ of SENS and SPEC. The base values correspond to r = 3. Clearly, the bigger the gliding box, the lower the performance. However, the HAF-DLac scheme exhibits remarkable robustness, since the sensitivities of SENS and SPEC are 4.5% and 5.5%, respectively, even when the gliding box size is tripled. In the extreme case of r = 15, SENS still exceeds 75% and SPEC exceeds 83%, indicating efficient performance.

Finally, Table 4 tabulates the results of the sensitivity calculations for +20% and -20% shift of four GA parameters. It is clear that the proposed approach is very robust with respect to all parameters, as the sensitivity of SENS and SPEC is less than 1% for all tested cases.

| Parameter (% change) | IP (+20) | IP (-20) | Generations (+20) | Generations (-20) | P0→1 (+20) | P0→1 (-20) | P1→0 (+20) | P1→0 (-20) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| δ (SENS) | 0.27% | 0.64% | 0.54% | 0.68% | 0.14% | 0.08% | 0.21% | 0.17% |
| δ (SPEC) | 0.41% | 0.87% | 0.81% | 0.96% | 0.17% | 0.11% | 0.11% | 0.09% |

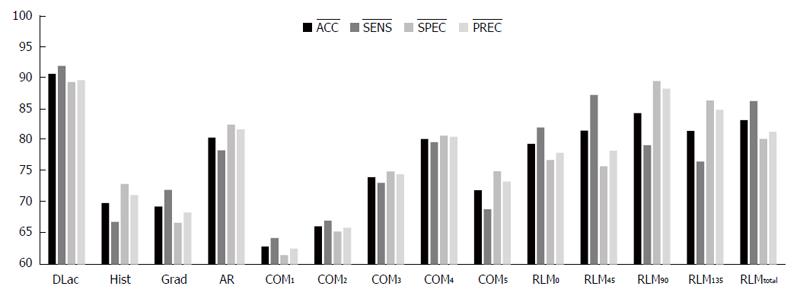

To validate the choice of DLac analysis for the extraction of texture features, a comparative study was conducted on the classification performance of various widely used statistical features, namely: nine histogram-based features (Hist); five gradient-based features (Grad); five features based on the autoregressive model (AR); 11 co-occurrence-matrix features computed for four directions (44 features in total) at five inter-pixel distances (COM1-COM5); and five run-length-matrix features for four different directions (RLM0, RLM45, RLM90 and RLM135) and their combination (RLMt)[43]. These features were calculated from the HAF-output images (R-case and LFF approach) of the Cr channel (the most efficient setup), and only the total scenario was considered. Figure 9 presents the classification results (ACC, SENS, SPEC, PREC) achieved by each of these FVs, along with the DLac-based FV (FV5DLac).
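For readers unfamiliar with co-occurrence features, the sketch below shows how a gray-level co-occurrence matrix is built for the four directions at a given inter-pixel distance and how three classical features (contrast, energy, homogeneity) are derived from it. The features in the comparison were obtained with MaZda[43], which computes 11 features per direction; its offset and normalization conventions may differ from the (row, column) steps assumed here, and the function names are hypothetical. Images are assumed to be quantized to integer levels 0..levels-1.

```python
# (row, col) step per direction; one common convention, assumed here.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def glcm(image, levels, drow, dcol):
    """Normalized (non-symmetric) gray-level co-occurrence matrix for one offset."""
    rows, cols = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    for i in range(rows):
        for j in range(cols):
            ni, nj = i + drow, j + dcol
            if 0 <= ni < rows and 0 <= nj < cols:
                counts[image[i][j]][image[ni][nj]] += 1
                total += 1
    if total == 0:
        raise ValueError("offset too large for this image")
    return [[c / total for c in row] for row in counts]


def glcm_subset(p):
    """Three classical Haralick-type features of a normalized GLCM."""
    n = len(p)
    cells = [(a, b) for a in range(n) for b in range(n)]
    return {
        "contrast": sum((a - b) ** 2 * p[a][b] for a, b in cells),
        "energy": sum(p[a][b] ** 2 for a, b in cells),
        "homogeneity": sum(p[a][b] / (1 + abs(a - b)) for a, b in cells),
    }


def cooccurrence_features(image, levels, distance):
    """Features for the four directions at one inter-pixel distance (cf. COM1-COM5)."""
    feats = {}
    for angle, (dr, dc) in OFFSETS.items():
        p = glcm(image, levels, dr * distance, dc * distance)
        for name, value in glcm_subset(p).items():
            feats[f"{name}_{angle}_d{distance}"] = value
    return feats


if __name__ == "__main__":
    stripes = [[0, 1, 0, 1] for _ in range(4)]  # vertical stripes
    print(cooccurrence_features(stripes, levels=2, distance=1))
```

On vertical stripes, the horizontal (0°) contrast is maximal while the vertical (90°) contrast is zero, which is the kind of directional information these features encode.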

In this paper, we developed the HAF-DLac scheme for the detection of CD-based lesions and tested it thoroughly on a rich dataset to assess its performance.

As the experimental results for the individual channel R-case have shown, specific DLac values alone (i.e., FV1DLac) cannot adequately describe the characteristics of CD lesions. Likewise, representing the DLac curve merely by the hyperbola parameters does not improve the results; in some cases (Y and Cb channels, severe and total scenarios) the performance even decreases, implying the loss of critical information. On the other hand, FV5DLac, which incorporates information in various formats from the entire DLac curve, significantly improves the performance of the proposed scheme. FV5DLac provides the highest classification results; however, in many cases (mild-Y/Cb/Cr, severe-Cb/Cr, total-Y), FV3DLac and/or FV4DLac, which are half the size of FV5DLac, achieve similar or slightly lower results (less than 1 pp lower ACC) at reduced complexity. The Cr component also proves the most efficient for CD lesion detection, implying that most of the CD lesion-related information is expressed by the amount of blue-greenish or fuchsia-reddish hues. This might be explained by the fact that the reflected reddish-greenish light is closely related to blood volume: the intestine walls are packed with a grid of blood vessels that is locally deformed by mucosal erosion. In contrast, the luminance plane Y is the least suitable for this task, especially when the lesions are mild. Luminance varies significantly within the GT, even for normal regions; consequently, the Y plane cannot adequately capture the modest lighting variations caused by eroded intestine walls.

As far as the NR-case is concerned, considering the performance of the FV types overall, the most powerful FVs are the second and the fourth, with the exception of the mild-Cr case, where FV3DLac is the most efficient. In contrast to the R-case, the lengthiest FV (FV5DLac) fails to classify abnormal regions correctly, possibly because its excessive length leads to the “curse of dimensionality”. Moreover, in most cases FV2DLac performs better than FV1DLac, denoting that summarizing the DLac curve in just three parameters, although it omits important information (as shown in the R-case), tends to improve the detection potential when multiple sub-images are employed simultaneously. We should also highlight the low results of the Y channel in the mild lesion scenario, implying that combining information from multiple sub-images of the low-potential luminance plane deteriorates performance. Finally, regarding the two selection approaches (EFF and LFF) in the NR-case, there is no clear winner: using features extracted from multiple structural components of the WCE images (sub-images) makes it possible to mask, to a certain extent, the shortcomings of each approach.

Considering the combined channel case, the differences in classification accuracy between the two FFs are marginal, with the exception of mild lesion detection. Similarly to the individual channel R-case, the FVs that include augmented information from the entire DLac-w curve (FV3DLac, FV4DLac and FV5DLac) perform better than the simpler FVs (FV1DLac and FV2DLac).

From an overall perspective, the Cr channel delivers the highest classification accuracy for all severity scenarios. The LFF approach and FV5DLac also provide better results for the majority of cases; this does not apply to the NR-case, however, where FV3DLac and FV4DLac are more efficient. It is also noteworthy that, although the highest ACC value (81.2%) for the Cr-mild case is achieved for the NR-case and FV3DLac, the corresponding result for the R-case and FV5DLac (the most efficient settings for the severe and total scenarios) is only 2.4 pp lower, implying a rather satisfactory behavior. Comparing the R- and NR-case results of the individual channels, we conclude that the NR-case decreases classification performance for the majority of cases: using features from individual curvelet-based sub-images results in lengthy overall FVs, and the classification process suffers from the increased complexity. The detection of mild CD lesions in the Cb and Cr channels is an exception, where NR yields better results while engaging smaller FVs, half the size of the most efficient R-case FV. The abundance of information provided by the individual sub-images leads to better discrimination of slightly eroded tissue. The results of the combined channel case show that using information simultaneously captured from all planes leads to slightly diminished performance compared to the individual Cr channel, although it surpasses the other two individual channels. This might be explained by the inclusion of misleading data from the Y channel and the tripling in size of the FVs.

Compared to other approaches, the HAF-DLac scheme demonstrates superior performance, exhibiting 4.9, 4.3, 5.5 and 5.2 pp higher accuracy, sensitivity, specificity and precision, respectively, than the second most efficient method[17] in the total case. Even more significant is the improvement in detecting mild erosions (7.8, 9.4, 6.2 and 7.6 pp in accuracy, sensitivity, specificity and precision, respectively, compared to[8]), an evaluation that none of the other approaches has conducted. The detection rates (accuracy, sensitivity, specificity and precision) for severe, clearly defined lesions reach 93.8%, 95.2%, 92.4% and 92.6%, respectively, surpassing the other efforts[6,8,17] by at least 2.6, 2.4, 2.8 and 2.7 pp, respectively. Moreover, the classification results on the CaEn and KID databases further evidence the advantage of the proposed scheme. HAF-DLac performs solidly in recognizing both kinds of tissue [20/22 lesion and 5/6 normal images (CaEn); 12/14 lesion and 51/60 normal images (KID)]. In contrast, the other approaches exhibited inferior discrimination capability, failing mainly to identify the lesion cases, particularly in the KID database. These results highlight that the HAF process combined with DLac-based features surpasses two of the most promising methodologies in the literature, which use LBP and MPEG-7 features.
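The HAF-DLac columns of Table 3 can be reproduced from the counts quoted above. The short check below assumes that lesion images are the positive class, so that misclassified normal images are false positives; the helper name `rates_from_counts` is illustrative.

```python
def rates_from_counts(tp, fn, tn, fp):
    """ACC, SENS, SPEC and PREC (in %) from confusion-matrix counts."""
    return {
        "ACC": 100 * (tp + tn) / (tp + fn + tn + fp),
        "SENS": 100 * tp / (tp + fn),
        "SPEC": 100 * tn / (tn + fp),
        "PREC": 100 * tp / (tp + fp),
    }


# Correctly classified lesion/normal images of HAF-DLac, as reported in the text.
caen = rates_from_counts(tp=20, fn=22 - 20, tn=5, fp=6 - 5)
kid = rates_from_counts(tp=12, fn=14 - 12, tn=51, fp=60 - 51)

for name, r in (("CaEn", caen), ("KID", kid)):
    print(name, {k: round(v, 1) for k, v in r.items()})
# CaEn {'ACC': 89.3, 'SENS': 90.9, 'SPEC': 83.3, 'PREC': 95.2}
# KID {'ACC': 85.1, 'SENS': 85.7, 'SPEC': 85.0, 'PREC': 57.1}
```

The computation also shows why PREC is low on KID despite high SENS and SPEC: with only 14 lesion images against 60 normal ones, the 9 false positives weigh heavily against the 12 true positives (12/21 ≈ 57.1%).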

Regarding the performance of the various feature extraction techniques, it becomes apparent (Figure 9) that the proposed FV is by far the most efficient, validating our hypothesis about the superiority of DLac analysis in extracting texture features compared to widely used statistical approaches.

Furthermore, the proposed scheme is quite efficient in terms of computational cost, requiring < 0.5 s for an ROI of 140 × 140 pixels (average size) on a 4-core, 2.67 GHz desktop computer, even with an unoptimized Matlab implementation. The time-consuming training phase is not included in this cost, since it is performed only once during the development of the application. For even more efficient realizations, other programming languages (such as C++) and multithreaded programming should be considered.

A new method, namely HAF-DLac, for CD inflammatory tissue detection using WCE images in the YCbCr color space was presented. The proposed scheme combines HAF with DLac analysis: it first processes the WCE data to enhance the underlying lesion information by incorporating the curvelet transform and GA-based techniques, and then applies feature extraction analysis that yields five different DLac-based feature vectors with increased classification potential. The dataset (800 images with normal, mild and severe CD lesion cases) was subjected to HAF-DLac analysis for the individual and combined channel cases. Extensive classification tests covering all severity scenarios showed the effectiveness of the proposed method. The comparison of HAF-DLac with other relevant WCE-based lesion recognition methods evidenced its efficiency, consistency and robustness in detecting CD lesions. This promising performance paves the way for an integrated computer-aided diagnosis system in the service of gastroenterologists.

The authors would like to thank the anonymous reviewers for the constructive comments that contributed to improving the final version of the paper.

Manuscript source: Invited manuscript

Specialty type: Gastroenterology and hepatology

Country of origin: Greece

Peer-review report classification

Grade A (Excellent): A

Grade B (Very good): 0

Grade C (Good): C, C

Grade D (Fair): 0

Grade E (Poor): 0

P- Reviewer: Hosoe N, Sakin YS, Lakatos L S- Editor: Qi Y L- Editor: A E- Editor: Wang CH

| 1. | Iddan G, Meron G, Glukhovsky A, Swain P. Wireless capsule endoscopy. Nature. 2000;405:417. |

| 2. | Rondonotti E, Soncini M, Girelli C, Ballardini G, Bianchi G, Brunati S, Centenara L, Cesari P, Cortelezzi C, Curioni S. Small bowel capsule endoscopy in clinical practice: a multicenter 7-year survey. Eur J Gastroenterol Hepatol. 2010;22:1380-1386. |

| 3. | Maieron A, Hubner D, Blaha B, Deutsch C, Schickmair T, Ziachehabi A, Kerstan E, Knoflach P, Schoefl R. Multicenter retrospective evaluation of capsule endoscopy in clinical routine. Endoscopy. 2004;36:864-868. |

| 4. | Goldberg DE. Genetic algorithms in search, optimization and machine learning. Boston, MA: Addison-Wesley Longman Publishing 1989; 1-432. |

| 5. | Candès E, Demanet L, Donoho D, Ying L. Fast discrete curvelet transforms. Multiscale Model Simul. 2006;5:861-899. |

| 6. | Eid A, Charisis VS, Hadjileontiadis LJ, Sergiadis GD. A curvelet-based lacunarity approach for ulcer detection from wireless capsule endoscopy images. Proceedings of the 26th IEEE International Symposium on Computer-Based Medical Systems; 2013 Jun 20-22. Porto, Portugal: IEEE 2013; 273-278. |

| 7. | Liedlgruber M, Uhl A. Computer-aided decision support systems for endoscopy in the gastrointestinal tract: a review. IEEE Rev Biomed Eng. 2011;4:73-88. |

| 8. | Li B, Meng M. Texture analysis for ulcer detection in capsule endoscopy images. Image Vis Comput. 2009;27:1336-1342. |

| 9. | Karargyris A, Bourbakis N. Identification of ulcers in wireless capsule endoscopy videos. Proceedings of the 6th IEEE International Symposium on Biomedical Imaging: From Nano to Macro; 2009 Jun 28-Jul 01. Boston, USA: IEEE 2009; 554-557. |

| 10. | Gevers T, Weijer J, Stokman H. Color feature detection. Color image processing: Methods and applications. Boca Raton: CRC Press 2006; 203-226. |

| 11. | Yu L, Yuen PC, Lai J. Ulcer detection in wireless capsule endoscopy images. Proceedings of the 21st IEEE International Conference on Pattern Recognition. 2012 Nov 11-15. Tsukuba, Japan: IEEE 2012; 45-48. |

| 12. | Chen Y, Lee J. Ulcer detection in wireless capsule endoscopy videos. Proceedings of the 20th ACM International Conference on Multimedia. 2012 Oct 29-Nov 2. Nara, Japan. New York: ACM 2012; 1181-1184. |

| 13. | Charisis VS, Katsimerou C, Hadjileontiadis LJ, Liatsos CN, Sergiadis GD. Computer-aided capsule endoscopy images evaluation based on color rotation and texture features: An educational tool to physicians. In: Rodrigues PP, Pechenizkiy M, Gama J, Correia RC, Liu J, Traina A, Lucas P, Soda P, editors. Proceedings of the 26th IEEE International Symposium On Computer-Based Medical Systems. 2013 Jun 20-22; Porto, Portugal. Red Hook: IEEE 2013; 203-208. |

| 14. | Charisis VS, Hadjileontiadis LJ, Liatsos CN, Mavrogiannis CC, Sergiadis GD. Capsule endoscopy image analysis using texture information from various colour models. Comput Methods Programs Biomed. 2012;107:61-74. |

| 15. | Girgis H, Mitchell B, Dassopoulos T, Mullin G, Hager G. An intelligent system to detect Crohn’s disease inflammation in wireless capsule endoscopy videos. Proceedings of the 7th IEEE International Symposium on Biomedical Imaging: From Nano to Macro. 2010 Apr 14-17. Rotterdam, The Netherlands: IEEE 2010; 1373-1376. |

| 16. | Jebarani W, Daisy VJ. Assessment of Crohn’s disease lesions in wireless capsule endoscopy images using SVM based classification. In: Proceedings of the 2013; IEEE, 2013: 303-307. |

| 17. | Kumar R, Zhao Q, Seshamani S, Mullin G, Hager G, Dassopoulos T. Assessment of Crohn’s disease lesions in wireless capsule endoscopy images. IEEE Trans Biomed Eng. 2012;59:355-362. |

| 18. | Coimbra M, Cunha J. MPEG-7 visual descriptors-contributions for automated feature extraction in capsule endoscopy. IEEE Trans Circuits Syst Video Technol. 2006;16:628-637. |

| 19. | Li B, Meng MQ. Computer-based detection of bleeding and ulcer in wireless capsule endoscopy images by chromaticity moments. Comput Biol Med. 2009;39:141-147. |

| 20. | Hwang S. Bag-of-visual-words approach to abnormal image detection in wireless capsule endoscopy videos. In: Bebis G, Richard B, Parvin B, Koracin D, Wang S, Kyungnam K, Benes B, Moreland K, Borst C, DiVerdi S, Yi-Jen C, Ming J, editors. Advances in visual computing. Lecture Notes in Computer Science 6939. Proceedings of the 7th International Symposium on Visual Computing, Part II; 2011 Sept 26-28. Las Vegas, NV, USA, New York: Springer 2011; 320-327. |

| 21. | Karargyris A, Bourbakis N. Detection of small bowel polyps and ulcers in wireless capsule endoscopy videos. IEEE Trans Biomed Eng. 2011;58:2777-2786. |

| 22. | Nawarathna R, Oh J, Muthukudage J, Tavanapong W, Wong J, de Groen PC, Tang SJ. Abnormal Image Detection in Endoscopy Videos Using a Filter Bank and Local Binary Patterns. Neurocomputing. 2014;144:70-91. |

| 23. | Iakovidis DK, Koulaouzidis A. Automatic lesion detection in capsule endoscopy based on color saliency: closer to an essential adjunct for reviewing software. Gastrointest Endosc. 2014;80:877-883. |

| 24. | Szczypiński P, Klepaczko A, Pazurek M, Daniel P. Texture and color based image segmentation and pathology detection in capsule endoscopy videos. Comput Methods Programs Biomed. 2014;113:396-411. |

| 25. | Signorelli C, Villa F, Rondonotti E, Abbiati C, Beccari G, de Franchis R. Sensitivity and specificity of the suspected blood identification system in video capsule enteroscopy. Endoscopy. 2005;37:1170-1173. |

| 26. | Kyriakos N, Karagiannis S, Galanis P, Liatsos C, Zouboulis-Vafiadis I, Georgiou E, Mavrogiannis C. Evaluation of four time-saving methods of reading capsule endoscopy videos. Eur J Gastroenterol Hepatol. 2012;24:1276-1280. |

| 27. | Krystallis C, Koulaouzidis A, Douglas S, Plevris JN. Chromoendoscopy in small bowel capsule endoscopy: Blue mode or Fuji Intelligent Colour Enhancement? Dig Liver Dis. 2011;43:953-957. |

| 28. | Haneishi H, Hasegawa T, Hosoi A, Yokoyama Y, Tsumura N, Miyake Y. System design for accurately estimating the spectral reflectance of art paintings. Appl Opt. 2000;39:6621-6632. |

| 29. | Tkalcic M, Tasic JF. Colour spaces: perceptual historical and applicational background. In: Zajc B, Tkalcic M, editors. Proceedings of the IEEE Region 8 EUROCON 2003; IEEE, 2003: 304-308. |

| 30. | Starck JL, Candès EJ, Donoho DL. The curvelet transform for image denoising. IEEE Trans Image Process. 2002;11:670-684. |

| 31. | Plotnick RE, Gardner RH, Hargrove WW, Prestegaard K, Perlmutter M. Lacunarity analysis: A general technique for the analysis of spatial patterns. Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics. 1996;53:5461-5468. |

| 32. | Dong P. Test of a new lacunarity estimation method for image texture analysis. Int J Remote Sens. 2000;21:3369-3373. |

| 33. | Plotnick RE, Gardner RH, O’Neill RV. Lacunarity indices as measures of landscape texture. Landscape Ecol. 1993;8:201-211. |

| 34. | Zaia A, Eleonori R, Maponi P, Rossi R, Murri R. MR imaging and osteoporosis: fractal lacunarity analysis of trabecular bone. IEEE Trans Inf Technol Biomed. 2006;10:484-489. |

| 35. | Hadjileontiadis LJ. A texture-based classification of crackles and squawks using lacunarity. IEEE Trans Biomed Eng. 2009;56:718-732. |

| 36. | Marquardt D. An algorithm for least squares estimation of nonlinear parameters. J Soc Indust Appl Math. 1963;11:431-441. |

| 37. | Haralick RM. Statistical and structural approaches to texture. Proc IEEE. 1979;67:786-804. |

| 38. | Given Imaging. Capsule endoscopy. 2014. Available from: http://www.capsuleendoscopy.org. |

| 39. | Iakovidis DK, Koulaouzidis A. Automatic lesion detection in wireless capsule endoscopy - A simple solution for a complex problem. Proceedings of the 2014 IEEE International Conference on Image Processing; 2014 Oct 27-30. Paris, France: IEEE 2014; 2236-2240. |

| 40. | Iakovidis DK, Koulaouzidis A. Software for enhanced video capsule endoscopy: challenges for essential progress. Nat Rev Gastroenterol Hepatol. 2015;12:172-186. |

| 41. | Koulaouzidis A, Iakovidis DK. KID: Koulaouzidis-Iakovidis Database for Capsule Endoscopy. Available from: http://is-innovation.eu/kid. |

| 42. | Cristianini N, Shawe-Taylor J. An introduction to support vector machines and other kernel-based learning methods. Cambridge: Cambridge University Press 2000; 93-124. |

| 43. | Szczypiński PM, Strzelecki M, Materka A, Klepaczko A. MaZda--a software package for image texture analysis. Comput Methods Programs Biomed. 2009;94:66-76. |