Published online Apr 28, 2022. doi: 10.35711/aimi.v3.i2.33

Peer-review started: December 20, 2021

First decision: January 26, 2022

Revised: February 20, 2022

Accepted: April 21, 2022

Article in press: April 21, 2022

Published online: April 28, 2022

Processing time: 129 Days and 8.6 Hours

Artificial intelligence (AI) has been entwined with the field of radiology ever since digital imaging began replacing films over half a century ago. These algorithms, ranging from simplistic speech-to-text dictation programs to automated interpretation neural networks, have continuously sought to revolutionize medical imaging. With the number of imaging studies outpacing the number of trained readers, AI has been implemented to streamline workflow efficiency and provide quantitative, standardized interpretation. AI relies on massive amounts of data for its algorithms to function, and with the widespread adoption of Picture Archiving and Communication Systems (PACS), imaging data is accumulating rapidly. Current AI algorithms using machine-learning technology, or computer-aided detection, have been able to successfully pool this data for clinical use, although the scope of these algorithms remains narrow. Many systems have been developed to assist the workflow of the radiologist through PACS optimization and imaging study triage; however, interpretation has generally remained a human responsibility for now. In this review article, we will summarize the current successes and limitations of AI in radiology, and explore the exciting prospects that deep-learning technology offers for the future.

Core Tip: Artificial intelligence (AI) has been an increasingly publicized subject in the field of radiology. This review will attempt to summarize the evolving philosophy and mechanisms behind the AI movement as well as the current applications, limitations, and future directions of the field.

- Citation: Fromherz MR, Makary MS. Artificial intelligence: Advances and new frontiers in medical imaging. Artif Intell Med Imaging 2022; 3(2): 33-41

- URL: https://www.wjgnet.com/2644-3260/full/v3/i2/33.htm

- DOI: https://dx.doi.org/10.35711/aimi.v3.i2.33

Advancements in artificial intelligence (AI) technology have created a stir of excitement—and trepidation—amongst professionals in radiology. With the advent of concepts such as machine learning and artificial neural networks promising instantaneous and accurate image interpretation, AI has been heralded as the next step in radiology evolution[1,2]. The ability to reduce image interpretation time and increase detection to levels beyond what is possible for the human eye could create a revolutionary, and increasingly necessary, impact on patient care across all medical disciplines.

AI in radiology has focused on improving three broad principles attributed to human limitations: efficiency, objectivity, and standardization[1,2,3]. Over the past few years there has been a continual increase in imaging orders, and it has been estimated that a radiologist must interpret an image every 3-4 s to match the demand[3,4]. This demand, combined with declining reimbursement, has put more pressure on radiologists to increase productivity[5]. Additionally, human and health system variability has long been seen as a potential target to improve standardization across the field. Who the reader is, what hospital system they work for, the time of day, and the number of scans the radiologist has already read can all result in measurable discrepancies in the accuracy and timeliness of image interpretation[3,6,7].

Despite the exciting potential of AI utilization, the fear of algorithms replacing radiologists is ever present. AI companies have grown at an astonishing rate, with 60 new Food and Drug Administration (FDA)-approved products in 2020; however, the once-foreseen AI takeover has not yet manifested[8-10]. Nonetheless, AI is making an impact, just not in the way it was originally planned. A fundamental shift has occurred in recent years in AI implementation, scope, and underlying philosophy. The idea of “replacing radiologists” is not a viable next step in AI evolution, at least for now, and the new philosophy of “working with radiologists” is one that is rapidly gaining traction[11,12]. By examining the current utilizations and limitations of AI in radiology, we can recognize the importance of this fast-rising technology and where the interaction between human and machine may be headed in the future.

The current state of AI utilization in the field of radiology varies by institution, although there are several widely adopted systems. Aligning with the newer philosophy of “working with radiologists”, many of the current AI systems are being used in a limited capacity as tools to enhance the radiologist’s workflow. Many of these AI systems fall under the category of “micro-optimizations”[13].

The primary goal of micro-optimization algorithms is to assist the radiologist in his or her daily tasks rather than to fully automate the radiologic process. Micro-optimizations can be broken down into two categories: nonpixel-based optimizations and pixel-based optimizations. By using AI to streamline the efficiency and standardization of time-consuming, mundane, or non-interpretive tasks, radiologists can better allocate their time and energy to image interpretation, consultation, and overall patient care[3,4,14]. Table 1 provides a summary of AI applications for both nonpixel-based and pixel-based optimizations.

| Workflow target | Application examples |
| --- | --- |
| Nonpixel-based | |
| Triage | Risk stratification for aortic pathology and generation of ‘aortic calcification score’ to assess for disease severity[15,16] |
| PACS display | Automated hanging protocol and comparison image generation[11] |
| Order verification | Patient medical record mining with built-in appropriateness criteria guidelines to approve or flag study orders[17-21] |
| Reporting | Automated data insertion into templates for standardized reporting of chest radiograph findings[23,24] |
| Pixel-based | |
| Segmentation | Segmentation of simple lung nodules on chest CT images[43] |
| Disease registration | PI-RADS lesion classification based on MRI image characteristics[25,26] |
| Screening | Algorithmic interpretation and classification of screening mammograms[27,28] |

Nonpixel-based optimizations refer to AI assistance in tasks that are not directly related to image interpretation. Some of these tasks include triaging patients, Picture Archiving and Communication Systems (PACS) optimizations, and standardized reporting. As an example, to better triage patients for immediate interpretation, AI systems are currently being tested for risk stratification in patients with possible aortic dissection or aneurysm rupture[15,16]. As a different example, through big data analysis, AI algorithms have started to tackle the issue of automated imaging protocol creation. By reviewing imaging study requests, AI can determine whether the study is appropriate, whether another study may be more appropriate, and whether contrast is necessary. With the ability to automatically mine the electronic medical record system and compare it to established guidelines, the system can then make the appropriate recommendation[17-19]. With an estimated 10% of all imaging studies being ordered in error, these nonpixel-based algorithms can automatically detect and eliminate erroneous study orders[20,21].
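The order-verification logic described above can be pictured as a lookup against a criteria table followed by a few record checks. The following is a minimal sketch under stated assumptions, not a clinical tool: the criteria table, the `ImagingOrder` fields, and the `review_order` function are all hypothetical names introduced for illustration, and a real system would draw on established appropriateness guidelines and the full medical record.

```python
from dataclasses import dataclass

# Illustrative lookup table only, NOT clinical guidance.
# Key: (indication, ordered study). Value: a suggested alternative study,
# or None when the ordered study is acceptable as written.
HYPOTHETICAL_CRITERIA = {
    ("suspected pulmonary embolism", "CT chest without contrast"): "CT pulmonary angiography",
    ("suspected pulmonary embolism", "CT pulmonary angiography"): None,
}


@dataclass
class ImagingOrder:
    indication: str         # reason for the study, as mined from the record
    study: str              # the study that was ordered
    uses_contrast: bool     # whether the ordered protocol includes contrast
    contrast_allergy: bool  # hypothetical flag pulled from the medical record


def review_order(order: ImagingOrder) -> str:
    """Return 'approve' or a 'flag: ...' message intended for human review."""
    key = (order.indication, order.study)
    if key not in HYPOTHETICAL_CRITERIA:
        # Unknown combinations are never auto-approved in this sketch.
        return "flag: no matching criterion, route to radiologist"
    alternative = HYPOTHETICAL_CRITERIA[key]
    if alternative is not None:
        return f"flag: consider {alternative}"
    if order.uses_contrast and order.contrast_allergy:
        return "flag: contrast ordered despite recorded allergy"
    return "approve"


if __name__ == "__main__":
    order = ImagingOrder("suspected pulmonary embolism",
                         "CT chest without contrast", False, False)
    print(review_order(order))
```

The sketch deliberately flags anything it cannot match rather than approving it, which mirrors the assistive role the text describes.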

The automatic generation of hanging protocols and standardized screen display is another target for optimization. Before image interpretation can commence, a radiologist can spend 10-60 s selecting the appropriate images for comparison[11]. With the appropriate hanging protocol and display generated automatically, image interpretation can commence immediately. Although this may at first seem like an insignificant amount of time, eliminating manual protocol selection can significantly improve efficiency and allow the radiologist’s brain power to be redirected toward actual diagnostic interpretation[11].

The standardization of reporting is one of the final areas for optimization, and one that is becoming increasingly necessary among all medical specialties in order to efficiently navigate and report in the electronic medical systems. Reporting is the final step in the radiologist’s workflow, and it is also one of the most error-prone[22]. Many micro-optimization AI algorithms are working on increasing the efficiency of reporting through the creation of automatic report generation tools including pre-selected formats specific for the study and automatic annotation. Automating and standardizing reporting can optimize radiologists’ reimbursements and save time, as demonstrated by one current chest x-ray reporting algorithm that saved radiologists an average of 8.5 h per month[23,24].

While the importance of these nonpixel-based micro-optimizations cannot be overstated, the prospect of instantaneous image interpretation is the ultimate ambition of AI. Although AI technology has not yet achieved this ability in a broad sense, the development of pixel-based micro-optimizations has been paramount in maximizing a radiologist’s workflow efficiency[14]. Some example applications of these systems involve image segmentation, reconstruction, and disease registration.

AI segmentation can automatically delineate structures and provide measurements such as organ volume or the surface area of a tumor. Taken a step further, these AI algorithms can be specialized to stage tumors and provide pre-interpreted read-outs such as PI-RADS scores for prostate cancer staging[25,26]. A study by Sanford et al[25] demonstrated a modest 40% agreement between an AI algorithm and an expert radiologist when assigning PI-RADS scores based on magnetic resonance imaging (MRI). This result was comparable with previous human inter-reader agreements. Automated segmentation and pre-interpreted read-outs may be maximally utilized in areas that have the most data, such as screening imaging studies.
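Agreement figures like the one quoted above are commonly reported either as a raw proportion of matching scores or as a chance-corrected statistic such as Cohen's kappa. The sketch below shows both calculations; the score lists are synthetic values invented for illustration, and the source does not state which statistic the cited study used.

```python
from collections import Counter


def percent_agreement(reader_a, reader_b):
    """Fraction of cases in which both readers assigned the same category."""
    if len(reader_a) != len(reader_b) or not reader_a:
        raise ValueError("readers must score the same, non-empty set of cases")
    matches = sum(1 for a, b in zip(reader_a, reader_b) if a == b)
    return matches / len(reader_a)


def cohens_kappa(reader_a, reader_b):
    """Agreement corrected for the agreement expected by chance alone."""
    n = len(reader_a)
    observed = percent_agreement(reader_a, reader_b)
    counts_a, counts_b = Counter(reader_a), Counter(reader_b)
    # Chance agreement: probability both readers pick the same category
    # if each scored independently according to their own category frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both readers used a single identical category throughout
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Synthetic PI-RADS-style scores (2-5) for ten hypothetical lesions.
    ai_scores = [2, 3, 4, 4, 5, 3, 2, 5, 4, 3]
    radiologist_scores = [2, 4, 4, 3, 5, 3, 3, 5, 5, 2]
    print(percent_agreement(ai_scores, radiologist_scores))
    print(cohens_kappa(ai_scores, radiologist_scores))
```

Kappa is lower than raw agreement whenever some matches would be expected by chance, which is one reason agreement numbers from different studies are hard to compare directly.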

Utilizing AI for screening processes helps to reduce the workload for radiologists while not over-extending the abilities of AI. As the typical screen produces categorically “positive”, “negative”, or “inconclusive” results, the complexity of the AI reads can be minimized. Using machine learning for screening detection is referred to as computer-aided detection (CADe). CADe is currently being used in screening mammography, where there is an abundance of imaging studies and a disproportionately small number of mammography-trained readers[1,2,27]. CADe highlights the area of interest, and it is then determined whether an additional diagnostic study is indicated. CADe for mammography has been around since 1998, and its implementation into clinical workflow has continued to increase, allowing radiologists to read more screening studies in less time. Along with the decreased read-time, it should be noted that several studies comparing the accuracy of CADe mammography to traditional radiologist-read mammograms have shown no discernible difference[26]. In one such study, an ensemble of top-performing AI algorithms combined with a single radiologist reader achieved an area under the curve (AUC) of 0.942, with 92% specificity, outperforming the radiologists’ specificity of 90.5%[28]. This is a representative example of new AI algorithms geared toward instantaneous, automatic interpretation.
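For readers less familiar with the metrics quoted above, the sketch below shows how sensitivity, specificity, and AUC are computed from a set of labelled cases. The labels and scores are synthetic, and the function names are introduced here only for illustration. AUC is computed with the rank-based definition: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half.

```python
def sensitivity_specificity(labels, predictions):
    """labels and predictions are 0/1 lists; returns (sensitivity, specificity)."""
    tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    tn = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


if __name__ == "__main__":
    # Synthetic screening results: 1 = cancer present, 0 = cancer absent.
    labels = [1, 1, 1, 0, 0, 0, 0, 0]
    scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1, 0.05]
    # A score of 0.5 or above is called "positive" in this example.
    predictions = [1 if s >= 0.5 else 0 for s in scores]
    print(sensitivity_specificity(labels, predictions))
    print(auc(labels, scores))
```

Sensitivity and specificity depend on the chosen threshold, whereas AUC summarizes performance across all thresholds, which is why studies often report both.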

Despite the constant development of new AI companies, advanced algorithms, and enhanced learning technology, AI has not yet become mainstream in the radiology world due to a combination of logistical and clinical challenges. The ease with which AI programs can be implemented varies widely based on the scope and technicalities of the clinical problem they aim to solve, as well as the mechanism by which they solve it. In general terms, there are two main types of AI systems, machine-learning and deep-learning, each of which has specific limitations of its own[1,29].

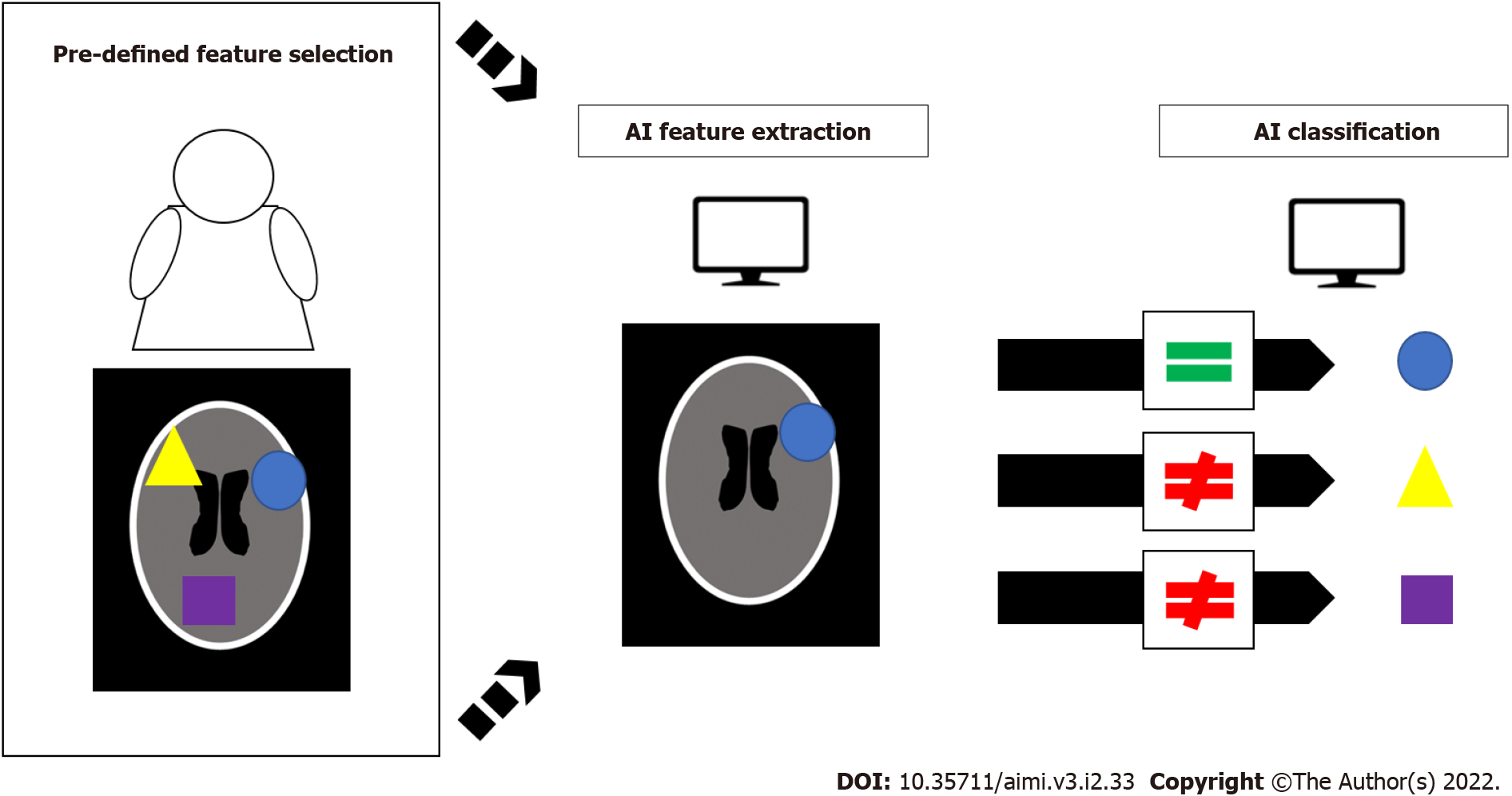

Machine-learning functions largely on the principle of pattern recognition. If the machine is able to “see” enough example image characteristics of a certain disease, it can then look at new images and recognize them based on those previously defined features. The caveat here is that these “pre-defined features”, such as tumor volume, density, etc., must be hand-fed into each specific machine-learning classifier[3]. In this way the AI does not actually learn, but rather applies the specifics of its pre-engineered programming. Consequently, machine-learning AI is intrinsically limited by these specific characteristics, which can reduce its ability to recognize image features such as rare or unusual disease presentations[30,31]. Figure 1 demonstrates a schematic example of how machine-learning AI systems utilize these pre-defined features for classification. Furthermore, as the breadth of medical knowledge continues to expand, previous CAD systems may become outdated, and therefore obsolete[30]. The theoretical solution to these hard-wired restrictions is the use of AI algorithms that do not rely on pre-engineered feature recognition, but rather can learn and adapt in a manner similar to the human brain.
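The dependence on hand-fed features can be illustrated with a deliberately simple classifier. In the sketch below, a human has decided in advance that only two features matter (volume and mean density), and the classifier assigns a new lesion to whichever class has the closest average feature values. The feature names, labels, and numbers are synthetic assumptions chosen for illustration, and a nearest-centroid rule stands in for whatever classifier a real system might use.

```python
import math


def extract_features(lesion):
    """Hand-engineered features: a human chose these two, the model did not."""
    return (lesion["volume_mm3"], lesion["mean_density_hu"])


def train_centroids(examples):
    """Average the feature vectors of each class. examples: [(lesion, label), ...]."""
    sums = {}
    for lesion, label in examples:
        features = extract_features(lesion)
        total, count = sums.get(label, ((0.0,) * len(features), 0))
        sums[label] = (tuple(t + f for t, f in zip(total, features)), count + 1)
    return {label: tuple(t / count for t in total)
            for label, (total, count) in sums.items()}


def classify(lesion, centroids):
    """Assign the class whose centroid is nearest in (unscaled) feature space."""
    features = extract_features(lesion)
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


if __name__ == "__main__":
    # Synthetic training data, not derived from any real study.
    training = [
        ({"volume_mm3": 50.0, "mean_density_hu": -20.0}, "benign"),
        ({"volume_mm3": 70.0, "mean_density_hu": 0.0}, "benign"),
        ({"volume_mm3": 400.0, "mean_density_hu": 40.0}, "suspicious"),
        ({"volume_mm3": 500.0, "mean_density_hu": 60.0}, "suspicious"),
    ]
    centroids = train_centroids(training)
    print(classify({"volume_mm3": 420.0, "mean_density_hu": 30.0}, centroids))
```

Anything that distinguishes a disease but is not captured by `extract_features` is invisible to this classifier, which is the limitation the paragraph above describes.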

Deep-learning is programmed to mimic the pattern of neural networks such as those in the human brain; in imaging, these artificial networks are most often convolutional neural networks (CNNs). The principal mechanism behind these algorithms relies on a vast quantity of data, and through this data the AI can develop its own pattern of feature recognition without the need for pre-programming from human experts. Deep-learning AI uses these features to create connections and draw conclusions in a way similar to the human brain, allowing it to operate freely from human input and thus increasing its automaticity and decreasing restrictions[3,32,33]. While in theory this method appears to be a step up from classical machine-learning technology, its reliance on data and the complexity of its mechanism impose limitations of their own.
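To make the "convolutional" part of a CNN concrete, the sketch below applies a single small filter (kernel) across a tiny image and passes the result through a ReLU activation. This is only the basic building block, written in plain Python for readability. The kernel here is hand-picked to respond to a dark-to-bright vertical edge; in a trained network the kernel weights would be learned from data, and many such filters would be stacked in layers.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            total = 0
            for di in range(k_rows):
                for dj in range(k_cols):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Keep positive responses and set negative ones to zero."""
    return [[max(0, value) for value in row] for row in feature_map]


if __name__ == "__main__":
    # A 4x4 toy "image": dark on the left, bright on the right.
    image = [
        [0, 0, 1, 1],
        [0, 0, 1, 1],
        [0, 0, 1, 1],
        [0, 0, 1, 1],
    ]
    # Hand-picked kernel that responds where intensity rises left to right.
    vertical_edge = [
        [-1, 1],
        [-1, 1],
    ]
    for row in relu(conv2d(image, vertical_edge)):
        print(row)
```

The output is largest in the column where the edge sits and zero elsewhere, showing how a filter turns raw pixels into a map of where a particular pattern occurs.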

With the wide implementation of PACS and an ever-increasing number of medical images, there is no shortage of data for AI algorithms to mine[34]. The issue is not quantity but quality. Different PACS, different imaging machine manufacturers, and different protocols can all affect the generalizability of an AI algorithm. These variations in image reconstruction, segmentation, and labelling can have adverse effects on the AI’s ability to learn, and the process of standardization across these variables would be a time-consuming and expensive task. This is one of the reasons for the current narrow use of AI in clinical practice. Currently approved AI programs only function with specific computed tomography (CT) imager models, specific PACS, and specific disease processes. With such a narrow clinical window, AI in its current form is limited in scope[30,31]. If multiple different AI systems are needed for each specific pathology, the process of creating and implementing these systems may not be fiscally feasible[35]. Even with implementation, a gap in the detection of rare diseases would still exist.

Questions regarding the mechanism by which deep-learning functions can also create additional limitations, specifically regarding FDA approval and the accuracy of the AI’s results[8,36]. The mechanism is extremely complex, and in many instances, the exact way in which the AI forms these CNNs is either unknown or proprietary. If the way the AI algorithm produces its results is not well understood, this raises the question of whether its results can be trusted[8,36,37]. This question has haunted AI since its inception, and whether health professionals and patients would be willing to put their faith in the recommendation of a 100% computer-controlled radiologic study is not easy to answer. A variety of comparison studies have been conducted to determine whether AI accuracy is comparable to that of human readers, and the results have been mixed.

In the previously mentioned Schaffter et al[28] study on breast cancer detection, no single AI algorithm was able to outperform the radiologists, with a specificity of 66.1% for the top-performing algorithm compared to 90.5% for the radiologists. In a breast cancer detection study using a different AI system, the AI outperformed the radiologists with an AUC of 0.740 compared to the radiologists’ AUC of 0.625[38]. In a study comparing chest radiograph interpretation, AI outperformed the radiologists on detection of pulmonary edema, underperformed on detection of consolidation, and had comparable performance for detection of pleural effusions[39]. These studies collectively demonstrate that AI systems have mixed performance compared to human radiologists.

The utilization of different algorithms, training datasets, and radiologist experience in these studies makes drawing conclusions about AI’s general trustworthiness difficult. Concerns such as these are why the shift toward micro-optimizations has been an attractive one for the interim; however, as new technologies are developed and deep-learning systems are polished, the future of AI continues to push the boundaries of possibility.

The future of AI in radiology is constantly evolving, and with new computer systems, implementation targets, and algorithms being developed seemingly by the day, there is no discernible end to what is possible[8-10]. Within PACS, deep learning AI could theoretically be implemented wherever large quantities of data are available, although as previously stated there are several limitations to deep learning technology. With the interconnectivity, digitization, and increasing data pool in modern radiology, the limitations of deep-learning may slowly start to be overcome, and the use of micro-optimization may ramp up in scale.

The next phase in AI utilization will likely continue the trend of micro-optimization, but with increased efficiency. As hospital systems become more integrated, with imaging devices and PACS being able to directly communicate with each other, it would only make sense that the AI algorithms within these systems do the same. With AI’s current narrow clinical usage, each system excels at only one specific task[30,31]. By combining these systems, the scope of each can be summated into a larger, more efficient system. For example, a lung cancer screening CT reconstruction algorithm could be used alongside a hanging protocol algorithm, with CADe for detection, and another algorithm for report generation[40]. Until a more encompassing system is created, combining existing micro-optimizations can scale efficiency in clinical workflow.
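The idea of chaining narrow systems can be sketched as a simple pipeline in which each micro-optimization takes a study record, adds its own output, and hands the record to the next step. Everything in the sketch below is a placeholder: the step functions are hypothetical stubs that only annotate a dictionary, not real reconstruction, display, detection, or reporting algorithms.

```python
from typing import Callable, Dict, List

Study = Dict[str, object]
Step = Callable[[Study], Study]


def reconstruct(study: Study) -> Study:
    # Stub standing in for a reconstruction algorithm.
    return {**study, "reconstructed": True}


def apply_hanging_protocol(study: Study) -> Study:
    # Stub standing in for automated display layout selection.
    return {**study, "layout": f"{study['modality']}-default"}


def detect_candidates(study: Study) -> Study:
    # Stub standing in for CADe; a real detector would return marked regions.
    return {**study, "candidates": []}


def draft_report(study: Study) -> Study:
    # Stub standing in for templated report generation.
    count = len(study["candidates"])
    return {**study, "draft_report": f"{count} candidate finding(s) flagged for review"}


def run_pipeline(study: Study, steps: List[Step]) -> Study:
    """Apply each narrow step in order; every step sees the previous outputs."""
    for step in steps:
        study = step(study)
    return study


if __name__ == "__main__":
    steps = [reconstruct, apply_hanging_protocol, detect_candidates, draft_report]
    result = run_pipeline({"id": "study-001", "modality": "CT"}, steps)
    print(result["draft_report"])
```

Because each step only reads and extends a shared record, individual algorithms can be swapped out without changing the others, which is the practical appeal of combining existing micro-optimizations.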

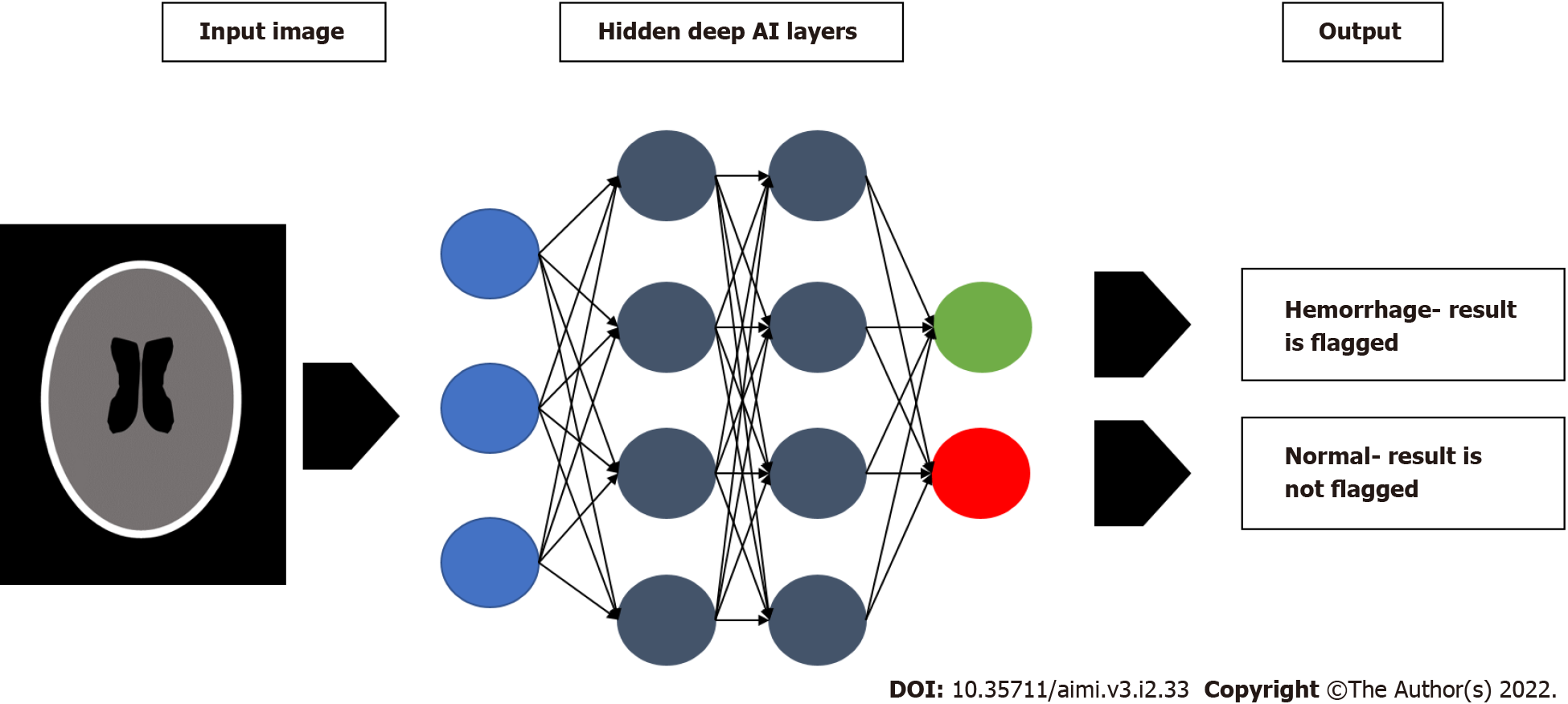

Despite the profound promise of deep learning, it has yet to see widespread clinical utilization. That being said, the power behind deep learning is data, and the amount of available data is continuously growing. As we gather more high-quality data, deep learning systems should become more powerful, increasing their usage potential. The full potential of deep learning is still unknown; however, there are several promising applications in detection and automated disease monitoring. One of these applications is the identification of incidental findings. When a radiologist is examining a trauma study, the AI system can detect incidental pulmonary nodules, allowing the radiologist to focus on the primary clinical issue without overlooking other findings[41,42,43]. Looking to improve upon current CAD systems, utilizing deep learning AI for triage is another attractive target, where the urgency of a given study is prioritized and the study is then sent to a radiologist for final interpretation. These algorithms pool hundreds of thousands of imaging studies along with their subsequent reports, and use this information to train their CNNs. In a study of one such algorithm on assigning priority to adult chest radiographs, AI was able to assign priority with a sensitivity of 71% and a specificity of 95%. Importantly, the time taken to report critical findings was reduced significantly, from 11.2 to 2.7 days[32]. Another study on triaging patients based on head CT findings produced similar results, with an AUC of 0.92 for accurately detecting intracranial hemorrhage[44]. Figure 2 is a schematic example demonstrating this type of AI triage system. The ability of the system to distinguish between ‘normal’ and ‘abnormal’ accurately, and then further stratify ‘abnormal’ into severity categories, is a promising step toward automated interpretation[32,44].
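The triage workflow described above amounts to reordering the reading worklist by an AI-assigned urgency category while leaving final interpretation to the radiologist. The sketch below uses a priority queue to show the reordering step only. The category names, the `Worklist` class, and the study identifiers are assumptions made for illustration, and the AI model that would assign each category is not modelled here.

```python
import heapq
from itertools import count

# Hypothetical severity categories; lower number means read sooner.
PRIORITY = {"critical": 0, "urgent": 1, "non-urgent": 2, "normal": 3}


class Worklist:
    """Reading queue ordered by AI-assigned category, then by arrival order."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker keeps first-come order within a category

    def add(self, study_id, ai_category):
        entry = (PRIORITY[ai_category], next(self._arrival), study_id)
        heapq.heappush(self._heap, entry)

    def next_study(self):
        """Return the study the radiologist should interpret next."""
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


if __name__ == "__main__":
    worklist = Worklist()
    worklist.add("study-A", "normal")
    worklist.add("study-B", "critical")
    worklist.add("study-C", "urgent")
    worklist.add("study-D", "critical")
    while len(worklist):
        print(worklist.next_study())
```

Studies flagged as critical move to the front regardless of when they arrived, which is the mechanism by which reporting delays for critical findings can shrink even when total reading capacity is unchanged.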

The prospect of monitoring disease progression is a more complicated one, but the ability of a deep learning system to accumulate and track data changes over time makes this an attractive target. These systems may also have the ability to automatically adjust for changes in patient position or body habitus at the times the studies were conducted[3]. One of the obvious applications for this is oncology, with AI models already demonstrating their ability to accurately measure therapeutic response and tumor recurrence[45,46]. Throughout the coronavirus disease 2019 (COVID-19) pandemic, the ability to track disease progression has been crucial for medical decision making. Unfortunately, the wide variability in an individual’s disease course has been difficult to predict. To address this problem, several deep learning systems have been tested to identify minute chest CT changes based on quantitative pixel analysis, giving us a more sophisticated look into the pathophysiology of the disease[47-49]. Not only does this present the potential to make educated decisions for COVID-19 patients regarding the need for hospitalization and allocation of resources, but the pandemic in general has further stressed the need for increased efficiency in radiology during times of unprecedented volume.

As the role of AI in radiology continues to advance and diversify, the potential for revolutionary clinical impact persists. One of the most important factors for the continued development of AI in radiology is achieving widespread implementation, and to achieve this AI must be embraced by radiologists. Currently, only an estimated 30% of radiologists use AI in day-to-day workflow[50]. With the shift of AI philosophy away from replacing radiologists, the view of AI as a threat to fear may be replaced with its view as a tool to exploit. As more algorithms are approved, more studies published, and more systems implemented into clinical practice, radiologists and trainees alike need to educate themselves on what AI can do for them and their patients. When radiologists and AI learn to work together, the potential clinical benefits of a human-machine symbiosis can be fully realized.

Provenance and peer review: Invited article; Externally peer reviewed.

Peer-review model: Single blind

Specialty type: Radiology, nuclear medicine and medical imaging

Country/Territory of origin: United States

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): B

Grade C (Good): C, C

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Dabbakuti JRKKK, India; Liu Y, China; Wan XH, China

S-Editor: Liu JH

L-Editor: A

P-Editor: Liu JH

| 1. | Oakden-Rayner L. The Rebirth of CAD: How Is Modern AI Different from the CAD We Know? Radiol Artif Intell. 2019;1:e180089. |

| 2. | Mun SK, Wong KH, Lo SB, Li Y, Bayarsaikhan S. Artificial Intelligence for the Future Radiology Diagnostic Service. Front Mol Biosci. 2020;7:614258. |

| 3. | Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18:500-510. |

| 4. | Zha N, Patlas MN, Duszak R Jr. Radiologist Burnout Is Not Just Isolated to the United States: Perspectives From Canada. J Am Coll Radiol. 2019;16:121-123. |

| 5. | Stempniak M. “Grave Concern”: Radiologist Reimbursement Expected to Plummet after CMS Clinical Labor Wage Update, August 16, 2021. Available from: https://www.radiologybusiness.com/topics/economics/radiologist-reimbursement-cms-clinical-labor-wage. |

| 6. | Patel AG, Pizzitola VJ, Johnson CD, Zhang N, Patel MD. Radiologists Make More Errors Interpreting Off-Hours Body CT Studies during Overnight Assignments as Compared with Daytime Assignments. Radiology. 2020;297:E281. |

| 7. | Hanna TN, Lamoureux C, Krupinski EA, Weber S, Johnson JO. Effect of Shift, Schedule, and Volume on Interpretive Accuracy: A Retrospective Analysis of 2.9 Million Radiologic Examinations. Radiology. 2018;287:205-212. |

| 8. | Benjamens S, Dhunnoo P, Meskó B. The state of artificial intelligence-based FDA-approved medical devices and algorithms: an online database. NPJ Digit Med. 2020;3:118. |

| 9. | Chen MM, Golding LP, Nicola GN. Who Will Pay for AI? Radiol Artif Intell. 2021;3:e210030. |

| 10. | Bloom J, Dyrda L. 100+ artificial intelligence companies to know in healthcare. Beckers Hospital Review. Published July 19, 2019. Accessed August 4, 2020. Available from: https://www.beckershospitalreview.com/Lists/100-artificial-intelligence-companies-to-know-in-healthcare-2019.html. |

| 11. | Langlotz CP. Will Artificial Intelligence Replace Radiologists? Radiol Artif Intell. 2019;1:e190058. |

| 12. | Siwicki B. Mass General Brigham and the Future of AI in Radiology. Healthcare IT News, May 10, 2021. Available from: https://www.healthcareitnews.com/news/mass-general-brigham-and-future-ai-radiology. |

| 13. | Gimenez F. Real-Time Interpretation: The next Frontier in Radiology AI - MedCity News. Medcitynews, July 22, 2021. Available from: https://medcitynews.com/2021/07/real-time-interpretation-the-next-frontier-in-radiology-ai/. |

| 14. | Do HM, Spear LG, Nikpanah M, Mirmomen SM, Machado LB, Toscano AP, Turkbey B, Bagheri MH, Gulley JL, Folio LR. Augmented Radiologist Workflow Improves Report Value and Saves Time: A Potential Model for Implementation of Artificial Intelligence. Acad Radiol. 2020;27:96-105. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 26] [Cited by in RCA: 40] [Article Influence: 8.0] [Reference Citation Analysis (0)] |

| 15. | Hahn LD, Baeumler K, Hsiao A. Artificial intelligence and machine learning in aortic disease. Curr Opin Cardiol. 2021;36:695-703. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 9] [Cited by in RCA: 26] [Article Influence: 6.5] [Reference Citation Analysis (0)] |

| 16. | Chin CW, Pawade TA, Newby DE, Dweck MR. Risk Stratification in Patients With Aortic Stenosis Using Novel Imaging Approaches. Circ Cardiovasc Imaging 2015; 8: e003421. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 31] [Cited by in RCA: 40] [Article Influence: 4.0] [Reference Citation Analysis (0)] |

| 17. | Desai V, Flanders A, Zoga AC. Leveraging Technology to Improve Radiology Workflow. Semin Musculoskelet Radiol. 2018;22:528-539. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 6] [Cited by in RCA: 4] [Article Influence: 0.6] [Reference Citation Analysis (0)] |

| 18. | Hassanpour S, Langlotz CP. Information extraction from multi-institutional radiology reports. Artif Intell Med. 2016;66:29-39. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 106] [Cited by in RCA: 98] [Article Influence: 10.9] [Reference Citation Analysis (0)] |

| 19. | Bhatia N, Trivedi H, Safdar N, Heilbrun ME. Artificial Intelligence in Quality Improvement: Reviewing Uses of Artificial Intelligence in Noninterpretative Processes from Clinical Decision Support to Education and Feedback. J Am Coll Radiol. 2020;17:1382-1387. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 8] [Cited by in RCA: 14] [Article Influence: 2.8] [Reference Citation Analysis (0)] |

| 20. | Bernardy M, Ullrich CG, Rawson JV, Allen B Jr, Thrall JH, Keysor KJ, James C, Boyes JA, Saunders WM, Lomers W, Mollura DJ, Pyatt RS Jr, Taxin RN, Mabry MR. Strategies for managing imaging utilization. J Am Coll Radiol. 2009;6:844-850. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 44] [Cited by in RCA: 48] [Article Influence: 3.2] [Reference Citation Analysis (0)] |

| 21. | Lehnert BE, Bree RL. Analysis of appropriateness of outpatient CT and MRI referred from primary care clinics at an academic medical center: how critical is the need for improved decision support? J Am Coll Radiol. 2010;7:192-197. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 192] [Cited by in RCA: 213] [Article Influence: 14.2] [Reference Citation Analysis (0)] |

| 22. | Onder O, Yarasir Y, Azizova A, Durhan G, Onur MR, Ariyurek OM. Errors, discrepancies and underlying bias in radiology with case examples: a pictorial review. Insights Imaging. 2021;12:51. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 11] [Cited by in RCA: 41] [Article Influence: 10.3] [Reference Citation Analysis (0)] |

| 23. | Lakhani P, Prater AB, Hutson RK, Andriole KP, Dreyer KJ, Morey J, Prevedello LM, Clark TJ, Geis JR, Itri JN, Hawkins CM. Machine Learning in Radiology: Applications Beyond Image Interpretation. J Am Coll Radiol. 2018;15:350-359. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 127] [Cited by in RCA: 144] [Article Influence: 18.0] [Reference Citation Analysis (0)] |

| 24. | Chung CY, Makeeva V, Yan J, Prater AB, Duszak R Jr, Safdar NM, Heilbrun ME. Improving Billing Accuracy Through Enterprise-Wide Standardized Structured Reporting With Cross-Divisional Shared Templates. J Am Coll Radiol. 2020;17:157-164. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 11] [Cited by in RCA: 11] [Article Influence: 2.8] [Reference Citation Analysis (0)] |

| 25. | Sanford T, Harmon SA, Turkbey EB, Kesani D, Tuncer S, Madariaga M, Yang C, Sackett J, Mehralivand S, Yan P, Xu S, Wood BJ, Merino MJ, Pinto PA, Choyke PL, Turkbey B. Deep-Learning-Based Artificial Intelligence for PI-RADS Classification to Assist Multiparametric Prostate MRI Interpretation: A Development Study. J Magn Reson Imaging. 2020;52:1499-1507. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 57] [Cited by in RCA: 57] [Article Influence: 11.4] [Reference Citation Analysis (0)] |

| 26. | Winkel DJ, Wetterauer C, Matthias MO, Lou B, Shi B, Kamen A, Comaniciu D, Seifert HH, Rentsch CA, Boll DT. Autonomous Detection and Classification of PI-RADS Lesions in an MRI Screening Population Incorporating Multicenter-Labeled Deep Learning and Biparametric Imaging: Proof of Concept. Diagnostics (Basel) 2020; 10. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 18] [Cited by in RCA: 34] [Article Influence: 6.8] [Reference Citation Analysis (0)] |

| 27. | Raya-Povedano JL, Romero-Martín S, Elías-Cabot E, Gubern-Mérida A, Rodríguez-Ruiz A, Álvarez-Benito M. AI-based Strategies to Reduce Workload in Breast Cancer Screening with Mammography and Tomosynthesis: A Retrospective Evaluation. Radiology. 2021;300:57-65. |

| 28. | Schaffter T, Buist DSM, Lee CI, Nikulin Y, Ribli D, Guan Y, Lotter W, Jie Z, Du H, Wang S, Feng J, Feng M, Kim HE, Albiol F, Albiol A, Morrell S, Wojna Z, Ahsen ME, Asif U, Jimeno Yepes A, Yohanandan S, Rabinovici-Cohen S, Yi D, Hoff B, Yu T, Chaibub Neto E, Rubin DL, Lindholm P, Margolies LR, McBride RB, Rothstein JH, Sieh W, Ben-Ari R, Harrer S, Trister A, Friend S, Norman T, Sahiner B, Strand F, Guinney J, Stolovitzky G; and the DM DREAM Consortium, Mackey L, Cahoon J, Shen L, Sohn JH, Trivedi H, Shen Y, Buturovic L, Pereira JC, Cardoso JS, Castro E, Kalleberg KT, Pelka O, Nedjar I, Geras KJ, Nensa F, Goan E, Koitka S, Caballero L, Cox DD, Krishnaswamy P, Pandey G, Friedrich CM, Perrin D, Fookes C, Shi B, Cardoso Negrie G, Kawczynski M, Cho K, Khoo CS, Lo JY, Sorensen AG, Jung H. Evaluation of Combined Artificial Intelligence and Radiologist Assessment to Interpret Screening Mammograms. JAMA Netw Open. 2020;3:e200265. |

| 29. | Do S, Song KD, Chung JW. Basics of Deep Learning: A Radiologist's Guide to Understanding Published Radiology Articles on Deep Learning. Korean J Radiol. 2020;21:33-41. |

| 30. | Futoma J, Simons M, Panch T, Doshi-Velez F, Celi LA. The myth of generalisability in clinical research and machine learning in health care. Lancet Digit Health. 2020;2:e489-e492. |

| 31. | Zech JR, Badgeley MA, Liu M, Costa AB, Titano JJ, Oermann EK. Variable generalization performance of a deep learning model to detect pneumonia in chest radiographs: A cross-sectional study. PLoS Med. 2018;15:e1002683. |

| 32. | Annarumma M, Withey SJ, Bakewell RJ, Pesce E, Goh V, Montana G. Automated Triaging of Adult Chest Radiographs with Deep Artificial Neural Networks. Radiology. 2019;291:196-202. |

| 33. | Shen D, Wu G, Suk HI. Deep Learning in Medical Image Analysis. Annu Rev Biomed Eng. 2017;19:221-248. |

| 34. | Barlow RD. Larger Volume Data Sets Redefining PACS. Imaging Technology News, June 12, 2008. Available from: http://www.itnonline.com/article/Larger-volume-data-sets-redefining-pacs. |

| 35. | Tadavarthi Y, Vey B, Krupinski E, Prater A, Gichoya J, Safdar N, Trivedi H. The State of Radiology AI: Considerations for Purchase Decisions and Current Market Offerings. Radiol Artif Intell. 2020;2:e200004. |

| 36. | Aggarwal R, Sounderajah V, Martin G, Ting DSW, Karthikesalingam A, King D, Ashrafian H, Darzi A. Diagnostic accuracy of deep learning in medical imaging: a systematic review and meta-analysis. NPJ Digit Med. 2021;4:65. |

| 37. | Chartrand G, Cheng PM, Vorontsov E, Drozdzal M, Turcotte S, Pal CJ, Kadoury S, Tang A. Deep Learning: A Primer for Radiologists. Radiographics. 2017;37:2113-2131. |

| 38. | McKinney SM, Sieniek M, Godbole V, Godwin J, Antropova N, Ashrafian H, Back T, Chesus M, Corrado GS, Darzi A, Etemadi M, Garcia-Vicente F, Gilbert FJ, Halling-Brown M, Hassabis D, Jansen S, Karthikesalingam A, Kelly CJ, King D, Ledsam JR, Melnick D, Mostofi H, Peng L, Reicher JJ, Romera-Paredes B, Sidebottom R, Suleyman M, Tse D, Young KC, De Fauw J, Shetty S. International evaluation of an AI system for breast cancer screening. Nature. 2020;577:89-94. |

| 39. | Wu JT, Wong KCL, Gur Y, Ansari N, Karargyris A, Sharma A, Morris M, Saboury B, Ahmad H, Boyko O, Syed A, Jadhav A, Wang H, Pillai A, Kashyap S, Moradi M, Syeda-Mahmood T. Comparison of Chest Radiograph Interpretations by Artificial Intelligence Algorithm vs Radiology Residents. JAMA Netw Open. 2020;3:e2022779. |

| 40. | Svoboda E. Artificial intelligence is improving the detection of lung cancer. Nature. 2020;587:S20-S22. |

| 41. | Cui S, Ming S, Lin Y, Chen F, Shen Q, Li H, Chen G, Gong X, Wang H. Development and clinical application of deep learning model for lung nodules screening on CT images. Sci Rep. 2020;10:13657. |

| 42. | Ottawa (ON): Canadian Agency for Drugs and Technologies in Health. Artificial Intelligence for Classification of Lung Nodules: A Review of Clinical Utility, Diagnostic Accuracy, Cost-Effectiveness, and Guidelines [Internet]. 2020-Jan-22. |

| 43. | Weikert T, Akinci D'Antonoli T, Bremerich J, Stieltjes B, Sommer G, Sauter AW. Evaluation of an AI-Powered Lung Nodule Algorithm for Detection and 3D Segmentation of Primary Lung Tumors. Contrast Media Mol Imaging. 2019;2019:1545747. |

| 44. | Chilamkurthy S, Ghosh R, Tanamala S, Biviji M, Campeau NG, Venugopal VK, Mahajan V, Rao P, Warier P. Deep learning algorithms for detection of critical findings in head CT scans: a retrospective study. Lancet. 2018;392:2388-2396. |

| 45. | Liu Z, Wang S, Dong D, Wei J, Fang C, Zhou X, Sun K, Li L, Li B, Wang M, Tian J. The Applications of Radiomics in Precision Diagnosis and Treatment of Oncology: Opportunities and Challenges. Theranostics. 2019;9:1303-1322. |

| 46. | Bi WL, Hosny A, Schabath MB, Giger ML, Birkbak NJ, Mehrtash A, Allison T, Arnaout O, Abbosh C, Dunn IF, Mak RH, Tamimi RM, Tempany CM, Swanton C, Hoffmann U, Schwartz LH, Gillies RJ, Huang RY, Aerts HJWL. Artificial intelligence in cancer imaging: Clinical challenges and applications. CA Cancer J Clin. 2019;69:127-157. |

| 47. | Wynants L, Van Calster B, Collins GS, Riley RD, Heinze G, Schuit E, Bonten MMJ, Dahly DL, Damen JAA, Debray TPA, de Jong VMT, De Vos M, Dhiman P, Haller MC, Harhay MO, Henckaerts L, Heus P, Kammer M, Kreuzberger N, Lohmann A, Luijken K, Ma J, Martin GP, McLernon DJ, Andaur Navarro CL, Reitsma JB, Sergeant JC, Shi C, Skoetz N, Smits LJM, Snell KIE, Sperrin M, Spijker R, Steyerberg EW, Takada T, Tzoulaki I, van Kuijk SMJ, van Bussel B, van der Horst ICC, van Royen FS, Verbakel JY, Wallisch C, Wilkinson J, Wolff R, Hooft L, Moons KGM, van Smeden M. Prediction models for diagnosis and prognosis of covid-19: systematic review and critical appraisal. BMJ. 2020;369:m1328. |

| 48. | Huang L, Han R, Ai T, Yu P, Kang H, Tao Q, Xia L. Serial Quantitative Chest CT Assessment of COVID-19: A Deep Learning Approach. Radiol Cardiothorac Imaging. 2020;2:e200075. |

| 49. | Li Z, Zhong Z, Li Y, Zhang T, Gao L, Jin D, Sun Y, Ye X, Yu L, Hu Z, Xiao J, Huang L, Tang Y. From community-acquired pneumonia to COVID-19: a deep learning-based method for quantitative analysis of COVID-19 on thick-section CT scans. Eur Radiol. 2020;30:6828-6837. |

| 50. | Allen B, Agarwal S, Coombs L, Wald C, Dreyer K. 2020 ACR Data Science Institute Artificial Intelligence Survey. J Am Coll Radiol. 2021;18:1153-1159. |